Windows Enterprise Client Boot and Logon Optimization – Part 3, Trace Capture and Benchmarking

This post continues the series that started here.

In the previous post, I discussed instrumentation and tools, and we walked through the installation of the Windows Performance Toolkit (WPT).

In this post, I want to discuss using WPT to capture boot and logon statistics and how that information may be used to develop a process for benchmarking your Operating System build as you design it.

Preparing for a Trace

Before we get into capturing a boot trace, I want to call out a few important prerequisites.

- Match the WPT architecture to the Operating System you want to benchmark (see the previous post)

- You must have local admin rights on the system to capture a trace

- Consider the use of Autologon

The first two points are fairly clear, but I want to expand on the use of Autologon -

During a boot trace, the instrumentation captures data throughout boot and logon. If a system is left at the logon screen for a prolonged period, the trace will be longer and the timing will be skewed. It's certainly possible to extract information from the trace that tells you how long this wait was, but it's an extra step and the information isn't immediately accessible. In the interest of keeping things simple and helping you create a robust process, I advocate the use of Autologon while the system is being benchmarked.

N.B. The username and password are stored in clear text in the registry. It's very important that you remove the Autologon registry entries after you've completed benchmarking.

To enable Autologon, refer to the knowledge base article "How to turn on automatic logon in Windows XP" (this article is still valid for supported versions of Windows).
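If you want to script the registry side of this, the Python sketch below builds .reg text that sets the Winlogon values the KB article describes (AutoAdminLogon, DefaultUserName, DefaultPassword and DefaultDomainName). It also builds a second file that turns Autologon off again and deletes the clear-text password. The helper names are hypothetical, and you should check the value names against the article for your version of Windows before importing anything.

```python
# Hypothetical helpers: build .reg text to switch Autologon on and off.
# Value names follow the Autologon KB article (assumed, verify for your OS).
WINLOGON_KEY = r"HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows NT\CurrentVersion\Winlogon"
REG_HEADER = "Windows Registry Editor Version 5.00"


def reg_string(value: str) -> str:
    """Quote a REG_SZ value, escaping backslashes and double quotes."""
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return '"' + escaped + '"'


def enable_autologon_reg(user: str, password: str, domain: str) -> str:
    """Return .reg text that enables Autologon for the given account."""
    lines = [
        REG_HEADER,
        "",
        "[" + WINLOGON_KEY + "]",
        '"AutoAdminLogon"=' + reg_string("1"),
        '"DefaultUserName"=' + reg_string(user),
        # Stored in clear text: remove it as soon as benchmarking ends.
        '"DefaultPassword"=' + reg_string(password),
        '"DefaultDomainName"=' + reg_string(domain),
        "",
    ]
    return "\r\n".join(lines)


def disable_autologon_reg() -> str:
    """Return .reg text that turns Autologon off and deletes the password."""
    lines = [
        REG_HEADER,
        "",
        "[" + WINLOGON_KEY + "]",
        '"AutoAdminLogon"=' + reg_string("0"),
        '"DefaultPassword"=-',  # "=-" deletes the value in .reg syntax
        "",
    ]
    return "\r\n".join(lines)
```

Save each string to a file with a .reg extension and import it with regedit as an administrator. Import the disable file as soon as benchmarking is finished.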

Capturing a Timing Trace

Everything I’ve discussed to this point has been getting you ready to capture a timing trace. A timing trace makes use of trace options that minimise performance overhead, log to a .ETL file and establish the timing of boot phases.

To capture a timing trace, you begin by launching Windows Performance Recorder (WPRUI.exe). This will be in your environment path if you completed a full installation of WPT. If not, just browse to your WPT folder and launch it.

The options I’ll recommend for a timing trace are shown here -

With these options selected, simply click Start. Windows Performance Recorder will ask you to select a trace file destination and to provide an optional description for the trace -

Once you click Save, you will be prompted to reboot. After the reboot and logon (with or without Autologon) complete, the trace continues for a further 120 seconds.

After this time, the trace is saved to the location you specified earlier.

Simple Trace Analysis

The first thing you’ll want to do after capturing a timing trace is to extract some useful information from it. In line with keeping it simple, I’m going to show you how XPerf.exe may be used as a Consumer to process the trace. Let’s start with -

xperf.exe -i <trace_file.etl> -a tracestats

This simple command provides some information about the trace and the system on which it was captured. For example:

Here I can see that the trace was captured from a system with 4 processors running at 2660 MHz and that the total trace time was a little over 3 minutes.

So let’s get down to it. How can I extract the timing information needed to benchmark the system? Again, XPerf.exe is the answer -

xperf -i <trace_file.etl> -o Summary.xml -a boot

The "-o" in this command specifies an output file. The output of boot analysis is in XML format, so I chose an output file with a .xml extension.
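If you capture many traces, it may help to script the two xperf commands. The following is a minimal Python sketch that assumes xperf.exe is on your PATH, as it is after a full WPT installation. The function names and the <trace>_Summary.xml naming scheme are my own hypothetical choices.

```python
import subprocess
from pathlib import Path


def xperf_commands(trace: Path, summary: Path) -> list[list[str]]:
    """Build the tracestats and boot analysis commands for one .etl file."""
    return [
        ["xperf.exe", "-i", str(trace), "-a", "tracestats"],
        ["xperf.exe", "-i", str(trace), "-o", str(summary), "-a", "boot"],
    ]


def analyse_trace(trace: Path) -> tuple[str, Path]:
    """Run both commands; return the tracestats text and the summary path.

    Assumes xperf.exe is on PATH. The <name>_Summary.xml naming is hypothetical.
    """
    summary = trace.with_name(trace.stem + "_Summary.xml")
    stats_cmd, boot_cmd = xperf_commands(trace, summary)
    # check=True raises CalledProcessError if xperf exits with a non-zero code.
    stats = subprocess.run(stats_cmd, check=True, capture_output=True, text=True)
    subprocess.run(boot_cmd, check=True)
    return stats.stdout, summary
```

For example, you could loop over Path(r"C:\Traces").glob("*.etl") and call analyse_trace on each file. The folder name here is illustrative.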

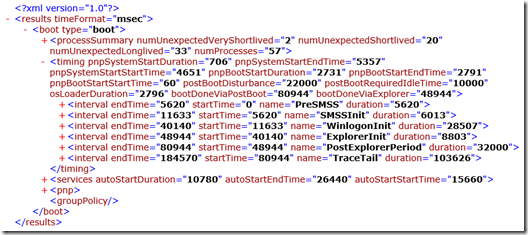

If I open the Summary.xml file with Internet Explorer (or another favoured XML viewer), a great deal of data is displayed. Again, keeping it simple means collapsing everything that isn't needed and displaying only the data relevant to system boot and logon times. At its simplest, my data looks like this -

So what does all of this mean?

Right at the top we see timeFormat="msec", which tells us that all time values are in milliseconds.

There are six intervals: five represent boot phases (PreSMSS, SMSSInit, WinlogonInit, ExplorerInit, PostExplorerPeriod), and TraceTail represents the remaining time in the trace. Boot phases are periods of time in which specific boot/logon activity takes place. I'll discuss these in much more detail in future blog posts.
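You can also pull the phase durations out of Summary.xml in code instead of collapsing nodes by hand. The Python sketch below assumes each phase appears as an interval element carrying name and duration attributes. I haven't reproduced the full schema here, so verify those element and attribute names against your own Summary.xml before relying on this.

```python
import xml.etree.ElementTree as ET
from typing import Union

# Boot phases named in the post, in order; TraceTail is the rest of the trace.
PHASES = ("PreSMSS", "SMSSInit", "WinlogonInit", "ExplorerInit",
          "PostExplorerPeriod", "TraceTail")


def read_intervals(xml_data: Union[str, bytes]) -> dict[str, float]:
    """Map each <interval name=...> element to its duration attribute.

    Element and attribute names are assumptions; values are msec when
    timeFormat="msec".
    """
    root = ET.fromstring(xml_data)
    # Use the first timeFormat attribute found anywhere in the document.
    fmt = next((e.get("timeFormat") for e in root.iter() if "timeFormat" in e.attrib), None)
    if fmt is not None and fmt != "msec":
        raise ValueError(f"unexpected timeFormat {fmt!r}")
    return {
        e.get("name"): float(e.get("duration"))
        for e in root.iter("interval")
        if e.get("name") is not None and e.get("duration") is not None
    }


def print_phases(intervals: dict[str, float]) -> None:
    """Print the known phases in boot order, converted to seconds."""
    for name in PHASES:
        if name in intervals:
            print(f"{name:<20}{intervals[name] / 1000:>10.3f} s")
```

Passing the raw bytes (for example Path("Summary.xml").read_bytes()) lets the XML parser honour whatever encoding the file declares.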

bootDoneViaExplorer is the time since boot start at which the Windows Desktop (Explorer) launch was complete (48.944 seconds in this example).

postBootRequiredIdleTime is the required amount of accumulated idle time (CPU and Disk 80% idle) before the system is considered ready for use. This is always 10 seconds.

bootDoneViaPostBoot is the time at which 10 seconds of idle time have accumulated. In practical terms, subtract 10 seconds from this number and treat the result as the time at which the system was ready for use (80.944 - 10 = 70.944 seconds in this example). Perform the same subtraction on the PostExplorerPeriod boot phase. A bootDoneViaPostBoot value of "-1" means that the system did not reach an idle state by the time the trace completed.
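The "subtract the idle time, unless the value is -1" rule is easy to get wrong when you process many summaries by hand. Here is a small Python sketch of it. It assumes the three timing values appear as attributes on a single element of Summary.xml, and it deliberately doesn't check that element's tag, since I'm not reproducing the schema here.

```python
import xml.etree.ElementTree as ET
from typing import Optional, Union

TIMING_ATTRS = ("bootDoneViaExplorer", "postBootRequiredIdleTime", "bootDoneViaPostBoot")


def read_timing(xml_data: Union[str, bytes]) -> dict[str, float]:
    """Return the three timing values (msec) from an xperf boot summary.

    Assumes they are attributes of a single element; its tag is not checked.
    """
    for elem in ET.fromstring(xml_data).iter():
        if all(name in elem.attrib for name in TIMING_ATTRS):
            return {name: float(elem.get(name)) for name in TIMING_ATTRS}
    raise ValueError("boot timing attributes not found")


def subtract_idle(value_ms: float, idle_ms: float) -> Optional[float]:
    """Remove the required idle time; None if the value is -1 (never idle)."""
    if value_ms == -1:
        return None
    return value_ms - idle_ms
```

With the example values above, subtract_idle(t["bootDoneViaPostBoot"], t["postBootRequiredIdleTime"]) returns 70944.0, which is 70.944 seconds. You can apply the same helper to the PostExplorerPeriod duration.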

For the purposes of benchmarking the system, I have everything I need. I can record these numbers and observe how they fluctuate as changes are made to the system image.

Benchmarking

The benchmarking approach I recommend is a simple iterative process. Start with a clean installation of Windows on your chosen hardware and capture a timing trace. This gives you the best possible baseline for that system.

Add a component and benchmark the system again. In this way you'll gain insight into how much time that component costs you.
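Recording one ready-for-use time per iteration is enough to turn this process into a per-component cost. The minimal Python sketch below assumes you keep (label, ready time in msec) pairs in the order components were added, with the clean install first. The labels and numbers are hypothetical, for illustration only.

```python
from collections.abc import Sequence


def component_costs(runs: Sequence[tuple[str, float]]) -> list[tuple[str, float]]:
    """Incremental cost of each step versus the run before it.

    runs holds (label, ready_ms) in the order components were added;
    the first entry is the clean-install baseline.
    """
    return [
        (label, current - previous)
        for (_, previous), (label, current) in zip(runs, runs[1:])
    ]


# Hypothetical figures, for illustration only.
history = [
    ("Clean install", 30000.0),
    ("Office suite", 34500.0),
    ("Antivirus", 42000.0),
]
for label, cost in component_costs(history):
    print(f"{label}: {cost / 1000:+.1f} s")
```

A negative delta is printed with a minus sign, which is a useful flag that something other than the new component changed between runs.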

The diagram above makes reference to optimizing ReadyBoot. For now, just be aware that you’ll need to do this with systems using older rotational hard drives and not with systems using solid state drives (SSDs). I’ll dedicate the entirety of my next blog post to this topic.

Another way to look at it is to benchmark each significant layer of the user experience. This approach may look something like this -

While taking this layered approach, it's important to apply components that have a large overall impact last. For example, antivirus software not only affects CPU and disk directly but, because it may scan binaries as other processes load them, can also slow those other components down. Install it after all of your other components.

Disk encryption software is another example of a component that may have a large overall impact.

Conclusion

By following this iterative process, you’ll be able to understand the time each design decision costs you.

Consider the following daily cost:

Boot and logon time (in minutes) x Average Reboots Per Day x Average Employee Cost Per Minute x Number of Employees

This number might lead you to re-evaluate design decisions in your client image.
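The formula above translates directly into code. In this Python sketch, the function name and all input figures are hypothetical. It shows what 30 seconds saved per boot is worth for 10,000 employees who reboot once a day, at an assumed 1.00 per employee-minute in whatever currency you use.

```python
def daily_boot_cost(minutes: float, reboots_per_day: float,
                    cost_per_minute: float, employees: int) -> float:
    """Time (min) x reboots/day x cost per minute x employees, per day."""
    return minutes * reboots_per_day * cost_per_minute * employees


# Hypothetical inputs: 30 seconds saved, one reboot a day, 10,000 employees.
saving = daily_boot_cost(0.5, 1, 1.00, 10_000)
print(f"Daily saving: {saving:,.2f}")
```

Multiplying by the number of working days gives an annual figure, which is often the more persuasive number in a design review.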

Next Up
