Containers in Enterprise, Part 2: DevOps

Now that we've looked at container basics and how they help solve some of the bottlenecks in the enterprise, let's change gears and move on to DevOps.

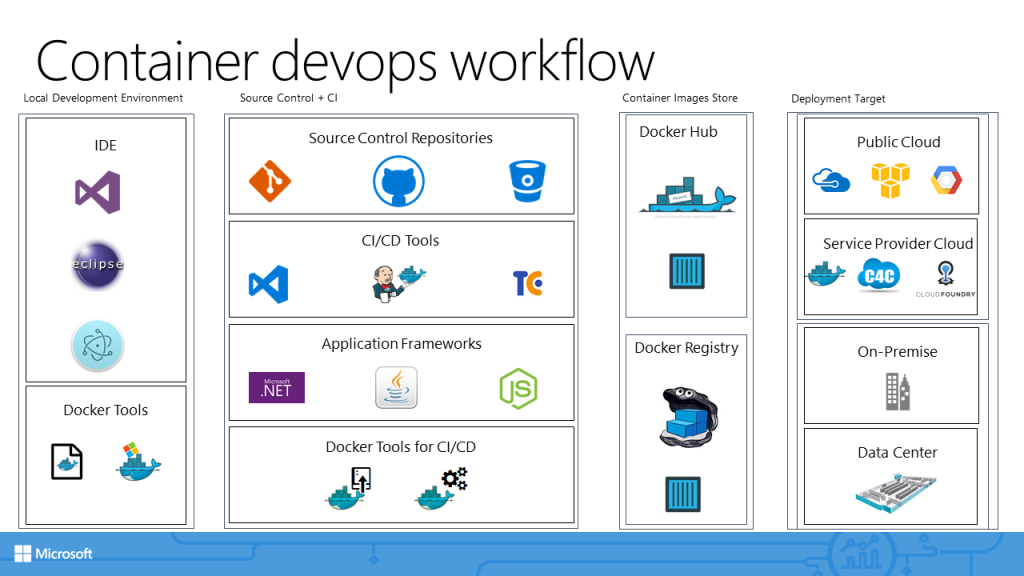

During local development, you do many things manually (docker build and run commands). In production scenarios, though, you'd want to automate most of them. This is where DevOps for containers helps. Here is what a typical container DevOps pipeline looks like.

It starts with local development. You can use any of the common IDEs, coupled with Docker tools for your local development environment (Docker for Windows in our case). Locally, a Dockerfile is all that is required.

From this point onward, source control and CI systems take charge. A developer can check in code to their favorite source control repository. All major CI/CD tools (TeamCity, Jenkins, VSTS, etc.) support connecting to these repositories. CI/CD tools build and compile the application against an application development platform such as .NET, Java or Node.js. Docker tools for CI/CD then work on top of the compiled application. We'll see how to use them soon.

Once the Docker tools for CI/CD have played their part, the flow moves to Docker Hub or a Docker registry. Both act as container image stores. However, Docker Hub is an online registry managed by Docker. If you would rather not have Docker in charge of your enterprise container images, you can set up your own on-premises registry used only by your enterprise.

With the container image in place, you can deploy to any target. Docker Hub is a good choice for any public cloud platform, while a private Docker registry is a good choice for deployments inside your enterprise or private data center.
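Whether an image lives on Docker Hub or in your own registry is encoded in the image name itself. The Python sketch below illustrates the naming convention the Docker CLI follows: if the first path component contains a `.` or `:` or is `localhost`, it is treated as a registry host; otherwise the image comes from Docker Hub (`docker.io`), single-name images map to the official `library/` namespace, and a missing tag defaults to `latest`. It is a simplified illustration (no digest handling or validation), and the registry host `registry.contoso.local:5000` and the repository names used with it are hypothetical.

```python
from typing import NamedTuple

DOCKER_HUB = "docker.io"


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference into registry, repository and tag (simplified)."""
    first, sep, rest = ref.partition("/")
    # The first component is a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = DOCKER_HUB, ref
    # The tag is whatever follows the last ':' (if that ':' is after the last '/').
    name, colon, tag = remainder.rpartition(":")
    if not colon or "/" in tag:
        name, tag = remainder, "latest"
    # Single-name images on Docker Hub are official images under "library/".
    if registry == DOCKER_HUB and "/" not in name:
        name = "library/" + name
    return ImageRef(registry, name, tag)


if __name__ == "__main__":
    print(parse_image_ref("myuser/webapp:42"))                         # Docker Hub
    print(parse_image_ref("registry.contoso.local:5000/team/app:42"))  # private registry
```

Pushing the same image to a private registry is therefore just a matter of tagging it with the registry host as the first path component.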

Let's see this in action!

I am going to use VSTS in this example. However, the same results can be obtained with your favorite CI/CD system, such as TeamCity or Jenkins.

First things first...

I have set up the following prerequisites.

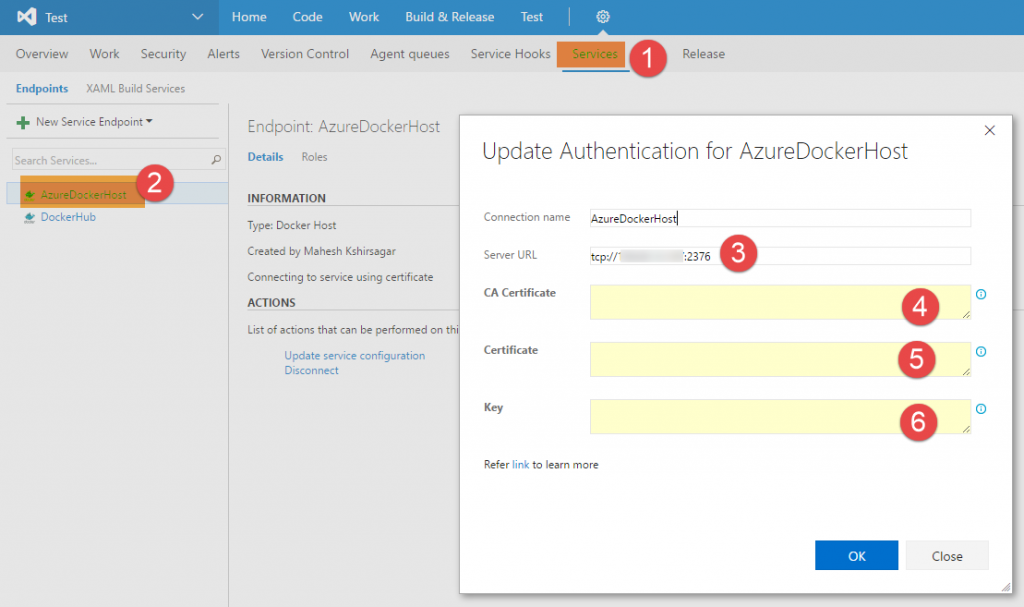

Docker Host in Azure: In the earlier example, I was running all Docker commands locally. With CI/CD, however, I need a separate host to run Docker commands. Think of it as a build server in the cloud. I provisioned the Docker host with the docker-machine utility, which creates and configures machines with Docker tools installed, together with the Azure driver for docker-machine. Combining docker-machine with the Azure driver gives me a Docker host in Azure. At this stage, the Azure driver can only create Linux hosts, with Windows support on the way. I've also installed a VSTS build agent on this host so that VSTS can communicate with it. You configure this host as a service endpoint in VSTS as shown below.

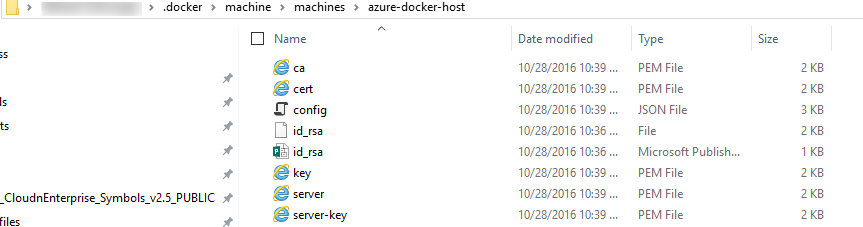

The various certificate entries you see above can be copied from the folder that gets created at `C:\Users\<your-user-id>\.docker\machine\machines\<machine-name-as-in-docker-machine-command>` after you run the docker-machine command.

Just open the ca, cert and key PEM files in a text editor (such as Notepad++), copy their contents and paste them into the corresponding boxes in VSTS.
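Both steps described above (provisioning the host with docker-machine and collecting its PEM files) can be sketched in Python. The sketch only builds the docker-machine argument list rather than running it; `--driver azure` and `--azure-subscription-id` are docker-machine options, while the machine name and subscription ID are placeholders, and other Azure driver settings are left at their defaults. It assumes docker-machine's default storage folder under your home directory (on Windows, `Path.home()` resolves to `C:\Users\<your-user-id>`) and the default file names `ca.pem`, `cert.pem` and `key.pem`.

```python
import shlex
from pathlib import Path
from typing import Dict, List, Optional

# Default TLS file names docker-machine writes for each machine.
PEM_FILES = ("ca.pem", "cert.pem", "key.pem")


def docker_machine_create_cmd(machine_name: str, subscription_id: str) -> List[str]:
    """Build (but do not run) the command that provisions a Docker host in Azure."""
    return [
        "docker-machine", "create",
        "--driver", "azure",  # docker-machine's Azure driver
        "--azure-subscription-id", subscription_id,
        machine_name,
    ]


def machine_cert_dir(machine_name: str, home: Optional[Path] = None) -> Path:
    """Folder where docker-machine stores a machine's certificates."""
    base = home if home is not None else Path.home()  # C:\Users\<user> on Windows
    return base / ".docker" / "machine" / "machines" / machine_name


def read_machine_certs(machine_name: str, home: Optional[Path] = None) -> Dict[str, str]:
    """Return the contents of ca.pem, cert.pem and key.pem, keyed by file name."""
    folder = machine_cert_dir(machine_name, home)
    return {name: (folder / name).read_text() for name in PEM_FILES}


if __name__ == "__main__":
    # Placeholder values; run the printed command (or pass the list to
    # subprocess.run) on a machine that has docker-machine installed.
    cmd = docker_machine_create_cmd("vsts-docker-host", "<subscription-id>")
    print(shlex.join(cmd))
```

Once the host exists, `read_machine_certs("vsts-docker-host")` returns the three texts you would otherwise copy by hand into the VSTS endpoint.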

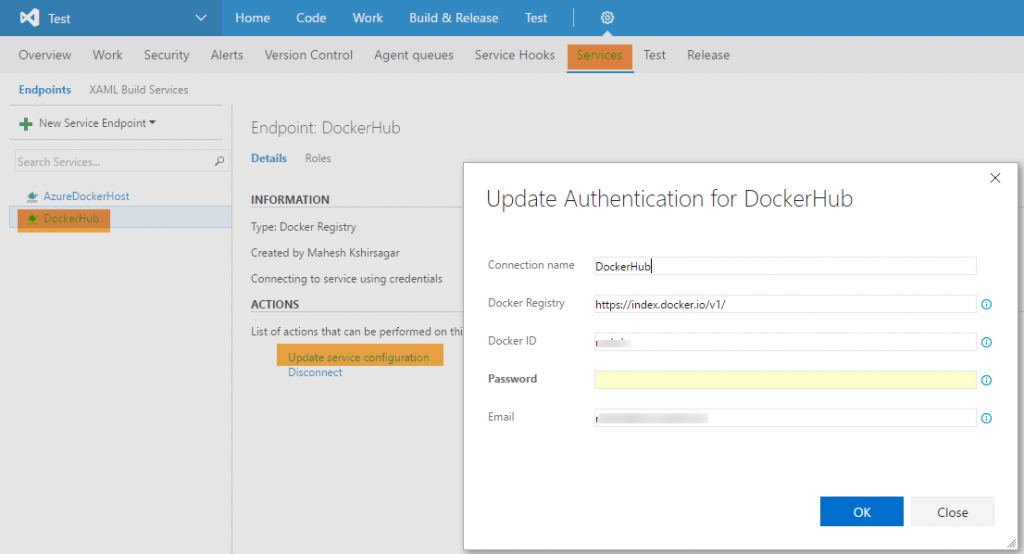

Docker Hub Account: Docker Hub is an image store. Think of it as an app store for your enterprise. With a personal account, you can store up to 5 images. Obviously, an enterprise should have an enterprise account, which costs some money :-). The configuration inside VSTS looks something like this:

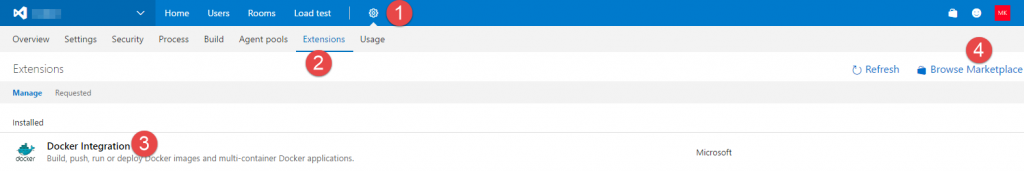

Docker Integration for VSTS: This is the Docker extension for VSTS. You can install it from the VSTS Marketplace.

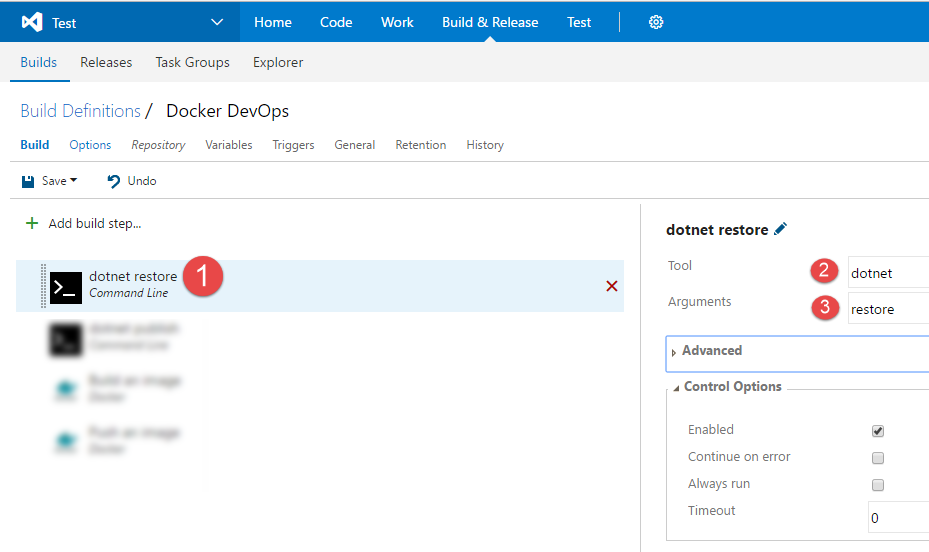

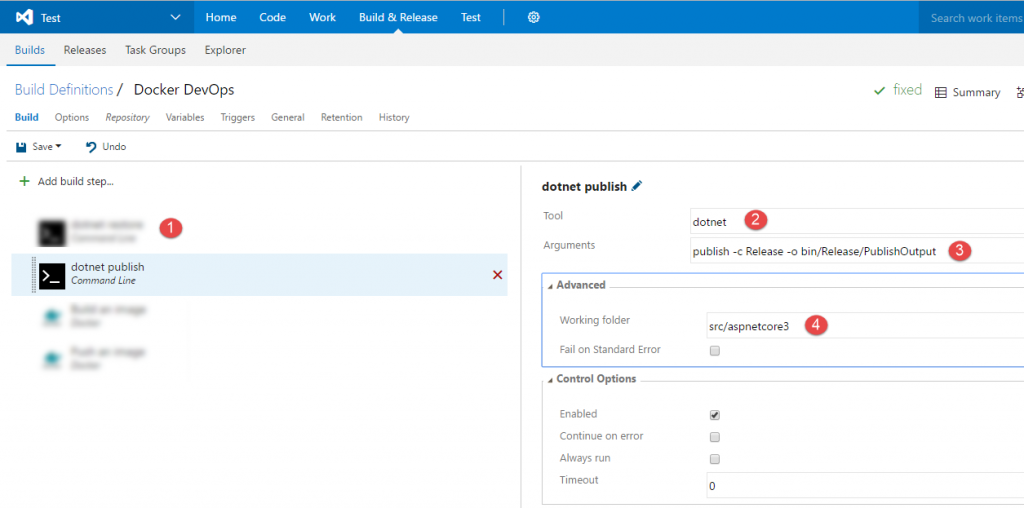

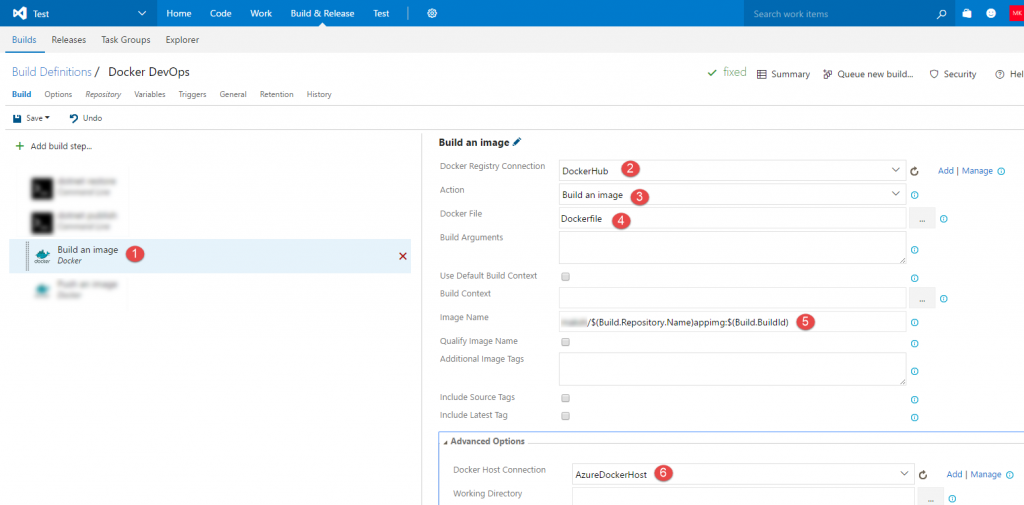

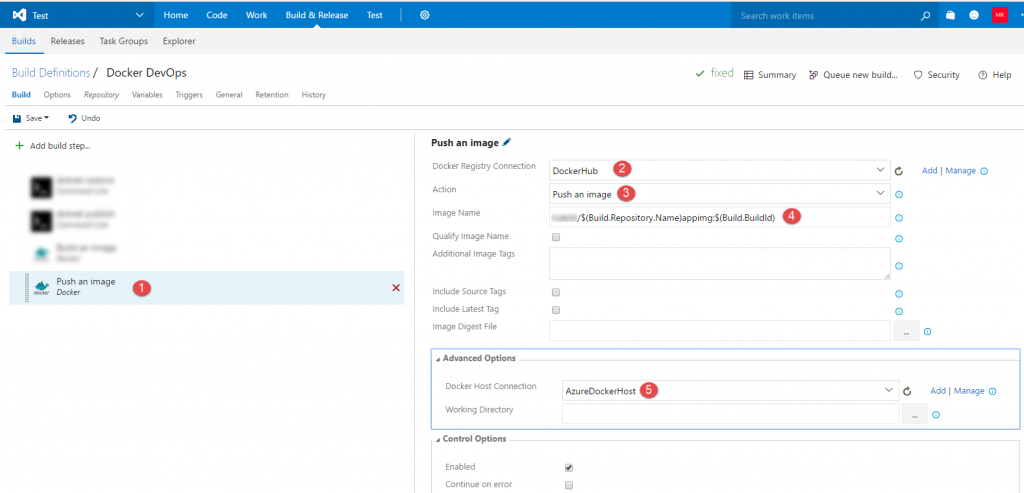

With these prerequisites in place, you can set up the build definition as below.

In the 1st step, we run the dotnet restore command. This command brings down all the NuGet packages the application uses.

In the 2nd build step, we run the dotnet publish command. This command compiles the application and places the binaries at the location specified with the -o switch.

In the 3rd step, we run the docker build command. This step is enabled by the Docker Integration extension we installed as part of the prerequisites, and it runs on the Docker host in Azure that we provisioned earlier.

Finally, in the 4th step, we push the image built in the previous step to Docker Hub.

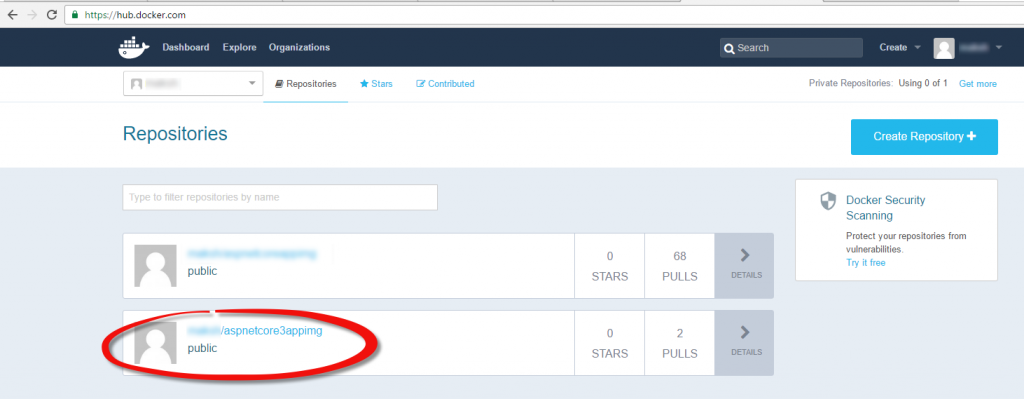
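The four build steps above boil down to four commands. The Python sketch below lists them in order as argument lists, roughly what the VSTS tasks run for you. It is an illustration under assumptions: the image name `myuser/webapp:42` and the `out` publish folder are hypothetical, the Dockerfile is assumed to sit in the working directory and copy the published output into the image, and `docker push` assumes the agent is already logged in to Docker Hub (assumed here to be handled by the Docker Hub connection configured earlier).

```python
from typing import List


def build_pipeline_commands(image: str, publish_dir: str = "out") -> List[List[str]]:
    """Return the four build steps, in order, as argument lists.

    `image` is the Docker Hub reference to build and push, e.g. "user/repo:tag".
    """
    return [
        # 1st step: bring down the NuGet packages the application uses.
        ["dotnet", "restore"],
        # 2nd step: compile and place the binaries in publish_dir (-o switch).
        ["dotnet", "publish", "-o", publish_dir],
        # 3rd step: build the image; assumes a Dockerfile in the current
        # directory that copies publish_dir into the image.
        ["docker", "build", "-t", image, "."],
        # 4th step: upload the image; assumes a prior login to Docker Hub.
        ["docker", "push", image],
    ]


if __name__ == "__main__":
    for step in build_pipeline_commands("myuser/webapp:42"):
        print(" ".join(step))
```

Keeping the steps as plain argument lists makes it easy to replay the same sequence on another CI system such as TeamCity or Jenkins.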

Now trigger a build, or manually queue one from VSTS. It should run all the steps to successful completion. At the end, you should see the container image appear in your Docker Hub account as shown below.

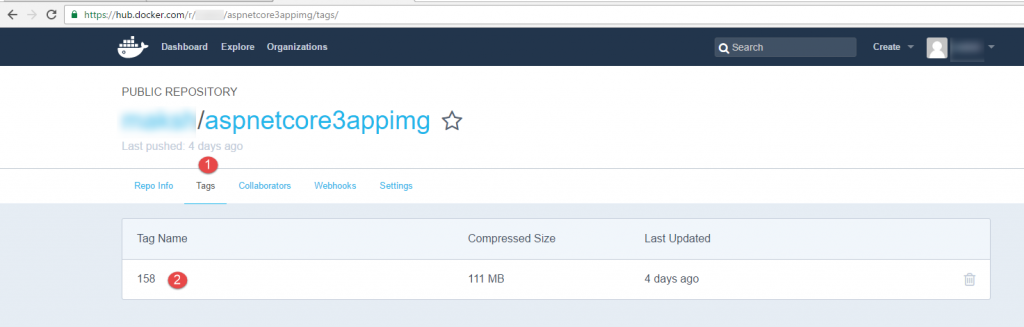

You can get more details (e.g., the tag to use with the pull command later on) by clicking it.

Now that we have an image in Docker Hub, we can deploy it to any Linux/Unix machine that runs Docker.

So I spun up a Linux machine in Azure. Along with port 22, which is open by default for Linux machines, I've also opened port 80 for HTTP traffic.

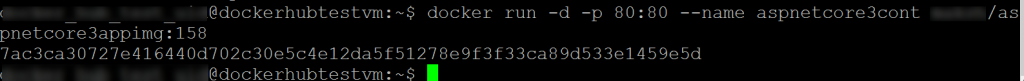

I SSH into this machine and run the following Docker command to pull the container image from Docker Hub.

I am running a docker pull command, passing the name of the container image and the tag I want to pull. With this information, the pull command brings that image down from Docker Hub and stores it on my Linux machine.

Next, I create and run a container from the newly downloaded image.

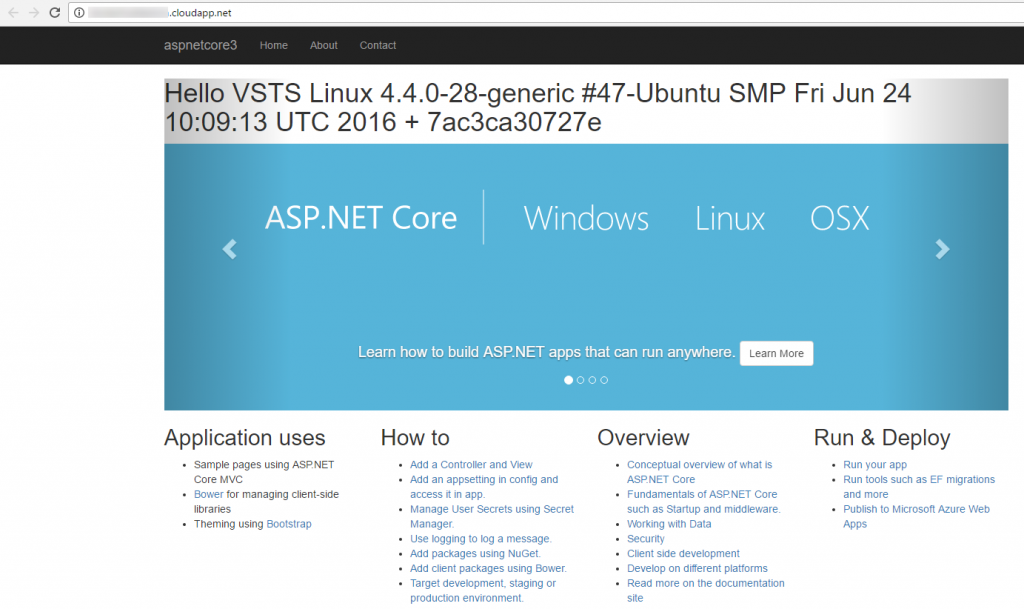
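The pull-and-run sequence described above can be sketched as follows; the exact flags used in the screenshot may differ. The sketch assumes the application listens on port 80 inside the container and maps it to port 80 on the VM, matching the port opened earlier. The image name, tag and container name (`webapp`) are hypothetical. Each list can be passed to `subprocess.run(cmd, check=True)` on the VM, or typed as the equivalent shell command over SSH.

```python
from typing import List


def deploy_commands(image: str, tag: str, host_port: int = 80,
                    container_port: int = 80, name: str = "webapp") -> List[List[str]]:
    """Pull an image from Docker Hub, then start a detached container from it."""
    ref = f"{image}:{tag}"
    return [
        ["docker", "pull", ref],
        # -d runs the container in the background; -p host:container
        # publishes the container port on the VM so HTTP traffic reaches it.
        ["docker", "run", "-d", "--name", name,
         "-p", f"{host_port}:{container_port}", ref],
    ]


if __name__ == "__main__":
    for cmd in deploy_commands("myuser/webapp", "42"):
        print(" ".join(cmd))
```

If the application listens on a different port inside the container, only `container_port` needs to change; the VM keeps serving on port 80.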

I can now browse to the Linux VM endpoint (DNS name) and see the application running on the Linux server.

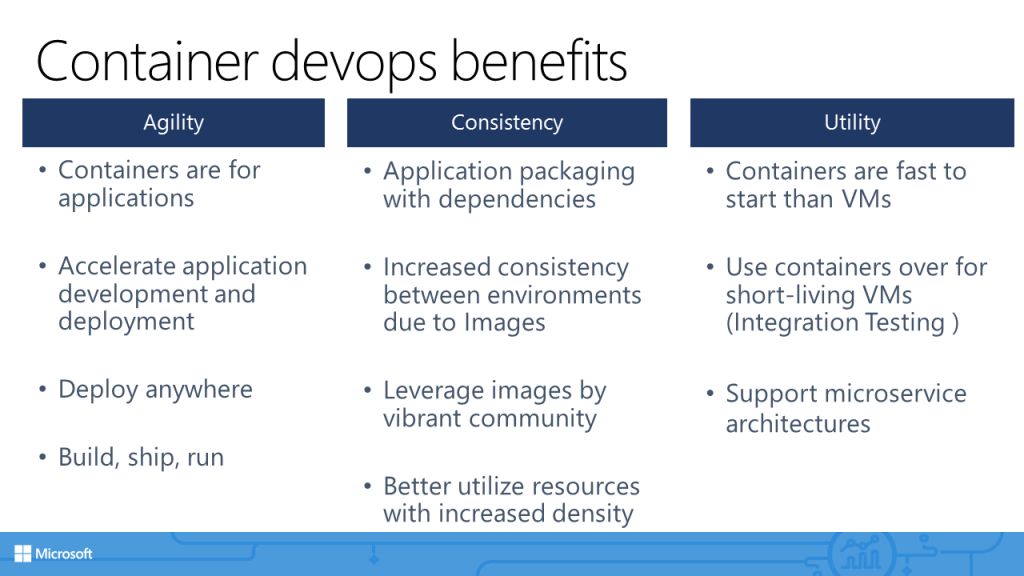

Now that we have a DevOps workflow set up, let's discuss the benefits this approach brings.

The 1st set of benefits is agility. We already know that DevOps practices accelerate application development and deployment. Containers accelerate this model further by cutting down VM provisioning and start-up times. Because I can now pull a container image from Docker Hub, I can potentially deploy to any compatible target anywhere. This model lets you build, ship and run anywhere, which is Docker's motto.

The 2nd set of benefits is consistency. Since application dependencies are packaged with the container image, the image brings its dependencies along as it moves from environment to environment. There are no more cases of missing frameworks as in the past. All major software vendors (including Microsoft) have published images of their software on Docker Hub, and you can use this rich ecosystem to easily build applications that meet your needs. Also, many containers can run on a single host machine, which increases container density and, in turn, the host's resource utilization.

The 3rd set of benefits is utility. You must have observed that containers start very fast (a matter of 2-3 seconds!) compared to a typical virtual machine. This makes them good candidates to replace short-lived machines. A typical example is integration testing, which enterprises usually run at night. Before you can run these tests, you need to bring up a bunch of machines, provision them with prerequisites, wait for the tests to finish and tear the machines down afterwards. All of this takes time and costs money. Containers can replace these VMs and cut down both the time and the cost.

Another major, and perhaps the most demanded, benefit is enabling microservice-based application development. Instead of building software as one large monolith, you can split it into many smaller parts, or microservices. You can then deploy these parts as containers and version, update and service them independently. We'll take a look at how to do this in part 3.
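To make the nightly integration-testing scenario concrete, here is a minimal Python sketch of the bring-up, test and tear-down cycle using containers instead of VMs. The `try`/`finally` guarantees that every container started is removed even if the tests crash. The image names and the `itest-N` container names are hypothetical, readiness checks (waiting until a service actually accepts connections) are omitted, and `runner` is injectable so the flow can be exercised without a Docker daemon.

```python
import subprocess
from typing import Callable, List, Sequence

Runner = Callable[[List[str]], None]


def run_cmd(cmd: List[str]) -> None:
    """Default runner: execute a command and raise if it fails."""
    subprocess.run(cmd, check=True)


def with_test_containers(images: Sequence[str], run_tests: Callable[[], bool],
                         runner: Runner = run_cmd) -> bool:
    """Start one container per image, run the tests, and always tear down."""
    started: List[str] = []
    try:
        for i, image in enumerate(images):
            name = f"itest-{i}"  # hypothetical naming scheme
            runner(["docker", "run", "-d", "--name", name, image])
            started.append(name)
        return run_tests()
    finally:
        # Remove only the containers that were actually started.
        for name in started:
            runner(["docker", "rm", "-f", name])
```

Because the containers start in seconds, this cycle can run per test suite rather than once a night, without the provisioning cost of dedicated VMs.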