[Azure Service Fabric] Five steps to achieve Event aggregation and collection using EventFlow in Service Fabric

Monitoring and diagnostics are a critical part of application development, both for diagnosing issues in production and during development. They help you identify application issues and hardware issues, and they provide performance data that shows where there is scope for improvement. The workflow has three parts: 1) Event Generation, 2) Event Aggregation, and 3) Analysis.

1) Event Generation –> the creation and generation of events and logs. Logs can be infrastructure-level events (anything from the cluster) or application-level events (from the apps and services).

2) Event Aggregation –> generated events need to be collected and aggregated before they can be displayed.

3) Analysis –> the aggregated events are visualized in some format.

Once we decide on the log provider, the next phase is aggregation. In Service Fabric, event aggregation can be achieved using (a) Azure Diagnostics logs (an agent installed on the VMs) or (b) EventFlow (in-process log collection).

Agent-based log collection is a good option if the event sources and destinations do not change and map one to one. Any change requires a cluster-level update, which is sometimes tedious and time-consuming. With this approach, the logs are first stored in storage and then passed on to the display phase.

With EventFlow, the logs are collected in process and sent directly to a remote service for visualization. Changing the data destination doesn’t require any cluster-level change, unlike the agent approach: we can change the destination at any time in the eventFlowConfig.json file. Depending on criticality, we can use both approaches together. However, Azure Diagnostics logs are recommended mostly for infrastructure-level log collection, whereas EventFlow is suggested for application-level logs.

The last step is Event Analysis, where we analyze and visualize the incoming data. Azure Service Fabric has good integration support for OMS and Application Insights.

In this article, let us see how to use EventFlow in a Service Fabric stateful application in five steps.

Step1:- Create a new Service Fabric project and select the “Stateful Service” template. Change the .NET Framework version of the project to 4.6 or above.

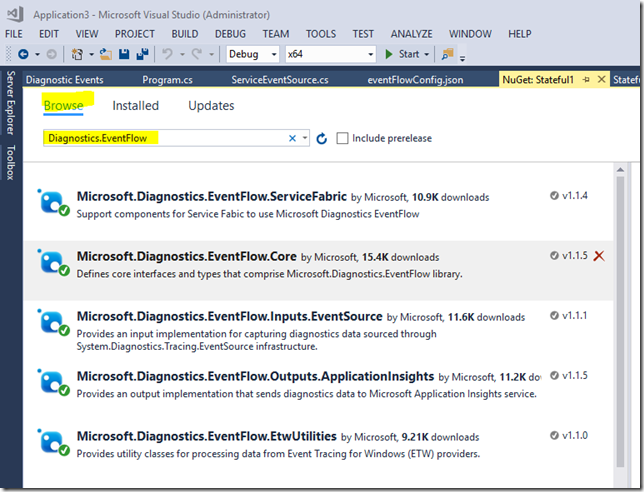

Step2:- Right-click the service project and open the NuGet package manager. Search for "Diagnostics.EventFlow" and add the following packages.

Microsoft.Diagnostics.EventFlow.ServiceFabric

Microsoft.Diagnostics.EventFlow.Outputs.ApplicationInsights

Microsoft.Diagnostics.EventFlow.Inputs.EventSource

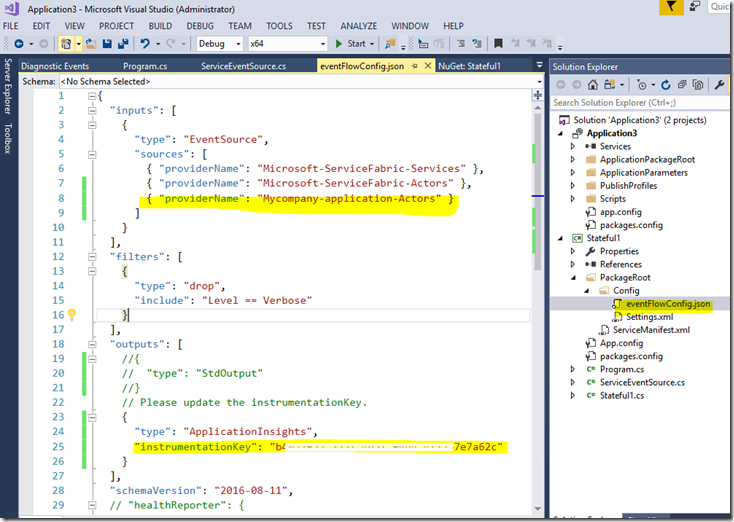

Step3:- Update the eventFlowConfig.json file as below. The EventFlow pipeline reads this JSON file to decide which events to capture and where to send them. Modify it to capture the data you need and to point at the desired destination.

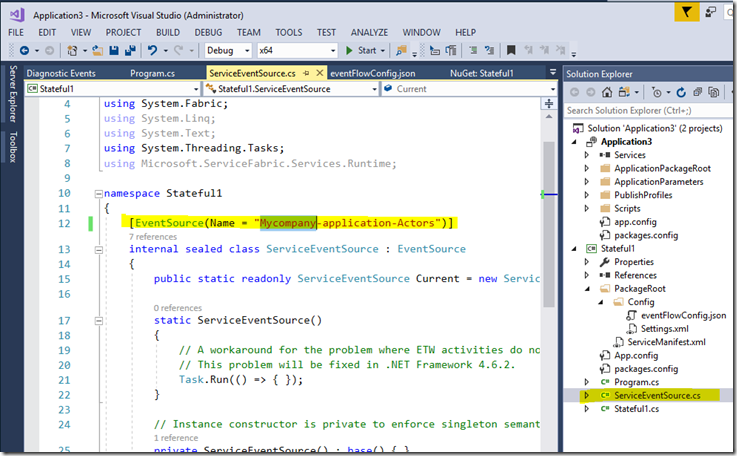
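As a rough sketch of what such a configuration can look like, the fragment below wires one EventSource input to an Application Insights output. The provider name `MyCompany-MyApplication-MyStatefulService` and the all-zero instrumentation key are placeholders, not values from this article; substitute the name of your own event source and the key of your own Application Insights resource. The `drop` filter is optional and is included only to illustrate filtering.

```json
{
  "inputs": [
    {
      "type": "EventSource",
      "sources": [
        { "providerName": "MyCompany-MyApplication-MyStatefulService" }
      ]
    }
  ],
  "filters": [
    {
      "type": "drop",
      "include": "Level == Verbose"
    }
  ],
  "outputs": [
    {
      "type": "ApplicationInsights",
      "instrumentationKey": "00000000-0000-0000-0000-000000000000"
    }
  ],
  "schemaVersion": "2016-08-11"
}
```

Pointing the pipeline at a different destination then comes down to editing the `outputs` section of this file.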

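Step4:- Update the “ServiceEventSource.cs” class. We need the name of the service’s ServiceEventSource, which is the value of the Name property of the EventSource attribute set on this class. This name must match the provider name listed in eventFlowConfig.json.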

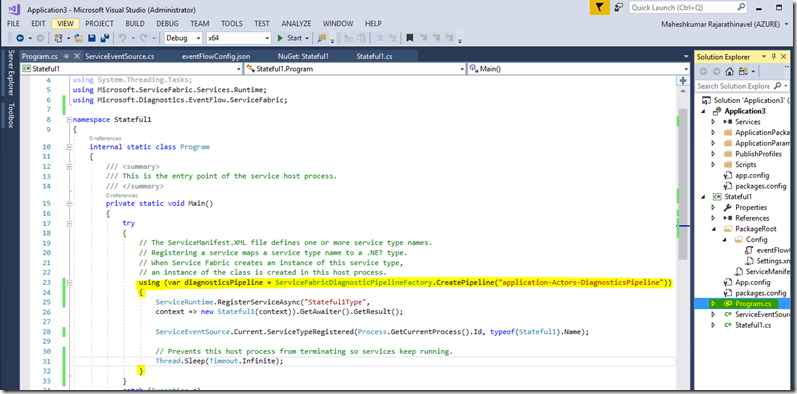

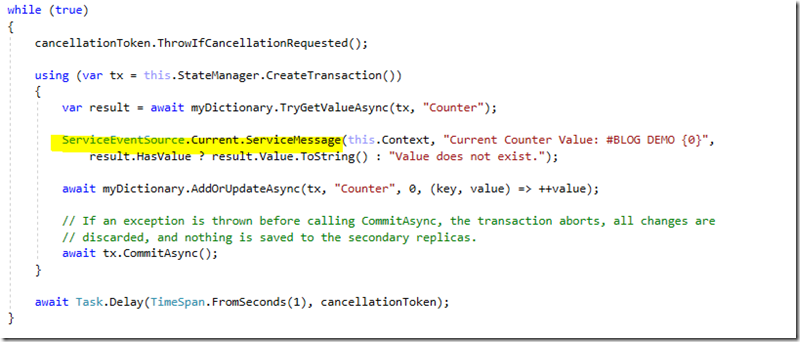
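For illustration, a trimmed-down event source might look like the sketch below. It assumes the hypothetical provider name `MyCompany-MyApplication-MyStatefulService`; the class generated by the Visual Studio template contains more events than shown here, and only the `Name` value and a single `Message` event are kept to show the part that EventFlow cares about.

```csharp
using System.Diagnostics.Tracing;

// The Name value is the provider name that EventFlow listens for.
// "MyCompany-MyApplication-MyStatefulService" is a placeholder.
[EventSource(Name = "MyCompany-MyApplication-MyStatefulService")]
internal sealed class ServiceEventSource : EventSource
{
    // Single shared instance used by the service code.
    public static readonly ServiceEventSource Current = new ServiceEventSource();

    private const int MessageEventId = 1;

    private ServiceEventSource() : base() { }

    // Convenience overload: formats the message, then raises the real event.
    [NonEvent]
    public void Message(string message, params object[] args)
    {
        if (this.IsEnabled())
        {
            Message(string.Format(message, args));
        }
    }

    // The event that is actually written to the EventSource infrastructure.
    [Event(MessageEventId, Level = EventLevel.Informational, Message = "{0}")]
    public void Message(string message)
    {
        if (this.IsEnabled())
        {
            WriteEvent(MessageEventId, message);
        }
    }
}
```

Service code can then call `ServiceEventSource.Current.Message("Working-{0}", iteration)` to emit an event, where `iteration` stands for any value you want to log.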

Step5:- Instantiate the EventFlow pipeline in the service startup code and start writing service messages.

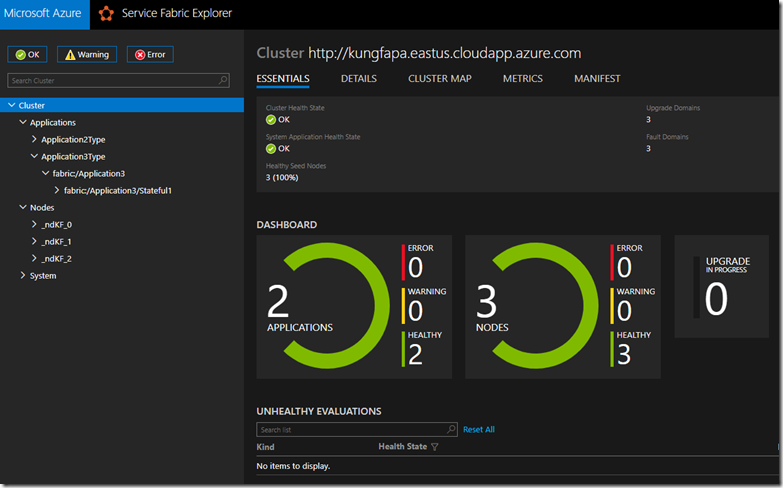
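A minimal sketch of the startup code is shown here, assuming the pipeline is created in `Program.Main` before the service type is registered. `MyStatefulService`, `MyStatefulServiceType` and the health entity name passed to `CreatePipeline` are placeholder names, and `ServiceEventSource` is assumed to be the template-generated class with a `Message` method; adjust all of them to your own project.

```csharp
using System;
using System.Threading;
using Microsoft.Diagnostics.EventFlow.ServiceFabric;
using Microsoft.ServiceFabric.Services.Runtime;

internal static class Program
{
    private static void Main()
    {
        try
        {
            // Create the pipeline from eventFlowConfig.json. The string argument is the
            // name under which the pipeline reports its own health (placeholder value).
            using (var diagnosticsPipeline = ServiceFabricDiagnosticPipelineFactory.CreatePipeline(
                "MyApplication-MyStatefulService-DiagnosticsPipeline"))
            {
                // Register the service type (placeholder type name and class).
                ServiceRuntime.RegisterServiceAsync(
                    "MyStatefulServiceType",
                    context => new MyStatefulService(context)).GetAwaiter().GetResult();

                // From here on, events written through ServiceEventSource are picked up
                // by the pipeline and forwarded to the configured outputs.
                ServiceEventSource.Current.Message("Service type registered.");

                // Keep the host process (and therefore the pipeline) alive.
                Thread.Sleep(Timeout.Infinite);
            }
        }
        catch (Exception e)
        {
            ServiceEventSource.Current.Message("Service host initialization failed: {0}", e);
            throw;
        }
    }
}
```

Keeping the pipeline inside a `using` block that spans the lifetime of the process is a deliberate choice: the pipeline is disposed only when the host shuts down.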

Deploy the application and confirm that everything is green, with no deployment or dependency issues.

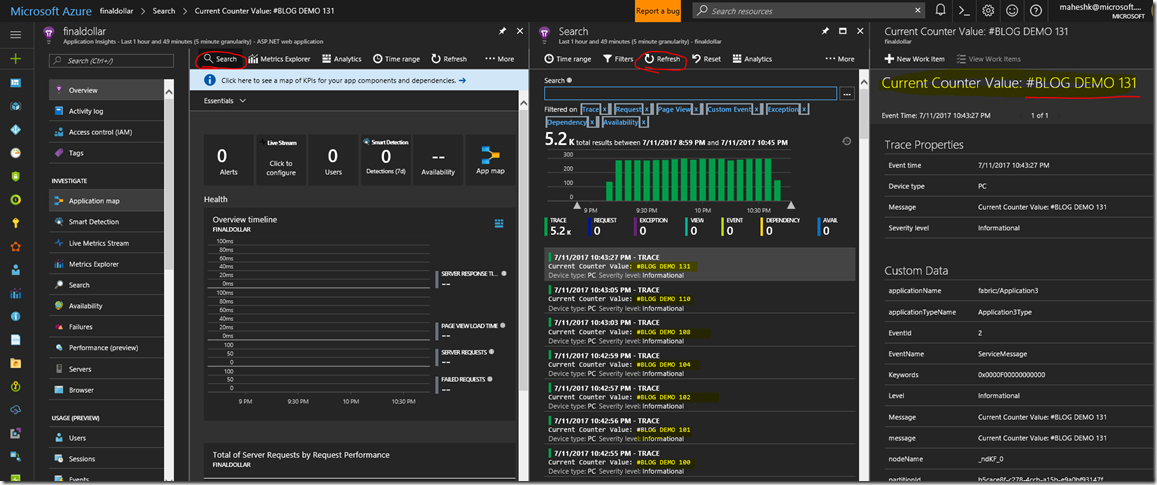

To verify the trace logs, log in to portal.azure.com > your Application Insights resource > Search, and refresh (allow a few minutes for the data to start flowing).
