Fragmentation – the database performance killer

Fragmentation is something that we don’t usually pay enough attention but it causes a very large percents of cases with performance degradation. Sometimes it plays a main destructive role for the overall performance of our database.

Disk fragmentation

There are different kinds of fragmentation. One type of fragmentation is a disk fragmentation, where the disk the database files and log are placed is highly fragmented, causing chunks of db files to be separated on the disk, such causing IO operations for reading extents to increase and additional moving of the disk heads. This is especially true when the disk is not dedicated to the database files, the server is used as a file server and other files are written often to it.

The second scenario is when files of more than one database is placed on the db disk and databases have their default settings. The database default settings are performance killers, especially options for initial size and file growths. See bellow in the '”defaults” fragmentation.

How to deal with it

Use Windows Disk Tools to check disk fragmentation, the tool visualize how your files are fragmented 0n disk. If you realize that your disk is fragmented then you have to plan a downtime to defragment. You can use any tool, including windows out of the box defragmenter to deal with disk fragmentation. BUT first you have to:

- Stop SQL Server service

- Copy database files from the drive you intent to defragment (this is your file level backup in case your tool cause a damage in the database file)

- Run the defragment tool

- Start SQL Server

- Check your databases, best tool to use for checking is DBCC CHECKDB, because it scans all pages inside the database and if there is an physical error it will found it.

The “defaults” fragmentation

When you create a database the default size and default growths settings are 1MB, 10%growth. For TLogs they are …This means every time the database file needs to increase it creates a chunk of .. MB, which is an operation of allocating and initializing disk space on the next disk blocks available which is not necessary to be continuous. Chunks of this size are absolutely not enough for any database operation, especially with large data loads. The result is an IO disaster, one way – in executing an expensive operation of allocating disk space and initializing for database usage at a time of executing transactions, and another - creating a fragmented database file.

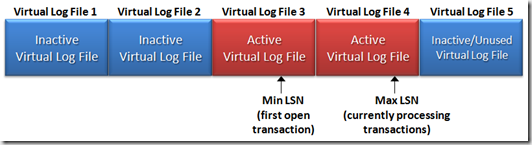

According to the TLog, the default settings for initial size and log growth create TLog fragmentation too. In which way it happens? TLog file contains chunks called Virtual Log Files (VLFs). The number and size of VLFs depends on Tlog file size and growth settings. When they are not set correctly or left as defaults a huge number of VLFs are created which means that the TLog file is fragmented, causing delays in saving pages during transactions, when backing up and truncating the log file, such causing an overall degradation of the workload performance. You can check the number of VLFs in you database log file executing:

DBCC LogInfo(‘yourdbname’)

The number of rows in the result set shows the number of VLFs. When the number is more than 80-100 rows the TLog file is fragmented.

What are the rules SQL Server uses to create VLFs?

<64MB chunk – 4 VLFs

>64MB and <1GB – 8 VLFs

>1GB – 16 VLFs

Let me give you an example:

According to this rule if your log file has default settings for growth (1MB initial size and 10% growth) then 10% increase of 1MB file will create a chunk of 102KB, which is bellow 64MB, thus a 4 VLFs will be created with 25KB size each, next growth will be 110KB, again creating 4 VLFs, with 26KB each, etc.. Imagine if the file needs to growth from 1MB to 200MB, what about 2GB? it will create a hundred of VLFs.

Another example, imagine a Log file which has to increase from 100MB to 200MB:

With default settings of 10% it would have to increase approx 10 times to reach 200MB, and thus will create a total of 40VLFs

If we change the setting and set 64MB growth, then it would have to increase 2 times, thus creating 16VLFs.

It is easy to test this scenario, actually this was part of my MS Days 2009 Top 10 Presentation demo:

- create a simple database and leave the default settings

- create a simple table that will grow quickly and will generate huge log file. To reach this fast use one of the field for the table as char(8000) with a symbol as a default. If you want to calculate rows per second inserted you should include in the table a filed of date/time type and default(getdate())

- backup the database to activate full recovery model (if you switch the db to full recovery it will not act as a full recovery model unless you do a full database backup)

- In new query window start a loop doing an insert to the simple table with default values.

- You can monitor Percent Log Used, Log Growth, Database Growth counters in PerfMon during the loop

- Stop the loop after a while (8-10min)

- Execute DBCC LogInfo(‘YourSimpleDBName’) and view the number of rows there

- If you want to calculate how many rows are inserted into the table per second then you can use my script

- Now you can execute Kimberly’s step 8 from 8 steps to better TLog troughput to reduce the Tlog fragmentation

- Now truncate the simple table and do the test again, compare the rows per second inserted :) Note that it is gained only from changing one default setting.

How to deal with it

There is one main recommendation here – don’t leave default settings for initial size and growth of your database and TLog

What are the best settings or are there any? Well, those who know me probably already know the answer: it depends :) And Yes, it depends on the workload, backup schedule, etc, but in general

For the database file do some rough capacity planning to estimate initial size including tables, data and indexes, once defined you cannot make the settings lower. You can change the settings at any time.

Define the growth based on your regular operations, take into account the maintenance – rebuilding indexes require large amount of database space (approx 1.5 times your table size). Leave your database with 20-30% more free space and larger that data in it. You can monitor when the database file performs growths (there is a counter in PerfMon) and schedule the growths with alter database during non-working hours. This will eliminate the expensive disk allocations during the transactions.

Don’s use percentage for growth settings, use fixed size instead

If you use SQL Server 2005/2008 Enterprise Edition then don’t forget the Instant File Initialization setting. This will allow you the SQL Server to optimize and do a faster initialization when database file growth is needed. (By the way there are signals that the option will be included in standard edition too, which will be very cool! )

Turn OFF Auto-Shrink and don’t use SHRINKDATABASE nor SHRINKFILE as part of your maintenance step, never! I will do a separate post why the shrink is evil, but please trust me at this point.

For the log file

If your Tlog reaches 100GB or it is between 100GB and 300B, start at 8GB, and increase with 8GB chunks – 16, 24, 32, 40.. You will get 16VLFs on every 8GB, which is a 512MB VLF, such the log will clear on every 512MB on normal operations.

It is not recommended to set the growth more than 8GB, because you will end up in another direction of very large VLFs in size and clearing the log will be a challenge. Remember that the Inactive portion of the log is cleared.

What to do if you have your Log fragmented already. There are a perfect instructions how to deal with fragmented Tlog files on Kimberly’s blog 8 steps to better TLog troughput , look at step 8.

The fragmentation inside the database – index fragmentation

Well, this a huge topic and I will just mention thinks in general here and do a separate post on Index fragmentation and optimizations.

Index fragmentation is something you should monitor regularly and I will provide you with some scripts to do it in my next post. This kind of internal database fragmentation is not reached because of wrong database settings but as a result of regular database activities – inserts, deletes and updates in some cases. So it’s normal to have your indexes fragmented at some time, the point is to catch the time when further fragmentation will cause a degradation of performance. Actually based on type and usage of you indexes their fragmentation could reflect not only queries, but data modifications also.

How to deal with it

Regular monitoring and idx maintenance is the key here. The indexes should be monitored for physical and logical fragmentation periodically. Could be once daily for some intensive index/table usage, and most often once a week. During the monitoring you should check percentages of logical and physical fragmentation for particular index and the general rule is if the idx fragmentation (both logical and physical) is more than 30% you should deal with it either with ALTER INDEX REORGANIZE (for logical) or ALTER INDEX REBUILD (for physical).

There is one very important point here, and it is analysis and regular optimization of the indexing structure of your database, which is

How much of your indexes are not used and which are they. Those indexes are very important to be removed, beside consuming database space, they consume resources during maintenance increasing your maintenance time. Probably most important is that non-used indexes slow down the performance of your data modifications.

Which are you missing indexes. These are indexes that are not presented in your database but could be useful to satisfy you queries. You should deal with this recommendations carefully, testing is required. Sometimes it is more important to remove unused index rather that to create additional index.

Analyzing the indexing infrastructure is an exercise that should be done once monthly. This will guarantee that your database is in a good level of optimization.

More on this see in my next post :)

Comments

- Anonymous

July 31, 2016

you can also take the help of this link to solve sql server database physical file fragmentation issue: http://sqlserver-qa.net/2016/08/01/solving-physical-file-fragmentation-issue/