Now Available: Access to Source Code & Demos of AI-Infused Apps

This post is authored by Tara Shankar Jana, Senior Technical Product Marketing Manager at Microsoft.

The success of enterprises in adopting AI to solve real-world problems hinges on bringing a comprehensive set of AI services, tools and infrastructure to every developer, so they can deliver AI-powered apps of the future that offer unique, differentiated and personalized experiences. In this blog post, we are sharing source code and other resources that demonstrate how easy it is for existing developers without deep AI expertise to start building such personalized data-driven app experiences of the future.

To start off, what are the key characteristics of such AI apps? We would expect them to be intelligent and get even smarter over time. We expect them to deliver new experiences, removing barriers between people and technology. And we want them to help us make sense of the huge amount of data that is all around us, to deliver those better experiences.

Let us take you through the journey of building such apps.

1. Infusing AI

Artificial Intelligence is anything that makes machines smart. Although machine learning is a technique for achieving that goal, what if, instead of creating custom ML models, you could directly start using pre-built ML in the form of abstracted libraries? Microsoft offers this in the form of Cognitive Services. Take a look at how you can infuse AI into real world apps with this cognitive services demo, available with source code on GitHub.

Key takeaways from this demo and code:

- Pre-built AI services in the cloud can address many of your AI needs: Cognitive Services are easy-to-use, customizable, pre-built services that address common tasks where AI may be useful, such as in vision, speech and NLP.

- Easy to use: Cognitive Services are easy to use and compose in your applications, as the above example clearly shows.

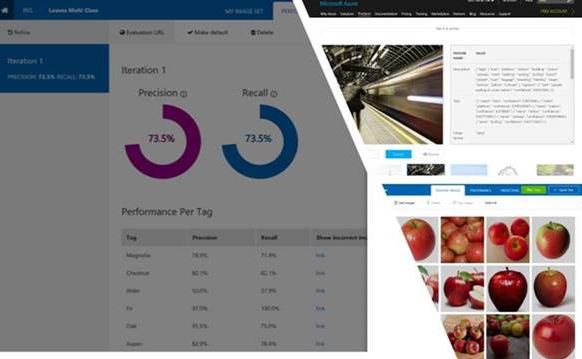

- Customizable: The Custom Vision Service, for example, lets you build a customized image classifier with ease.

In fact, one of the top requests from businesses was the ability to customize these APIs, for instance to recognize their own products. To this end, we released the Custom Vision Service in May 2017, enabling any developer to quickly infuse vision into their applications and easily build and deploy their own image classifiers. The workflow is super simple too: just upload some training data, train, then call your model via a REST endpoint. More information is available here.
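As a rough illustration of that last step, the sketch below builds (but does not send) an HTTP request that posts raw image bytes to a prediction endpoint, and picks the most likely tag out of a response. The endpoint URL and key are placeholders, not real values; copy the actual ones from your project's settings. The `Prediction-Key` header and the JSON response shape reflect our understanding of the prediction API and may differ between API versions, so treat them as assumptions.

```python
import json
import urllib.request

# Placeholders (assumptions): copy the real values from your project settings.
PREDICTION_URL = "https://<your-prediction-endpoint>/image"
PREDICTION_KEY = "<your-prediction-key>"


def build_prediction_request(image_bytes, url=PREDICTION_URL, key=PREDICTION_KEY):
    """Build a POST request carrying raw image bytes; nothing is sent here."""
    return urllib.request.Request(
        url,
        data=image_bytes,
        method="POST",
        headers={
            "Prediction-Key": key,
            "Content-Type": "application/octet-stream",
        },
    )


def top_prediction(response_json):
    """Return (tag, probability) for the most likely tag in a response.

    Assumes a body shaped like
    {"predictions": [{"tagName": ..., "probability": ...}, ...]};
    the field names may vary by API version.
    """
    predictions = json.loads(response_json)["predictions"]
    best = max(predictions, key=lambda p: p["probability"])
    return best["tagName"], best["probability"]
```

To actually call the service, you would pass the request to `urllib.request.urlopen` and hand the decoded response body to `top_prediction`.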

You may be wondering how to deploy and use the model created above in real applications. Using the model that was created using the Azure Custom Vision Service, we can create an application that applies that model to images in real-time on an iPhone. The application that you have downloaded already contains a copy of the fruit model, so you can get started right away. In this section, we will show how to convert a model you have downloaded from the Azure Custom Vision Service for use in your application. Start with this demo we have made available on GitHub.

Key takeaways from this demo and code are:

- Use the customizability of APIs such as CustomVision.ai: Build custom image classifiers with ease.

- Use Cognitive Services in your iOS apps: Cognitive Services are easy to include in your iOS apps using Xamarin. For instance, you can easily add Custom Vision models to your iPhone apps this way.

- Deployed models are super-fast: Deploying models to the local device removes the need for an internet connection, provides high accuracy and confidence, and enhances app performance and the user experience.

These examples demonstrate just how easy it is to infuse AI into any app. Although we could have easily called the REST API, we instead used CoreML locally. We hope you are inspired by these services and find interesting ways to incorporate them into your own applications.

2. New App Experiences

Let us now turn to how applications of the future can provide fundamentally new user experiences. Technology is fast moving to a place where computers are adapting to humans and our natural modes of interaction. One great example of such new app experiences is provided by the whole world of conversation-infused bots. Check out this bot demo and associated source code.

The key message for developers from this demo is:

- Microsoft cloud AI services make it super easy to create conversational AI-infused bots.

3. Data-Driven Apps

Apps of the future sit atop exploding mounds of data. Sometimes the "bigness" of this data is downright hard to comprehend. More data was created in the last two years than in the entire prior history of human beings on this planet. By 2020, we will be creating 1.7 megabytes of new information per second per human on the planet.
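To put that last figure in perspective, here is a quick back-of-the-envelope calculation that scales the quoted 1.7 megabytes per second up to a full day. The use of decimal gigabytes (1 GB = 1,000 MB) is our assumption.

```python
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400 seconds
MB_PER_SECOND_PER_PERSON = 1.7   # figure quoted in the text above

mb_per_day = MB_PER_SECOND_PER_PERSON * SECONDS_PER_DAY
gb_per_day = mb_per_day / 1000   # decimal gigabytes (assumption)

print(f"{mb_per_day:,.0f} MB, or about {gb_per_day:.0f} GB, per person per day")
```

That works out to roughly 147 GB of new information per person, every day.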

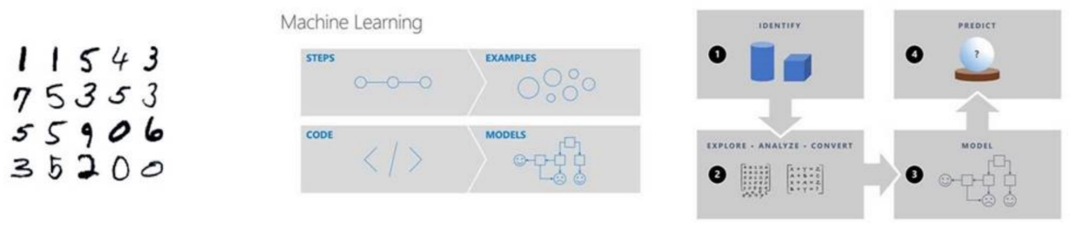

As developers, we need to extract as many insights as we can from all that data, so we can provide novel and amazing experiences for our users. This could be for tasks as varied as recommending a purchase, discovering a friend, eliminating spam from your inbox, or estimating the arrival time for your car. To achieve these things, however, you need the right tools for training models – tools that are easy to integrate with your applications. Let's take a peek at how we are solving those problems today.

Visual Studio Tools for AI offers a powerful toolset for training models that are easy to integrate into your applications. You can use the familiar VS environment to:

- Train and debug on your local machine, to test quickly.

- Train models on powerful VMs in Azure or on-premises Linux machines.

- Train models using Azure Batch AI to dynamically spin VMs up/down, so you only pay for what you need and use.

- Train models using Azure ML to get visibility into how your experiment improves over time.

- Export models to use in your applications.

In this next sample, you'll learn how to use Visual Studio Tools for AI to train a deep learning model locally, then scale it out to Azure, and include that model in an application. The source code and demo files for this sample are available here. This example creates an application that uses ML to recognize numbers/digits.

Additional End-to-End AI Samples

There are additional cutting-edge demos of solutions that cut across all three AI app pillars that I discussed above, and we are happy to make the source code for each of these available to you in the sections that follow.

JFK Files Demo

On November 22nd, 1963, the President of the United States, John F. Kennedy, was assassinated. He was shot by a lone gunman named Lee Harvey Oswald while riding in his motorcade through the streets of Dallas. The assassination has been the subject of so much controversy that, 25 years ago, an act of Congress mandated that all documents related to the assassination be released this year. The first batch of released files has more than 6,000 documents totaling 34,000 pages, and the last drop of files contains at least twice as many documents. We're all curious to know what's inside them, but it would take decades to read through these.

We approached this problem by using Azure Search and Cognitive Services to extract knowledge and gain insights from this deluge of documents, using a continuous process that ingests raw documents and enriches them into structured information that lets users explore the underlying data. You can access the source code and demo and read more about this at the blog post here.

Stack Overflow Bot Demo

The Stack Overflow bot is intended to demonstrate the integration between the Microsoft Bot Framework and Cognitive Services. This bot uses Bing Custom Search, the Language Understanding Intelligent Service (LUIS), QnA Maker and Text Analytics services. The Bot Framework lets you build bots using a simple but comprehensive API. The bots you build can be configured to work for your users wherever they are, such as on Slack, Skype, Microsoft Teams, Cortana and more.

The source code for this bot is available here. To learn more about developing bots with the Bot Framework, check out this documentation.

Skin Cancer Demo

This demo shows how to use Azure Machine Learning services (preview) end-to-end, using it to create an ML model for skin cancer detection and run the model on mobile phones. Skin cancer is the most common form of cancer, globally accounting for perhaps 40% of all cases. If detected at an early stage, it can be controlled. We wanted to create a mobile AI application for everyone to be able to quickly detect whether they need to seek help. The app could be used to flag a set of images which in turn could help doctors be more efficient and focus only on the most critical areas.

For this tutorial, we use the ISIC Skin Cancer dataset. You can refer to this GitHub link to find out how to download this research dataset. In this example, we achieve the following:

- Build the model using Azure ML.

- Convert the model to CoreML.

- Use the CoreML model in an iOS app.

The example also uses the Azure Machine Learning Workbench, a cross-platform desktop application that makes the modeling and model deployment process much faster than what was possible before.

You can find the source code for this example here and a link to the video for this demo is here.

Snow Leopard Demo

Snow leopards are highly endangered animals that inhabit high-altitude steppes and mountainous terrain in Central and South Asia. There are only an estimated 3,900 to 6,500 animals left in the wild. Due to the cats' remote habitat, expansive range and extremely elusive nature, they have proven quite hard to study. Very little is therefore known about their ecology, range, survival rates and movement patterns. To truly understand the snow leopard and influence its survival rates more directly, a lot more data is needed. Biologists have set up motion-sensitive camera traps in snow leopard territory in an attempt to gain a better understanding of these animals. In fact, over the years, these cameras have produced over 1 million images, and these images are used to understand the leopard population, range and other behaviors. This information, in turn, can be used to establish new protected areas as well as improve the many community-based conservation efforts administered by the Snow Leopard Trust.

The problem with snow leopard camera data, however, is that biologists must sort through all the images to identify the ones with snow leopards or their prey, as opposed to the vast majority of images that have neither. Doing this sort of classification manually is like finding needles in a haystack, and it takes around 300 hours of precious time for each camera survey. To address this problem, the Snow Leopard Trust and Microsoft entered a partnership wherein, by working with the Azure ML team, the Snow Leopard Trust was able to build an image classification model using deep neural networks at scale on Spark.
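To give a feel for how a classifier's output can cut down that manual sorting, here is a minimal sketch that splits images into "worth a look" and "skip" based on a per-image score. This is not the Snow Leopard Trust's actual pipeline: the `triage` function, the file names, the scores and the 0.5 threshold are all made up for illustration.

```python
def triage(scores, threshold=0.5):
    """Split image names into (keep, skip) lists by classifier score.

    `scores` maps an image name to the model's estimated probability
    that the image contains a snow leopard (hypothetical values).
    """
    keep = [name for name, score in scores.items() if score >= threshold]
    skip = [name for name, score in scores.items() if score < threshold]
    return keep, skip


# Made-up scores standing in for real model output.
scores = {"img_001.jpg": 0.97, "img_002.jpg": 0.03, "img_003.jpg": 0.61}
keep, skip = triage(scores)
print(f"Review {len(keep)} of {len(scores)} images: {keep}")
```

In practice, the threshold would be tuned so that very few genuine sightings are discarded, even if that means reviewing some empty frames.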

You can find the source code for this example here and a link to the video is here.

Summary

To quickly recap, this post was about how you can:

- Easily infuse AI magic into your apps, with the help of Cognitive Services and just a little bit of code.

- Create new user experiences, including intelligent bots and conversational AI solutions that understand your users.

- Reason on top of your big data, by creating your own custom AI models in a highly productive and efficient manner, by making use of the comprehensive tools and services we provide.

We are also excited to launch the AI School today – check out https://aischool.microsoft.com – a place where we will be posting several online courses and resources to help you learn all the tips and techniques necessary to be an ace AI developer.

We hope this post helps you get started with AI and motivates you to become an AI developer.