ICYMI: Recent Microsoft AI Updates, Including in Custom Speech Recognition, Voice Output, and Video Indexing

A quick recap of three recent posts about Microsoft AI platform developments, in case you missed them.

1. Start Building Your Own Code-Free, Custom Speech Recognition Models

Microsoft is at the forefront of speech recognition, having reached human parity on the Switchboard research benchmark. This technology is already transforming our daily lives, whether through digital assistants, dictation of emails and documents, or transcription of lectures and meetings. These scenarios are possible thanks to years of research and recent advances enabled by neural networks. As part of our mission to empower developers with our latest AI advances, we now offer a spectrum of Cognitive Services APIs that address a range of developer scenarios. For scenarios that involve domain-specific vocabularies or complex acoustic conditions, we offer the Custom Speech Service, which lets developers automatically tune speech recognition models to their needs.

As an example, a university may want to accurately transcribe and digitize all of its lectures. A biology lecture may include a term such as "Nerodia erythrogaster". Although extremely domain-specific, terms like these must be recognized accurately for the sessions to be transcribed correctly. It is also important to customize acoustic models so that the speech recognition system remains accurate in the specific environment where it will be deployed. For instance, a voice-enabled app used on a factory floor must work accurately despite persistent background noise.

The Custom Speech Service enables acoustic and language model adaptation with zero coding. Our user interface guides you through the entire process: importing data, adapting models, evaluating and optimizing them by measuring word error rates, and tracking improvements. It also guides you through model deployment at scale, so models can be accessed by your apps running on any number of devices. Creating, updating, and deploying models takes only minutes, making it easy to build and iteratively improve your app. To start building your own speech recognition models, see the original blog post here, and find out why so many Microsoft customers are previewing these services across a wide range of scenarios and environments today.
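Word error rate (WER), the metric mentioned above, is the word-level edit distance between a reference transcript and the recognizer's output, divided by the number of words in the reference. The sketch below is a generic illustration of that definition, not the Custom Speech Service's own evaluation code; the function name and the sample transcripts are made up for this example.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (substitutions + deletions + insertions) / reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words (classic Levenshtein, one row at a time).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical transcripts: a baseline model that splits the Latin name into
# three wrong words, and an adapted model that gets it right.
reference = "the plain bellied water snake is nerodia erythrogaster"
baseline = "the plain bellied water snake is nerodia a retro gaster"
adapted = "the plain bellied water snake is nerodia erythrogaster"

print("baseline WER:", word_error_rate(reference, baseline))
print("adapted WER:", word_error_rate(reference, adapted))
```

Comparing the two numbers before and after adaptation is the kind of improvement tracking described above.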

2. Use Microsoft Text-to-Speech for Voice Output in 34 Languages

With voice becoming increasingly prevalent as a mode of interaction, the ability to provide voice output (Text-to-Speech, or TTS) is becoming a critical technology in support of AI scenarios. The Speech API, a Microsoft Cognitive Service, now offers six additional TTS languages to all developers: Bulgarian, Croatian, Malay, Slovenian, Tamil and Vietnamese. This brings the total number of available languages to 34. Powered by the latest AI technology, these 34 languages are available across 48 locales and 78 voice fonts.

Developers can access the latest generation of speech recognition and TTS models through a single API. They can use the Text-to-Speech API across a broad range of use cases, either on its own – for accessibility or hands-free communication and media consumption, for instance – or in combination with other Cognitive Services APIs such as Speech to Text or Language Understanding to create comprehensive voice-driven solutions. Learn more about this exciting development in the original blog post here.

3. Gain Richer Insights on Your Video Recordings

There's a misconception that AI for video is simply extracting frames from a video and running computer vision algorithms on each frame. While one could certainly take that approach, it generally does not lead to deeper, richer insights. Video presents a new set of challenges and opportunities for optimization and insights that make this space quite different from processing a sequence of images. Microsoft's solution, the Video Indexer, implements several such video-specific algorithms.

As one example, consider detecting the people present in a video. Their heads and faces will appear in different poses and are likely to appear under varying lighting conditions as well. In a frame-based approach, we would end up with a list of possible matches from a face database for each frame, each with a different confidence value. Some of these matches may differ across a sequence of frames even when the very same person was in the video the entire time. An additional logic layer is needed to track a person across frames, evaluate the variations, and determine the true matching face. There is also an opportunity for optimization: we can reduce the number of queries we make by selecting an appropriate subset of frames to send to the face recognition system. The Video Indexer provides these capabilities, resulting in higher quality face detection.
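To make the idea of an additional logic layer concrete, here is a minimal sketch of one simple way to do it: query only every few frames of a tracked face, then pool the per-frame candidate matches and pick the identity with the highest total confidence. This is an illustration under stated assumptions, not the Video Indexer's actual algorithm; the function names, the stride-based sampling, and the sample names and scores are all hypothetical.

```python
from collections import defaultdict


def sample_frames(frame_indices, stride):
    """Pick every `stride`-th frame of a track to limit recognition queries."""
    if stride < 1:
        raise ValueError("stride must be at least 1")
    return frame_indices[::stride]


def resolve_identity(per_frame_matches):
    """Pool candidate matches over one tracked face and return the best name.

    `per_frame_matches` is a list with one entry per queried frame; each entry
    is a list of (candidate_name, confidence) pairs returned for that frame.
    Returns None when no frame produced any candidate.
    """
    totals = defaultdict(float)
    for matches in per_frame_matches:
        for name, confidence in matches:
            totals[name] += confidence
    if not totals:
        return None
    return max(totals, key=totals.get)


# Hypothetical track: the middle frame (a side view, say) ranks the wrong
# person first, but the pooled evidence still favors "alice".
track_matches = [
    [("alice", 0.90), ("bob", 0.40)],
    [("bob", 0.55), ("alice", 0.50)],
    [("alice", 0.80)],
]
print(sample_frames(list(range(10)), 4))
print(resolve_identity(track_matches))
```

Summing confidences is only one possible pooling rule; a weighted vote or a maximum over frames would fit the same structure.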

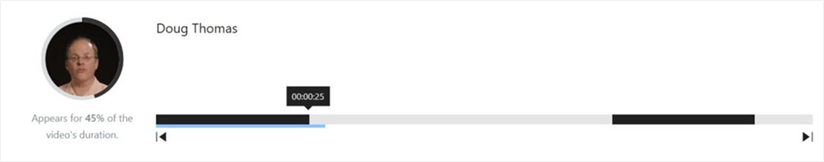

Next, take the example of tracking multiple people present in a video and displaying their presence in that video (or lack thereof) in a timeline view. Simple face detection on each video frame will not help us get to a timeline view of who was present during which part of a given video – getting to a timeline view requires us to track faces across frames, including accounting for side views of faces and other variations. Video Indexer does this sort of sophisticated face tracking and as a result you can see full timeline views on a video.
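Once a face has been tracked across frames, building a timeline amounts to turning the timestamps at which a person was seen into continuous intervals, while tolerating short gaps (for example, when a face briefly turns away). The sketch below shows that step only; the function name, the `max_gap` parameter, and the sample data are assumptions for illustration and do not describe how the Video Indexer is implemented.

```python
def presence_intervals(timestamps, max_gap):
    """Merge sighting timestamps (seconds) into (start, end) intervals.

    Consecutive sightings no more than `max_gap` seconds apart are treated as
    one continuous appearance.
    """
    intervals = []
    for t in sorted(timestamps):
        if intervals and t - intervals[-1][1] <= max_gap:
            intervals[-1][1] = t        # extend the current appearance
        else:
            intervals.append([t, t])    # start a new appearance
    return [(start, end) for start, end in intervals]


# Hypothetical sightings per person, as produced by a face tracker.
sightings = {
    "alice": [0.0, 0.5, 1.0, 2.0, 10.0, 10.5],
    "bob": [4.0, 4.5, 5.0],
}
for person, times in sightings.items():
    print(person, presence_intervals(times, max_gap=1.0))
```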

Similarly, videos offer an opportunity to extract potentially relevant topics or keywords via optical character recognition – for instance, from signage and brands that appear in the backdrop of a given video. However, if we process a video as a sequence of stills, we will often end up with many partial words, because such signage or text may be partially obscured in specific frames. Extracting the right keywords across the sequence of frames requires algorithms that reconcile these partial words. This, again, is something the Video Indexer does, thus yielding better insights. Learn more about the Video Indexer from the original blog post here.
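As a rough illustration of reconciling partial words, the sketch below treats a shorter OCR reading as evidence for a longer reading that contains it, and keeps only keywords seen often enough. It is a deliberately simple stand-in, assuming exact substring matches; a production system would likely also use fuzzy matching and the text's position in the frame. The function name, the `min_support` threshold, and the readings (including the fictional brand "Contoso") are hypothetical.

```python
from collections import Counter


def consolidate_ocr_readings(readings, min_support=2):
    """Fold partial OCR readings into the longest reading that contains them.

    Returns a dict mapping each surviving keyword to the number of readings
    that support it; keywords with fewer than `min_support` readings are dropped.
    """
    counts = Counter(r.strip().upper() for r in readings if r.strip())
    support = {}
    # Visit longer readings first so fragments are absorbed by complete words.
    for text, count in sorted(counts.items(), key=lambda kv: (-len(kv[0]), kv[0])):
        for keyword in support:
            if text in keyword:
                support[keyword] += count
                break
        else:
            support[text] = count
    return {kw: n for kw, n in support.items() if n >= min_support}


# Hypothetical per-frame readings of a sign that is partly hidden in most frames.
frame_readings = ["CONTO", "ONTOSO", "Contoso", "CONT", "EXIT", "EX", "QZ"]
print(consolidate_ocr_readings(frame_readings))
```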

ML Blog Team