How to Train a Deep-Learned Object Detection Model in the Microsoft Cognitive Toolkit

This post is authored by Patrick Buehler, Senior Data Scientist, and T. J. Hazen, Principal Data Scientist, at Microsoft.

Last month we published a blog post describing our work on using computer vision to detect grocery items in refrigerators. This was achieved by adding object detection capability, based on deep learning, to the Open Source Microsoft Cognitive Toolkit, formerly called the Computational Network Toolkit or CNTK.

We are happy to announce that this technology is now a part of the Cognitive Toolkit. We have published a detailed tutorial in which we describe how to bring in your own data and learn your own object detector. The approach is based on a method called Fast R-CNN, which was demonstrated to produce state-of-the-art results for Pascal VOC, one of the main object detection challenges in the field. Fast R-CNN takes a deep neural network (DNN) which was originally trained for image classification by using millions of annotated images and modifies it for the purpose of object detection.

Traditional approaches to object detection relied on expert knowledge to identify and implement so called "features" which highlighted the position of objects in an image. However, starting with the famous AlexNet paper in 2012, DNNs are now increasingly used to automatically learn these features. This has led to a huge improvement in accuracy, opening up the space to non-experts who want to build their own object detectors.

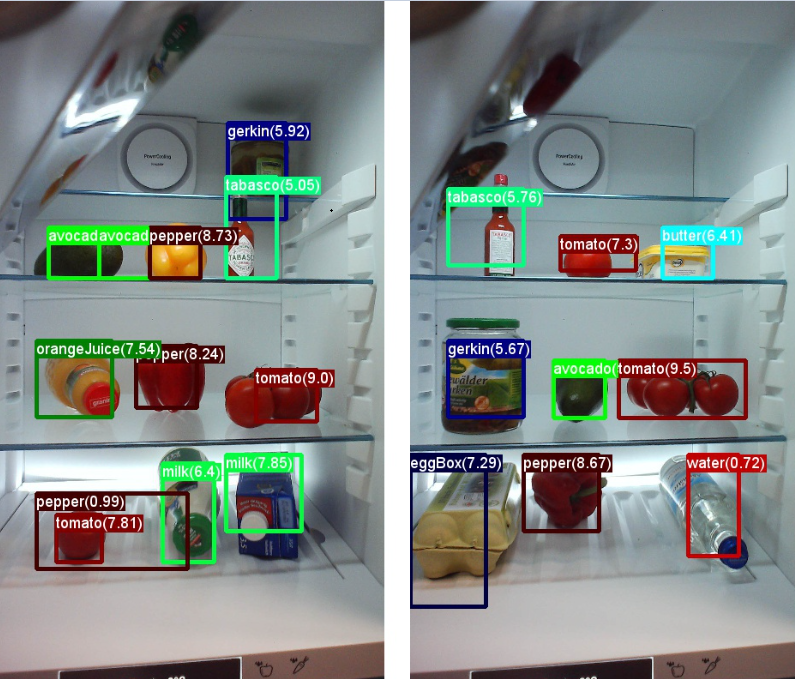

The tutorial itself starts by describing how to train and evaluate a model using images of objects in refrigerators. An example set of refrigerator images, with annotations indicating the positions of specific objects, is provided with the tutorial. It then shows how to annotate your own images using scripts to draw rectangles around objects of interest, and to label these objects to be of a certain class – for instance, an avocado, or an orange, etc. While the example images that we provide show grocery items, the same technology works for a wide range of objects, and without the need for a large number of training images.

The trained model, which can either use the refrigerator images we provided or your own images, can then be applied to new images which were not seen during training. See the graphic below, where the input to the trained model is an image from inside a refrigerator, and the output is a list of detected objects with a confidence score, overlaid on the original image for visualization:

Pre-trained image classification DNNs are generic and powerful, allowing this method to also be applied to many other use cases, such as finding cars or pedestrians or dogs in images.

More details can be found on the Cognitive Toolkit GitHub R-CNN page and in the Cognitive Toolkit announcement.

Patrick & TJ