Deep Learning Part 4: Content-Based Similar Image Retrieval using CNN

This post is authored by Anusua Trivedi, Data Scientist, Microsoft.

Background and Approach

This blog series is broken into several parts, in which I describe my experiences and go deep into the reasons behind my choices. In Part 1, I discussed the pros and cons of different symbolic frameworks and my reasons for choosing Theano (with Lasagne) as my platform. In Part 2, I described Deep Convolutional Neural Networks (DCNN) and how transfer learning and fine-tuning improve the training process for domain-specific images. Part 3 is based on my talk at PAPI 2016; in it, I showed the reusability of a trained DCNN model by combining it with a Long Short-Term Memory (LSTM) Recurrent Neural Network (RNN), applying the combined model to ACS fashion images to generate captions for them. Part 4 extends the work of Part 3 to retrieve the most similar clothing images.

Introduction

Content-Based Image Retrieval (CBIR) is the field of representing, organizing, and searching images based on their content rather than on image annotations. Here, we retrieve images using features extracted directly from the image data instead of keywords or tags.

Given the role of apparel in society, CBIR of fashion images has many applications. In the previous blog post, we took an ImageNet pre-trained GoogLeNet model, fine-tuned it through transfer learning, and extracted CNN features from the ACS fashion image dataset. We then used those CNN features to train an LSTM RNN model for ACS tag prediction. In this blog post, we change the model a little: instead of forwarding the re-trained image features to the LSTM RNN, we use them for CBIR of these fashion images.

Dataset

In this work, we use the ACS dataset as before. The ACS dataset was introduced together with a complete pipeline for recognizing and classifying people’s clothing in natural scenes. Such a pipeline has several interesting applications, including e-commerce, event and activity recognition, and online advertising. Its stages combine several state-of-the-art building blocks, such as upper body detectors, various feature channels, and visual attributes. ACS defines 15 clothing classes and provides a publicly available benchmark dataset of over 80,000 images for the clothing classification task. We use the ACS dataset to create a model that retrieves the most similar clothing images.

Fine-tuning GoogLeNet for ACS dataset

The GoogLeNet model that we use here was initially trained on ImageNet. The ImageNet dataset contains about one million natural images and 1,000 labels/categories. In contrast, our labeled ACS dataset has about 80,000 domain-specific fashion images and 15 labels/categories. On its own, the ACS dataset is too small to train a network as complex as GoogLeNet from scratch. Thus, we start from the weights of the ImageNet-trained GoogLeNet model and fine-tune all the layers of the pre-trained model by continuing the back-propagation on the ACS images.
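To make the idea of "continuing the back-propagation" concrete, here is a minimal NumPy sketch of fine-tuning. It is a toy under loud assumptions, not the GoogLeNet/Lasagne code used in this series: the data is random, `W1_pretrained` is a hypothetical stand-in for weights loaded from an ImageNet-trained model, and `W2` is a fresh 15-class head. The point it illustrates is that every layer, including the pre-trained one, keeps receiving gradient updates.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data standing in for images: 64 samples, 20 inputs, 15 classes.
n_samples, n_inputs, n_hidden, n_classes = 64, 20, 32, 15
X = rng.normal(size=(n_samples, n_inputs))
y = rng.integers(0, n_classes, size=n_samples)
Y = np.eye(n_classes)[y]  # one-hot labels

# Assumption: random values stand in for weights loaded from a pre-trained model.
W1_pretrained = rng.normal(scale=0.1, size=(n_inputs, n_hidden))
W1 = W1_pretrained.copy()
# New classifier head for the 15 target classes, initialised from scratch.
W2 = np.zeros((n_hidden, n_classes))


def forward(X, W1, W2):
    """Return hidden activations and softmax class probabilities."""
    H = np.maximum(X @ W1, 0.0)                     # ReLU hidden layer
    logits = H @ W2
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    P = np.exp(logits)
    P = P / P.sum(axis=1, keepdims=True)
    return H, P


def cross_entropy(P, Y):
    """Mean cross-entropy between probabilities P and one-hot labels Y."""
    return -np.mean(np.sum(Y * np.log(P + 1e-12), axis=1))


learning_rate = 0.1
_, P = forward(X, W1, W2)
initial_loss = cross_entropy(P, Y)

for _ in range(200):
    H, P = forward(X, W1, W2)
    d_logits = (P - Y) / n_samples           # gradient of the loss w.r.t. logits
    grad_W2 = H.T @ d_logits                 # gradient for the new head
    d_H = (d_logits @ W2.T) * (H > 0)        # back-propagate through the ReLU
    grad_W1 = X.T @ d_H                      # gradient for the pre-trained layer
    W2 -= learning_rate * grad_W2
    W1 -= learning_rate * grad_W1            # fine-tune the pre-trained layer too

_, P = forward(X, W1, W2)
final_loss = cross_entropy(P, Y)
print("loss before:", initial_loss, "loss after:", final_loss)
```

Freezing the pre-trained layer would simply mean skipping the `W1` update; here we update it because the post fine-tunes all layers.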

Similar Image Retrieval

Once we have fine-tuned the pre-trained DCNN on the ACS image dataset, we follow these steps:

- Remove the last fully connected layer, which gives us the predicted label.

- Take the last pooled layer, which contains all the re-trained fashion image features.

- Calculate the Euclidean distance between the extracted feature vectors to measure image similarity: the smaller the distance, the more similar the images.

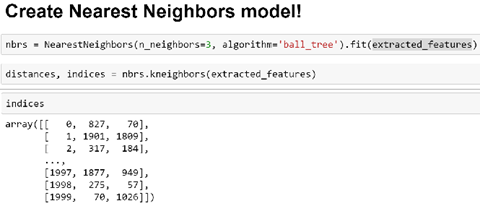

- Run a traditional Nearest Neighbors model on the extracted features to calculate the Euclidean distances among them [Figure 1].

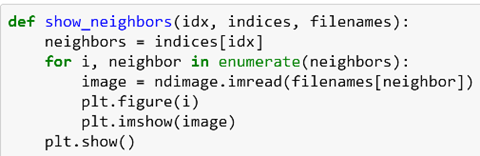

- Create a scoring function to show the top three most similar images compared to the input/test image [Figure 2].
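The distance and ranking steps above can be sketched in a few lines of NumPy. This is a minimal brute-force illustration, not the code shown in the figures: the function names `euclidean_distances` and `top_k_similar` are hypothetical, and the small `gallery` array is made-up data standing in for the pooled-layer features of real images.

```python
import numpy as np


def euclidean_distances(query, features):
    """Euclidean distance from one query vector to every row of `features`."""
    return np.sqrt(((features - query) ** 2).sum(axis=1))


def top_k_similar(query, features, k=3):
    """Return indices and distances of the k closest rows, nearest first."""
    dists = euclidean_distances(query, features)
    order = np.argsort(dists, kind="stable")[:k]
    return order, dists[order]


# Assumption: made-up 4-dimensional vectors stand in for extracted CNN features.
gallery = np.array([
    [0.0, 0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 2.0, 0.0, 0.0],
    [3.0, 3.0, 3.0, 3.0],
    [0.5, 0.5, 0.0, 0.0],
    [5.0, 0.0, 0.0, 0.0],
])
query = np.array([0.9, 0.1, 0.0, 0.0])

indices, distances = top_k_similar(query, gallery, k=3)
print("most similar gallery rows:", indices)
print("their distances:", distances)
```

For a gallery the size of ACS, a dedicated nearest-neighbor library would likely be a better fit than this brute-force scan, but the ranking logic is the same: compute distances, sort, keep the top three.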

Here we have re-trained the pre-trained DCNN for fashion images. However, re-training is not always necessary, especially when the pre-training images and the test images overlap heavily. For example, suppose you have an ImageNet pre-trained DCNN and you want to retrieve similar images of cats. The DCNN has already learned to identify cats as part of its ImageNet training, so no re-training is needed in such cases. Just take the ImageNet pre-trained DCNN, get the image features from the last pooling layer, and calculate Euclidean distances on those pre-trained features.

Sample Result

We pass sample test images to the scoring function and it plots the three images most similar to the test image [Figure 3].

Because we have retrained our model on ACS fashion images, the model focuses on the clothes in the images. So even if we pass a test image of a person wearing a scarf (as below), the person is ignored, and the model retrieves similar images based on the focus area (e.g., images with the most similar scarves).

Conclusion

Here we present an exciting implementation of content-based image retrieval. We believe that the CBIR field will see exponential growth, with the focus being more on application-oriented, domain-specific work, generating considerable impact in day-to-day life.

Figure 1: Nearest Neighbor model on extracted features

Figure 2: Function to plot top 3 similar images

Figure 3: Scoring function giving most similar image