Azure ML Text Classification Template

This blog post is authored by Mohamed Abdel-Hady, Senior Data Scientist at Microsoft.

Automatic text classification – also known as text tagging or text categorization – is a part of the text analytics domain. Its goal is to assign a piece of unstructured text to one or more classes from a predefined set of categories. The piece of text itself can be one of many different types, e.g. a document, news article, search query, email, tweet, support ticket, customer feedback, product review and so forth.

Azure ML now includes a template to help developers and data scientists easily build and deploy their text analytics solutions. Among other things, this template can be used to:

-

- Categorize newspaper articles into topics.

-

- Organize web pages into hierarchical categories.

-

- Filter email spam.

-

- Perform sentiment analysis.

-

- Predict users’ intent as expressed through search queries.

-

- Route support tickets.

-

- Analyze customer feedback.

Workflow

The image below presents the workflow of the template. Each step corresponds to an Azure ML experiment and these experiments must run in order as the output of one experiment is the input into the next.

The bag-of-words vector representation model is commonly used for text classification. In this representation, the frequency of occurrence of each word, or term-frequency (TF), is multiplied by the inverse document frequency, and the TF-IDF scores are used as feature values for training a classifier. The n-gram model is another common vector representation model. There is no conclusive answer regarding which one of these works the best – it really depends on the data. Our template provides the user with a framework to quickly try out different vector representations and choose the best one to publish as a web service.

Data Pipeline

In Azure ML , users can upload a dataset from a local file or connect to an online data source from the web, an Azure SQL database, Azure table, or Windows Azure BLOB storage by using the Reader module or Azure Data Factory.

For simplicity, this template uses pre-loaded sample datasets. However, users are encouraged to explore the use of online data because it enables real-time updates in an end-to-end solution. A tutorial for setting up an Azure SQL database can be found here: Getting started with Microsoft Azure SQL Database.

Steps 1-4 in the template (see picture above) represent the text classification model training phase. In this phase, text instances are loaded into the Azure ML experiment and the text is cleaned and filtered. Different types of numerical features are extracted from the text and models are trained on different feature types. Finally, the performance of the trained models are evaluated on unseen text instances and the best model is determined based on a number of evaluation criteria.

In steps 5A and 5B, the most accurate model gets deployed as a published web service either using RRS (Request Response Service) or BES (Batch Execution Service). When using RRS, only one text instance is classified at a time. When using BES, a batch of text instances can be sent for classification at the same time. By using these web services you can perform classification in parallel, either using an external worker or the Azure Data Factory, for greater efficiency.

Text Preprocessing Step

Unstructured text such as tweets, product reviews, or search queries usually require some preprocessing before analysis. This experiment includes a number of optional text preprocessing and text cleaning steps such as replacing special characters and punctuation marks with spaces, normalizing case, removing duplicate characters, removing user-defined or built-in stop-words and word stemming. These steps are implemented using the R programming language, as shown below.

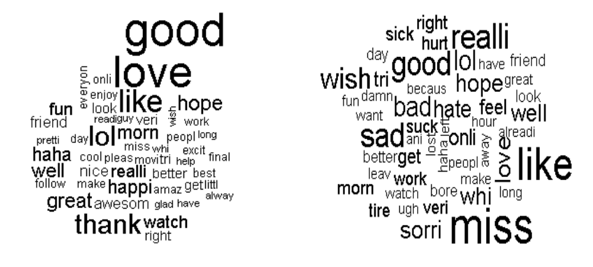

In this step, the user can visualize the most frequent words for each class. In the sentiment analysis use case, the first word cloud, shown below, represents the top positive sentiment-bearing words while the second word cloud shows the most frequent negative sentiment-bearing words in the input training corpus. This visualization helps us validate the quality of the training data before start to build the classification model in the next step.

Feature Engineering Step

The aim of this step is to convert variable-length unstructured text data into equal-length numeric feature vectors. The user has the option to try two types of text representations: N-Gram TF and Unigrams TF-IDF.

N-grams TF feature extraction

The Feature Hashing module can be used to convert variable-length text documents into equal-length numeric feature vectors using the 32-bit murmurhash v3 hashing method provided by the Vowpal Wabbit library. The objective of using feature hashing is dimensionality reduction. Additionally, feature hashing makes the lookup of feature weights faster at classification time because it uses hash value comparison instead of string comparison.

By default, the number of hashing bits is set to 15, and the number of n-grams is set to 2. With these settings, the hash table can hold 2^15 or 32,768 entries, in which each hashing feature represents one or more n-gram features and its value represents the occurrence frequency of that n-gram in the text instance. For many problems, a hash table of this size is more than adequate, although, in some cases, more space might be needed to avoid collisions. You should evaluate the performance of your ML solution using different numbers of bits.

The classification time and complexity of a trained model depends on the number of features (i.e. the dimensionality of the input space). For a linear model such as a support vector machine, the complexity is linear with respect to the number of features. For text classification tasks, the number of features resulting from feature extraction is high because each n-gram is mapped to a feature. The Filter Based Feature Selection module is used to select a more compact feature subset from the exhaustive list of extracted hashing features. The aim is to avoid the effects of the curse of dimensionality and to reduce the computational complexity without harming classification accuracy. Azure ML supports a number of scoring methods such as Pearson Correlation, Mutual Information, Kendall Correlation, Spearman Correlation and Chi-Squared.

Unigrams TF-IDF feature extraction

Create the Word Dictionary

First, extract the set of unigrams (words) that will be used to train the text model. In addition to the unigrams, the number of documents where each word appears in the text corpus is counted (DF). It is not necessary to create the dictionary on the same labeled data used to train the text model – any large corpus that fairly represents the frequency of words in the target domain of classification can be used, even if it is not annotated with the target categories.

TF-IDF Calculation

When the metric word frequency of occurrence (TF) in a document is used as a feature value, a higher weight tends to be assigned to words that appear frequently in a corpus (such as stop-words). The inverse document frequency (IDF) is a better metric, because it assigns a lower weight to frequent words. You calculate IDF as the log of the ratio of the number of documents in the training corpus to the number of documents containing the given word. Combining these numbers in a metric (TF/IDF) places greater importance on words that are frequent in the document but rare in the corpus. This assumption is valid not only for unigrams but also for bigrams, trigrams, etc. The Filter Based Feature Selection module is used to select a more compact feature subset from the exhaustive list of extracted unigram features.

Train and Evaluate Model Step

In this step, users train and evaluate text classification models using state-of-the-art ML algorithms ranging from Two-Class Logistic Regression, Two-Class Support Vector Machine and Two-Class Boosted Decision Tree for binary text classification to One-vs-All Multiclass, Multiclass Logistic Regression and Multiclass Decision Forest for multi-class text classification. The user will also find the Sweep Parameters module that allows her to get the optimal values for the underlying learning algorithm parameters. In the case of binary models, the user has the advantage of visualizing the weights assigned to different words/unigrams that help her justify the decision of the trained model. The weights shown here are a subset of the weights of the sentiment analysis model trained using the template.

The Evaluate Model module helps the user visualize the results of the comparison between the two trained models: the N-grams model (in blue color in the graphs) and unigrams model (in red color in the graphs). Based on this evaluation, the last step deploys the best trained model as a web service.

Deploy Trained Models as Web Services

A key feature of Azure ML is the ability to easily publish models as web services. This step deploys the N-grams TF and the unigrams TF-IDF text models trained in the previous step as web services. Web service entry and exit points are defined using the special Web Service modules. As noted earlier, the web service can be consumed in two modes: RRS (request-response) or BES (batch). Sample code to call the web services are provided in C#, Python and R.

Here are Azure ML Gallery links to each step of the template:

We hope you get an opportunity to use our new template for your text analytics needs. Do share your feedback or thoughts in the comments below.

Mohamed

Comments

- Anonymous

January 01, 2003

@Francisco - yes, the template supports other languages providing that the input training text instances are already tokenized (words are separated by space). In the near future, we are going to add a word breaker (tokenizer) to the text preprocessing step. - Anonymous

May 06, 2015

Is it possible to use this tool with languages other than English, such as Spanish? - Anonymous

May 06, 2015

On the outset..I must say this is fantastic!!

So in terms of performing sentiment analysis will the web service be able to accept large volumes of data or is it restricted in size. For example can I submit a document as the input and will the web service be able to accept it?