Artificial Intelligence and Machine Learning on the Cutting Edge

This post is authored by Ted Way, Senior Program Manager at Microsoft.

Today we are excited to announce the ability to bring intelligence to the edge with the integration of Azure Machine Learning and Azure IoT Edge. Businesses today understand that artificial intelligence (AI) and machine learning (ML) are critical to helping them move from telling the "what happened" story to telling the "what will happen" and "how can we make it happen" stories. The challenge is how to apply AI and ML to data that cannot make it to the cloud, for instance because of data sovereignty, privacy, bandwidth, or other constraints. With this integration, all models created using Azure Machine Learning can now be deployed to any IoT gateway or device running the Azure IoT Edge runtime. These models are deployed to the edge as containers and can run on devices with a very small footprint.

Intelligent Edge

Use Cases

There are many use cases for the intelligent edge, where a model is trained in the cloud and then deployed to an edge device. For example, a hospital wants to use AI to identify lung cancer on CT scans. Because of patient privacy and bandwidth limitations, a large CT scan containing hundreds of images can be analyzed on a server in the hospital rather than being sent to the cloud. Another example is predictive maintenance of equipment on an oil rig in the ocean. All the data from the sensors on the oil rig can be sent to a server on the oil rig, where ML models predict whether equipment is about to break down. Some of that data can then be sent on to the cloud to get an overview of what's happening across all oil rigs and for historical data analysis. Other examples include sensitive financial data and personally identifiable information, which can also be processed on edge devices instead of being sent to the cloud.
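To make the oil-rig pattern concrete, here is a minimal sketch, assuming hypothetical sensor fields (`vibration_mm_s`, `temperature_c`) and a hand-written placeholder rule in place of a trained model. Every reading is scored on the rig, and only a compact per-pump summary is produced for upload to the cloud.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, Iterable, List


@dataclass
class SensorReading:
    pump_id: str
    vibration_mm_s: float  # hypothetical field: vibration velocity
    temperature_c: float   # hypothetical field: bearing temperature


def failure_risk(reading: SensorReading) -> float:
    """Placeholder for a trained model; returns a risk score capped at 1.0.

    A real deployment would call the model container instead of this rule.
    """
    score = 0.08 * reading.vibration_mm_s + 0.01 * max(0.0, reading.temperature_c - 60.0)
    return min(1.0, score)


def summarize_for_cloud(readings: Iterable[SensorReading], threshold: float = 0.7) -> Dict[str, dict]:
    """Score every reading locally and return only a small per-pump summary.

    The raw readings stay on the rig; only this summary would be uploaded.
    """
    risks: Dict[str, List[float]] = {}
    for r in readings:
        risks.setdefault(r.pump_id, []).append(failure_risk(r))
    return {
        pump: {
            "mean_risk": round(mean(scores), 3),
            "alert": max(scores) >= threshold,
            "readings": len(scores),
        }
        for pump, scores in risks.items()
    }


readings = [
    SensorReading("pump-1", 2.0, 55.0),
    SensorReading("pump-1", 9.5, 70.0),
    SensorReading("pump-2", 1.0, 50.0),
]
print(summarize_for_cloud(readings))
```

The design choice is the bandwidth trade-off described above: the per-reading work happens next to the equipment, and the cloud receives a few numbers per pump for fleet-wide overview and historical analysis.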

Intelligent Edge Architecture

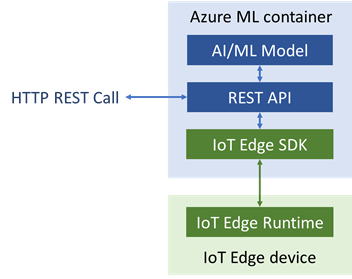

The diagram above shows how to bring models to edge devices. In the example of finding lung cancer on CT scans, Azure ML can be used to train models in the cloud using big data and CPU or GPU clusters. The model is operationalized to a Docker container with a REST API, and the container image can be stored in a registry such as Azure Container Registry.
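Azure ML scoring scripts are commonly written as an `init()` function that loads the model once when the container starts and a `run()` function that is called for each request to the REST API. The sketch below follows that shape; `PlaceholderModel`, its weights, and the `{"data": [...]}` request format are hypothetical stand-ins for a real trained lung-cancer model and its input schema.

```python
import json


class PlaceholderModel:
    """Hypothetical stand-in for a trained model: a fixed linear scorer."""

    def __init__(self, weights):
        self.weights = weights

    def predict(self, features):
        return sum(w * x for w, x in zip(self.weights, features))


model = None  # set once by init(), reused by every run() call


def init():
    """Called once when the container starts: load the model into memory.

    A real script would deserialize the trained model file here.
    """
    global model
    model = PlaceholderModel([0.6, 0.4])


def run(raw_data):
    """Called per REST request: parse JSON input, score each row, return JSON."""
    try:
        rows = json.loads(raw_data)["data"]
        return json.dumps({"scores": [model.predict(row) for row in rows]})
    except (KeyError, TypeError, ValueError) as exc:
        # Malformed requests get an error payload instead of crashing the service.
        return json.dumps({"error": str(exc)})


init()
print(run(json.dumps({"data": [[1.0, 0.0], [0.0, 1.0]]})))  # {"scores": [0.6, 0.4]}
```

Loading the model in `init()` rather than in `run()` keeps per-request latency low, which matters even more on a resource-constrained edge device.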

In the hospital, an Azure IoT Edge gateway such as a Linux server can be registered with Azure IoT Hub in the cloud. Using IoT Hub, the processing pipeline on the gateway can be configured as a JSON file. For example, a data ingest container knows how to talk to devices, and the output of that container goes to the ML model. The edge configuration JSON file is deployed to the edge device, which then pulls the right container images from the container registries.
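A minimal sketch of the kind of configuration described above, assuming a made-up, simplified schema (this is not the actual IoT Edge deployment manifest format). It names the two container images to pull, routes the ingest container's output into the model, and sends the model's output upstream to IoT Hub. The registry name, image tags, and module names are hypothetical; the route strings are written in the style of IoT Edge route declarations.

```python
import json
from typing import Dict, List


def build_edge_config(registry: str, ingest_image: str, model_image: str) -> Dict[str, dict]:
    """Build a simplified, hypothetical pipeline config for one edge gateway.

    The schema is illustrative only; it is not the real IoT Edge deployment
    manifest format.
    """
    modules = {
        "ingest": {"image": f"{registry}/{ingest_image}"},    # talks to devices
        "lungmodel": {"image": f"{registry}/{model_image}"},  # Azure ML model container
    }
    routes = {
        # Output of the ingest container becomes input to the ML model.
        "ingestToModel": "FROM /messages/modules/ingest/outputs/* "
                         'INTO BrokeredEndpoint("/modules/lungmodel/inputs/input1")',
        # Model results are forwarded upstream to IoT Hub.
        "modelToCloud": "FROM /messages/modules/lungmodel/outputs/* INTO $upstream",
    }
    return {"modules": modules, "routes": routes}


def images_to_pull(config: Dict[str, dict]) -> List[str]:
    """Container images the edge device would need to pull from the registry."""
    return sorted(m["image"] for m in config["modules"].values())


config = build_edge_config("myregistry.azurecr.io", "ingest:1.0", "lung-model:1.0")
print(json.dumps(config, indent=2))
print(images_to_pull(config))
```

Keeping the pipeline as data rather than code is what lets IoT Hub push a new configuration to the gateway without rebuilding any container.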

In this way, AI and ML can be applied to data in the hospital, whether from medical images, sensors, or anything else that generates data.

Azure ML Integration

All Azure ML models operationalized as Docker containers can also run on Azure IoT Edge devices. The container has a REST API that can be used to access the model. This container can be instantiated on Azure Container Service to scale out to handle as many requests as you need, or deployed to any device that can run a Docker container.
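A minimal client sketch for calling a model container over REST, using only the Python standard library. The `/score` path and the `{"input": [...]}` and `{"predictions": [...]}` payloads are assumptions for illustration; the real route and schema depend on how the model was operationalized. A stub HTTP server stands in for the container so the example runs on its own.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class StubScoringHandler(BaseHTTPRequestHandler):
    """Stands in for the model container's REST API (hypothetical /score route)."""

    def do_POST(self):
        if self.path != "/score":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length))
        # Fake "model": flag any input value above 0.5.
        body = json.dumps({"predictions": [v > 0.5 for v in payload["input"]]}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet


def score(base_url, values, timeout=5.0):
    """POST a JSON payload to the model's scoring endpoint and return the parsed reply."""
    req = urllib.request.Request(
        f"{base_url}/score",
        data=json.dumps({"input": values}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    server = HTTPServer(("127.0.0.1", 0), StubScoringHandler)  # port 0: pick any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        print(score(f"http://127.0.0.1:{server.server_port}", [0.2, 0.9]))  # {'predictions': [False, True]}
    finally:
        server.shutdown()
        server.server_close()
```

The same `score()` call works whether the container runs on a scaled-out cloud service or on a gateway in the hospital; only `base_url` changes.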

For edge deployments, the Azure ML container can talk to the Azure IoT Edge runtime. The Azure IoT Edge runtime is installed separately on a device, and it brokers communication among containers. The model may still be accessed via the REST API, or data from the Azure IoT Edge message bus can be picked up and processed by the model via the Azure IoT Edge SDK that's incorporated into the Azure ML container.
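The brokering role can be illustrated with a toy, in-process router, assuming hypothetical module names (`ingest`, `mlmodel`) and a made-up message format. This is not the Azure IoT Edge SDK; it only mimics the idea that modules publish to named outputs and the runtime forwards messages along configured routes, including upstream to the cloud.

```python
from typing import Callable, Dict, List, Tuple

Handler = Callable[[dict], None]
UPSTREAM = ("$upstream", "")  # pseudo-endpoint for messages bound for the cloud


class ToyBroker:
    """Toy stand-in for the runtime's broker: modules never call each other
    directly; they publish to named outputs and the broker follows routes."""

    def __init__(self) -> None:
        self.routes: Dict[Tuple[str, str], List[Tuple[str, str]]] = {}
        self.handlers: Dict[Tuple[str, str], Handler] = {}
        self.upstream: List[dict] = []
        # Messages routed to UPSTREAM are collected here instead of sent to a cloud.
        self.handlers[UPSTREAM] = self.upstream.append

    def add_route(self, src: Tuple[str, str], dst: Tuple[str, str]) -> None:
        self.routes.setdefault(src, []).append(dst)

    def register_input(self, module: str, input_name: str, handler: Handler) -> None:
        self.handlers[(module, input_name)] = handler

    def send(self, module: str, output: str, message: dict) -> None:
        # Deliver the message to every input routed from this module output.
        for dst in self.routes.get((module, output), []):
            self.handlers[dst](message)


def register_model_module(broker: ToyBroker, threshold_mm: float = 8.0) -> None:
    """Hypothetical model module: labels each incoming message and republishes it."""

    def on_input(message: dict) -> None:
        # Placeholder rule standing in for real model inference.
        label = "flag" if message["nodule_mm"] > threshold_mm else "normal"
        broker.send("mlmodel", "output1", {**message, "label": label})

    broker.register_input("mlmodel", "input1", on_input)


broker = ToyBroker()
register_model_module(broker)
broker.add_route(("ingest", "output1"), ("mlmodel", "input1"))
broker.add_route(("mlmodel", "output1"), UPSTREAM)
broker.send("ingest", "output1", {"scan_id": "ct-001", "nodule_mm": 12.0})
print(broker.upstream)  # [{'scan_id': 'ct-001', 'nodule_mm': 12.0, 'label': 'flag'}]
```

Because the ingest module only knows its own output name, the model container can be swapped or re-routed by changing the routes, without touching either module's code.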

AI Toolkit for Azure IoT Edge

Today's IoT sensors can measure temperature, vibration, humidity, and similar quantities. AI on the edge promises to revolutionize IoT sensing, making it possible to detect things that could not be sensed before, such as visual defects in products on an assembly line, acoustic anomalies from machinery, or entity matching in text. The AI Toolkit for Azure IoT Edge is a great way to get started. It is a collection of scripts, code, and deployable containers that enable you to get up and running quickly with AI on edge devices.

We cannot wait to see the exciting ways in which you start bringing AI and ML to the [cutting] edge. If all this sounds exciting to you, we’re always on the lookout for great people to join our team!

Ted

@tedwinway