A New Real-Time AI Platform from Microsoft, and a Speech Recognition Milestone

Re-posted from the Microsoft Research blog.

Microsoft made a couple of major AI-related announcements earlier this week, summarized below.

Project Brainwave, for Real-Time AI

Microsoft unveiled Project Brainwave earlier this week. A new deep learning acceleration platform, Project Brainwave represents a big leap forward in performance and flexibility for serving cloud-based deep learning models. The entire system is designed for high speed and low latency, and can be used in a wide range of real-time AI applications, such as IoT, search queries, videos, real-time user interactions, and many more.

Project Brainwave was demonstrated using Intel's new 14 nm Stratix 10 FPGA.

Project Brainwave has three main layers: a high-performance distributed system architecture, a hardware DNN engine synthesized onto FPGAs, and a compiler and runtime for low-friction deployment of trained models. We used the massive FPGA infrastructure that we've deployed over the past few years, exploiting the flexibility of FPGAs to get research innovations into the hardware platform faster (typically in a matter of weeks) and to increase performance without losing model accuracy.

Project Brainwave incorporates a software stack that supports a wide range of popular deep learning frameworks, including Microsoft Cognitive Toolkit and Google TensorFlow. We are working to bring our industry-leading real-time AI capabilities to users in Azure, so customers can run their most complex deep learning models at record-setting performance. Learn more about Project Brainwave here.

New Speech Recognition Milestone

Switchboard is a corpus of recorded telephone conversations that the research community has used to benchmark speech recognition systems for more than 20 years. The task involves transcribing conversations between strangers discussing topics such as sports and politics. Last year, Microsoft announced a major milestone when we reached human parity on Switchboard conversational speech recognition. Essentially, our technology recognizes words in a conversation just as well as professional human transcribers.

After our transcription system reached the 5.9 percent word error rate that we had measured for humans, other researchers conducted their own study, employing a more involved multi-transcriber process, which yielded a human word error rate of 5.1 percent. This was consistent with prior research showing that humans achieve higher levels of agreement on the precise words spoken as they expend more care and effort.
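
For readers unfamiliar with the metric, word error rate is commonly computed as the word-level edit distance (substitutions, deletions, and insertions) between a reference transcript and the system's output, divided by the number of words in the reference. The sketch below illustrates that standard definition only. The function name and the whitespace tokenization are assumptions made for illustration, and it is not the scoring tool used for the Switchboard benchmark.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length.

    Illustrative only: splits on whitespace and applies no text
    normalization, unlike real benchmark scoring pipelines.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far (one fewer than the current row) and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr

    return prev[-1] / len(ref)
```

Under this definition, a 5.1 percent word error rate corresponds to roughly 5 word errors for every 100 words in the reference transcript.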

Earlier this week, our research team reached that 5.1 percent error rate with our speech recognition system, a new industry milestone that substantially surpasses the accuracy we achieved last year. We reduced our error rate by 12 percent from last year's level, using improvements to our neural net-based acoustic and language models. We introduced an additional convolutional neural network combined with bidirectional long short-term memory (CNN-BLSTM) model for improved acoustic modeling. Additionally, our approach to combining predictions from multiple acoustic models now does so at both the frame/senone and word levels. We published a technical report that has the full system details.
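
To give a rough sense of what frame-level combination can look like, the sketch below averages per-frame class probabilities from several acoustic models. This is an assumption made for illustration: the post does not say how the predictions are combined, and the function name, the weighting scheme, and the toy two-class frames are all hypothetical. The technical report remains the authority on the actual system.

```python
from typing import List, Optional, Sequence


def combine_frame_posteriors(
    model_outputs: Sequence[Sequence[Sequence[float]]],
    weights: Optional[Sequence[float]] = None,
) -> List[List[float]]:
    """Weighted average of per-frame class probabilities across models.

    model_outputs[m][t][k] is the probability that model m assigns to
    class k (for example, a senone) at frame t. All models are assumed
    to cover the same frames and the same classes.
    """
    n_models = len(model_outputs)
    if n_models == 0:
        raise ValueError("need at least one model output")
    if weights is None:
        weights = [1.0] * n_models  # hypothetical default: equal weights
    if len(weights) != n_models:
        raise ValueError("one weight per model is required")
    total = float(sum(weights))

    combined = []
    # zip(*model_outputs) yields, for each frame, one row per model.
    for frames in zip(*model_outputs):
        n_classes = len(frames[0])
        combined.append([
            sum(w * frame[k] for w, frame in zip(weights, frames)) / total
            for k in range(n_classes)
        ])
    return combined
```

With equal weights, two models that assign probabilities of 0.8 and 0.4 to the same class at a given frame produce a combined value of about 0.6 for that class.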

Our team also benefited from using the most scalable deep learning software available, Microsoft Cognitive Toolkit 2.1 (CNTK), for exploring model architectures and optimizing the hyper-parameters of our models. Additionally, our investment in cloud compute infrastructure, specifically Azure GPUs, improved the effectiveness and speed with which we could train models and test new ideas.

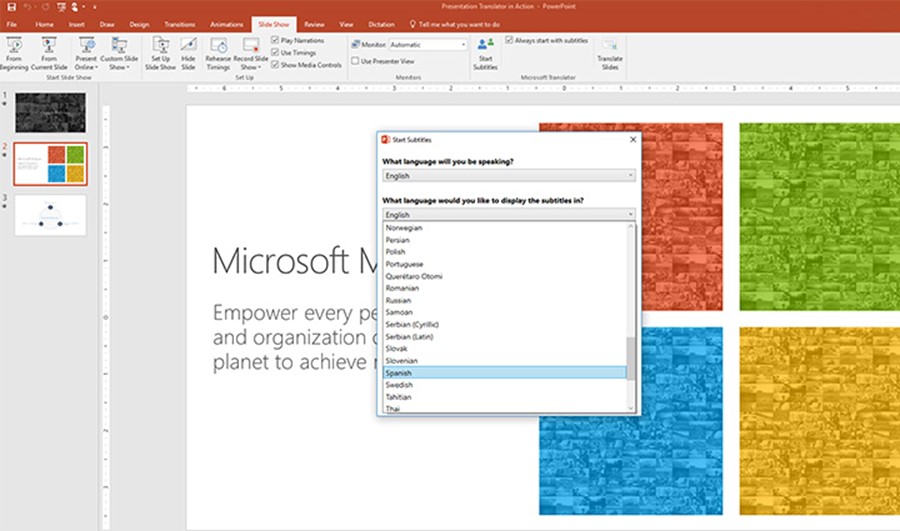

The Presentation Translator service can translate presentations in real-time for multi-lingual audiences.

Reaching human parity has been a research goal for the last 25 years. Our past investments are now paying dividends for customers in the form of products and services such as Cortana, Presentation Translator, and Microsoft Cognitive Services.

While this is a significant achievement, our team (and the speech research community at large) still has many challenges to address. These include achieving human levels of recognition in noisy environments or with distant microphones, and recognizing accented speech, or speaking styles and languages for which only limited training data is available. There's also much work ahead when it comes to computers understanding meaning and intent. Meanwhile, you can learn more about our latest milestone here.

ML Blog Team