Gaming for the greater good

Big games are nothing new at the University of Michigan (UM). Just ask any UM alum about the cross-state rivalry with Michigan State or—shudder—the annual football grudge match against Ohio State. But big-time sports aren’t the only games that matter in Ann Arbor, as Dr. David Chesney’s students will tell you. Every year, students in his upper-level course, Software Engineering, and his entry-level class, Gaming for the Greater Good, are challenged to use technology to create games that serve a social purpose.

“Each year, we look for ways to use gaming technology to help people overcome physical or cognitive disabilities,” explains Chesney. “We look at eight or ten project ideas, then select one or two to work on for a semester or even an entire year. The idea is to let the students experiment with technology and see what it’s capable of in terms of solving real-world problems.”

And one of the technologies that his students seem to gravitate to is Kinect for Windows. “The students are drawn to Kinect for Windows. They find interesting, often nontraditional, ways of using it, which have led to a couple of major successes,” says Chesney.

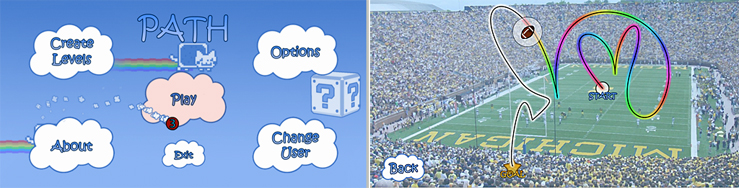

The first of those successes, and the one closest to being released as a commercial product, is a physical therapy application called PATH (the name is an acronym for Physically Assistive THerapy). PATH takes advantage of the Kinect sensor’s body tracking capabilities, challenging users to use different body parts—hands, head, or knees, for example—to trace a pattern that is projected on a large screen.

PATH home screen, left, and a sample pattern, right

Originally intended to help children with autism perfect their gross motor skills, PATH is now being used to provide physical therapy for people with brachial palsy injuries. The game provides multiple levels of difficulty, and it tracks the patient’s progress in terms of accuracy, the time it takes to complete a pattern, and more, allowing both therapist and patient to notice quantifiable improvements in motor function. The game also lets therapists create customized paths, so the therapy can be individualized to meet a particular patient’s needs.
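The article does not say how PATH computes its progress measures, so the following is only a minimal sketch of how accuracy and completion time for a traced pattern might be scored. It assumes the tracked body part has already been reduced to timestamped 2D screen positions and that the pattern is a polyline. The names `score_trace`, `tolerance`, and the returned fields are hypothetical and are not taken from PATH.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Sample = Tuple[float, Point]  # (timestamp in seconds, tracked position)


def point_to_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the line segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        # Degenerate segment: a and b are the same point.
        return math.hypot(p[0] - a[0], p[1] - a[1])
    # Project p onto the segment and clamp to its endpoints.
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def distance_to_path(p: Point, path: List[Point]) -> float:
    """Distance from p to the nearest segment of the target pattern."""
    return min(point_to_segment_distance(p, a, b) for a, b in zip(path, path[1:]))


def score_trace(samples: List[Sample], path: List[Point], tolerance: float) -> Dict[str, float]:
    """Hypothetical scoring: fraction of samples near the path, mean error, elapsed time."""
    if not samples or len(path) < 2:
        raise ValueError("need at least one sample and a path with two or more points")
    distances = [distance_to_path(pos, path) for _, pos in samples]
    on_path = sum(1 for d in distances if d <= tolerance)
    return {
        "accuracy": on_path / len(distances),
        "mean_error": sum(distances) / len(distances),
        "elapsed_seconds": samples[-1][0] - samples[0][0],
    }
```

Comparing such scores across sessions is one way a therapist could see the kind of quantifiable improvement described above. A real implementation would also need to check that the patient covered the whole pattern rather than hovering near one part of it.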

PATH has undergone clinical trials at the University of Michigan’s associated hospital, and Chesney hopes to make it available commercially by early 2016. Any proceeds from the commercialization of PATH, or any of the other games developed by Chesney’s students, will be donated to organizations that work with children who have disabilities.

A more recent Kinect-enabled project, which came out of a collaboration between Chesney’s class and an architecture course at UM, is the tactile coloring book. It’s not really a book, but a large piece of stretchable fabric that displays several outlined figures: shapes, animals, cars, and so forth. When the right amount of tactile pressure is applied to one of these objects, the fabric responds by changing color, thus enabling a child to color the object by touch. The project is intended to help certain children on the autism spectrum who have difficulty gauging how much pressure they apply when performing certain tasks. Consequently, they often use either too much or too little pressure when doing things like using a touch screen or petting a cat.

Kinect for Windows plays a major role in the tactile coloring project. A Kinect sensor located behind the fabric senses the depth to which the material is being stretched, and this data is algorithmically translated into a measurement of the pressure being applied to the fabric. When the child applies the right amount of pressure, neither too little nor too much, the fabric changes color, providing the youngster with a visual reward for using the correct amount of tactile force.
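The article does not describe the algorithm that turns depth into pressure, so the sketch below is only an illustration under stated assumptions. It assumes that depth readings are in millimeters, that the fabric moves toward the sensor when pressed, and that pressure is roughly proportional to how far the fabric is stretched. The `stiffness` constant, the threshold values, and all function names are hypothetical.

```python
def fabric_displacement_mm(rest_depth_mm: float, current_depth_mm: float) -> float:
    """Distance the fabric has moved toward the sensor, never negative."""
    return max(0.0, rest_depth_mm - current_depth_mm)


def estimate_pressure(displacement_mm: float, stiffness: float = 0.05) -> float:
    """Assumed linear model: pressure grows in proportion to the stretch."""
    return stiffness * displacement_mm


def classify_touch(
    rest_depth_mm: float,
    current_depth_mm: float,
    low: float,
    high: float,
    stiffness: float = 0.05,
) -> str:
    """Label a touch as too light, in the target range, or too hard."""
    displacement = fabric_displacement_mm(rest_depth_mm, current_depth_mm)
    pressure = estimate_pressure(displacement, stiffness)
    if pressure < low:
        return "too_light"
    if pressure > high:
        return "too_hard"
    return "in_range"


# Example: fabric rests 1000 mm from the sensor and is pushed in by 40 mm.
print(classify_touch(1000.0, 960.0, low=1.5, high=3.0))
```

In a setup like the one described, only the "in_range" result would trigger the color change. A real installation would need to calibrate the resting depth and the pressure model for the actual fabric.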

https://www.youtube.com/watch?v=uQU7ZhMvH2k

With the 2016 winter semester now under way, Chesney and his students are examining ideas for their next projects. High on the list of possibilities is a “sensory room” for a school that serves special-needs children, most of whom require full-time nursing care. One of the ideas is to use Kinect for Windows to recognize sensory input from the kids, which could be used to alert the nursing staff to the students’ needs.

Another project under consideration is help for a teenaged boy with severe cerebral palsy, who has proficient fine-motor skills in only his right foot. The latest version of Kinect for Windows includes enhanced body tracking capabilities, such as the ability to recognize finger gestures. Chesney ponders whether Kinect for Windows could be trained to recognize the young man’s foot gestures, which could then be translated into any number of actions. “We don’t know if this could work,” says Chesney, “but I’m hoping some of my students will want to give it a try. Often, you can learn as much from failure as you can from success.”

Whatever the outcomes, Chesney is committed to the idea of “gaming for the greater good,” and we’re just thrilled that Kinect for Windows can be part of his mission.

Kinect for Windows Team

Key links

- Learn more about Kinect for Windows

- Learn about the latest Kinect sensor

- Hear Chesney’s students talk about using Kinect to create games to help kids with autism

Comments

- Anonymous

January 17, 2016

When are you going to improve the Kinect for Windows driver to allow the use of 2 or 4 Kinect v2 (Xbox One) sensors inside a virtual machine? Right now just a single Kinect is supported inside VMware or Parallels. But for higher accuracy, a minimum of 2 Kinect sensors is needed.

- Anonymous

January 27, 2016

Thank you for your interest. At this time, we have no update on the use of multiple Kinect for Xbox One sensors inside a virtual machine (VM).

Beginning with the Kinect for Windows SDK 1.6, the v1 Kinect for Windows sensor has worked in Windows running in VMs, as explained on http://msdn.microsoft.com/en-us/library/jj663795.aspx.

Kinect for Windows v2 will not support VMs. The VM would have to emulate or virtualize all the necessary DX11/Shader Model 5 requirements for compute without adding latency, to ensure that all frames arrive on time. There is a very limited threshold when sending frames to the compute shader.

Please direct additional questions to our public Kinect for Windows v2 SDK forum, where you can exchange ideas with the Kinect community and Microsoft engineers. You can also browse existing questions or ask a new question by clicking the Ask a question button. Access the forum at https://social.msdn.microsoft.com/Forums/en-US/home?forum=kinectv2sdk&filter=alltypes&sort=lastpostdesc.

- Anonymous

February 04, 2016

Ok, using the Kinect for Windows Unity package, but need to test for MULTIPLE people. Without hiring 'actors' how can this be accomplished? I know Kinect Studio allows recording of data, but can this be used to feed the Unity app?