Visual Studio 2010 + .NET 4.0 = Parallelism for the Masses

Got Multiple Processors? With the hardware advances today, you would really have to search hard to find a good laptop, desktop, or server that doesn’t come with multi-core processor technologies in the box...

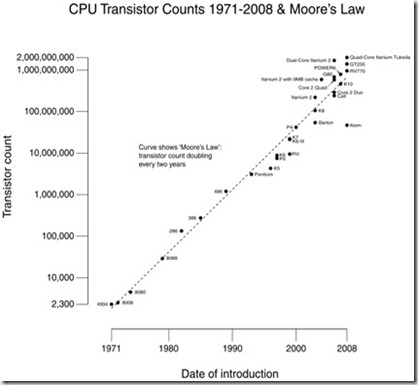

As software developers, we have been spoiled by Moore’s Law (https://www.intel.com/technology/mooreslaw) which states that: “The number of transistors on a chip will double about every 2 years” …

If you look at the chart above, you can certainly see that this has been true over the last 25+ years. Typically, this has shown up as clock-speed increases.

Well, my friends, I’m sorry to say it, but the free lunch is over…and the reason is HEAT! We have now reached a major inflection point in the industry when it comes to taking advantage of further increases in processor clock speed, and the reasons for this are:

- Increased clock speed == increased power usage

- Increased power usage == increased heat output

- Harder to keep the chips cool…

To drive this point home, here is a picture of what I am going to call an “Egg McMotherboard” – and yes, someone has actually documented exactly how you too can fry an egg on your motherboard using a few pennies as a heat-sink…notice also from the picture below - that this is a water-cooled CPU!

So, what’s the answer? Scale out by taking advantage of the now widely available multi-core / many-core processors, along with the new parallel programming capabilities coming in Visual Studio 2010 and the .NET 4.0 Framework.

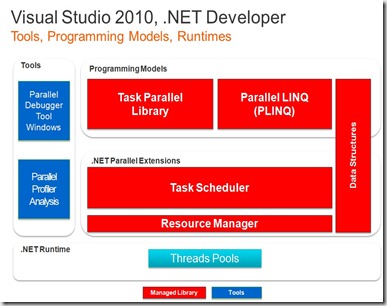

As you can see in the diagram above, Visual Studio 2010 and the .NET 4.0 Framework will provide (2) new programming libraries to help you take advantage of parallel programming to dramatically speed up your applications.

The Task Parallel Library – allows for (2) types of parallelism:

Task Parallelism - “fine grained”

Simultaneously perform multiple independent operations. Divide-and-conquer, tasks, threads, fork/join, futures, etc.

Imperative Data Parallelism

Apply the same operation to common collections/sets in parallel. Looping, data partitioning, reductions, scans, etc.

The Parallel LINQ (PLINQ) Library:

Declarative Data Parallelism

Define what computation needs to be done, without the how. Selections, filters, joins, aggregations, groupings, etc.

Why You Should Care:

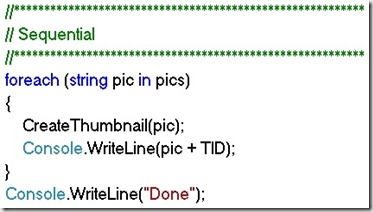

What does all this mean to you? Consider the following code snippet, which represents a traditional (sequential) approach to a common task: creating thumbnails for a series of images. We will then demonstrate how this simple loop can be expressed using each of the (3) new parallel processing metaphors:

Traditional Sequential Approach:

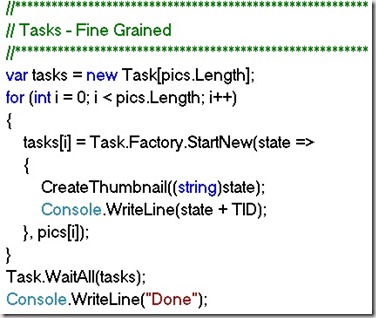
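For readers who cannot see the screenshot, here is a minimal sketch of what such a sequential loop might look like. It is not the code from the image: `MakeThumbnail` is a hypothetical stand-in that only builds a name, so the sketch runs without any image files or imaging libraries.

```csharp
using System;
using System.Collections.Generic;

public static class SequentialSketch
{
    // Hypothetical stand-in for the real image work (load, resize, save).
    // It only derives a thumbnail name so the sketch needs no image files.
    public static string MakeThumbnail(string fileName)
    {
        return "thumb_" + fileName;
    }

    // Processes the files one at a time, in order, on the calling thread.
    public static List<string> ProcessAll(IEnumerable<string> files)
    {
        var thumbnails = new List<string>();
        foreach (string file in files)
        {
            thumbnails.Add(MakeThumbnail(file));
        }
        return thumbnails;
    }

    public static void Main()
    {
        var files = new[] { "a.jpg", "b.jpg", "c.jpg" };
        foreach (string thumbnail in ProcessAll(files))
        {
            Console.WriteLine(thumbnail);
        }
    }
}
```

Only one core is ever busy here, no matter how many the machine has, which is the baseline the parallel versions are measured against.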

Parallel Tasks:

Below is the same processing loop – but slightly modified to take advantage of the new Tasks API in the .NET 4.0 framework:

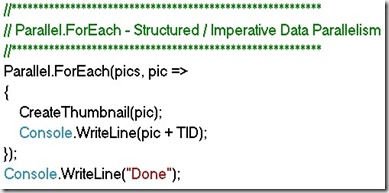
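As a rough illustration of the Tasks approach (a sketch, not the code in the screenshot), the loop below starts one task per file with `Task.Factory.StartNew` and then waits for all of them. `MakeThumbnail` is again a hypothetical placeholder for the real image work.

```csharp
using System;
using System.Threading.Tasks;

public static class TaskSketch
{
    // Hypothetical stand-in for the real image work (load, resize, save).
    public static string MakeThumbnail(string fileName)
    {
        return "thumb_" + fileName;
    }

    // Starts one task per file, waits for all of them, and collects the results.
    public static string[] ProcessAll(string[] files)
    {
        var tasks = new Task<string>[files.Length];
        for (int i = 0; i < files.Length; i++)
        {
            // Copy to a local so each lambda captures its own file name,
            // not the shared loop variable.
            string file = files[i];
            tasks[i] = Task.Factory.StartNew(() => MakeThumbnail(file));
        }

        Task.WaitAll(tasks); // blocks until every task has finished

        var results = new string[tasks.Length];
        for (int i = 0; i < tasks.Length; i++)
        {
            results[i] = tasks[i].Result;
        }
        return results;
    }

    public static void Main()
    {
        foreach (string thumbnail in ProcessAll(new[] { "a.jpg", "b.jpg", "c.jpg" }))
        {
            Console.WriteLine(thumbnail);
        }
    }
}
```

The tasks may run in any order, but the results are read back by index, so the returned array lines up with the input array.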

Imperative Data Parallelism:

The code below illustrates how you can “Parallelize” your FOR / FOREACH loops to express common imperative data-oriented operations. Note how this is nearly as easy as putting Parallel. in front of your ForEach: the collection and the loop body (as a delegate) are passed to Parallel.ForEach:

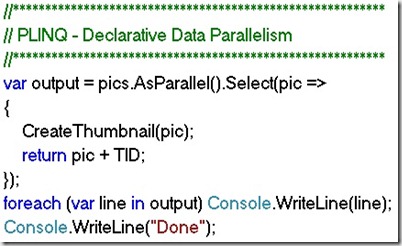
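A minimal sketch of the same idea in C#, assuming the loop body is independent per file. `MakeThumbnail` is a hypothetical placeholder, and the `ConcurrentDictionary` is my choice for collecting results safely from several threads; the screenshot may do this differently.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class ParallelLoopSketch
{
    // Hypothetical stand-in for the real image work (load, resize, save).
    public static string MakeThumbnail(string fileName)
    {
        return "thumb_" + fileName;
    }

    // Runs the loop body for each file, letting the runtime spread
    // iterations across the available cores.
    public static ConcurrentDictionary<string, string> ProcessAll(IEnumerable<string> files)
    {
        // A thread-safe collection, because iterations may run concurrently.
        var results = new ConcurrentDictionary<string, string>();
        Parallel.ForEach(files, file =>
        {
            results[file] = MakeThumbnail(file);
        });
        return results;
    }

    public static void Main()
    {
        var files = new[] { "a.jpg", "b.jpg", "c.jpg" };
        var results = ProcessAll(files);
        // Print in input order; the iterations themselves ran in no fixed order.
        foreach (string file in files)
        {
            Console.WriteLine(results[file]);
        }
    }
}
```

`Parallel.ForEach` returns only after all iterations have completed, so the dictionary is fully populated when `ProcessAll` returns.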

PLINQ:

PLINQ is an implementation of LINQ-to-Objects that executes queries in parallel. The concept is that you express what you want to accomplish, rather than how you want to accomplish it. Note that this has minimal impact on existing LINQ queries…the only difference is adding an AsParallel() call on the data source, ahead of the Select…How cool is that?

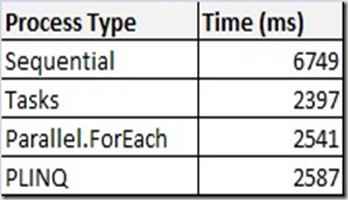
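Here is a small sketch of a PLINQ query along those lines, again with a hypothetical `MakeThumbnail` standing in for the real work. The `AsOrdered()` call is my addition: without it, PLINQ does not promise that results come back in source order.

```csharp
using System;
using System.Linq;

public static class PlinqSketch
{
    // Hypothetical stand-in for the real image work (load, resize, save).
    public static string MakeThumbnail(string fileName)
    {
        return "thumb_" + fileName;
    }

    // Same shape as an ordinary LINQ query; AsParallel() opts in to
    // parallel execution and AsOrdered() preserves the source order.
    public static string[] ProcessAll(string[] files)
    {
        return files
            .AsParallel()
            .AsOrdered()
            .Select(file => MakeThumbnail(file))
            .ToArray();
    }

    public static void Main()
    {
        foreach (string thumbnail in ProcessAll(new[] { "a.jpg", "b.jpg", "c.jpg" }))
        {
            Console.WriteLine(thumbnail);
        }
    }
}
```

If order does not matter for your workload, dropping `AsOrdered()` gives the runtime more freedom in how it merges results.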

And the results:

I ran each of these on my dual-core Dell 820 laptop with Windows 7 Ultimate – here are the results:

Note that each one of the (3) parallel methods reduced the processing time to LESS THAN HALF of the time it took to process sequentially – WITH MINIMAL CHANGES TO THE CODE!

QUAD Core Anyone?

I happen to have a Quad-Core workstation at home and wanted to take my parallel processing tests one step further, to see how the relative performance gains stacked up across dual and quad-core machines…

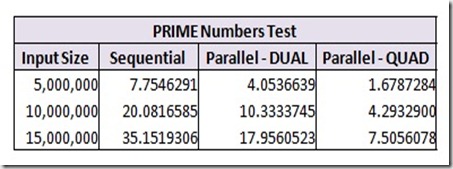

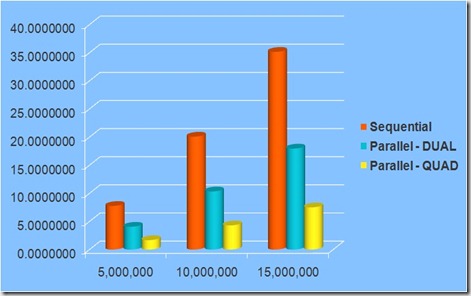

For this test, I chose a different problem example: measuring how much time it takes to calculate all the prime numbers for sets of 5, 10, and 15 million, across each type of processor.

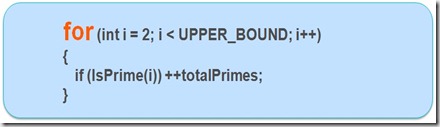

Here is the sequential FOR loop that was the baseline:

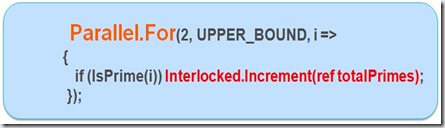
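For reference, a sequential prime count could be sketched as below. The trial-division `IsPrime` helper is an assumption on my part; the screenshot may use a different primality test.

```csharp
using System;

public static class SequentialPrimes
{
    // Simple trial division; assumed for illustration only.
    public static bool IsPrime(int n)
    {
        if (n < 2) return false;
        if (n % 2 == 0) return n == 2;
        // Use long for d * d so the comparison cannot overflow.
        for (int d = 3; (long)d * d <= n; d += 2)
        {
            if (n % d == 0) return false;
        }
        return true;
    }

    // Counts the primes in [2, limit] with a plain for loop on one thread.
    public static int CountPrimes(int limit)
    {
        int count = 0;
        for (int i = 2; i <= limit; i++)
        {
            if (IsPrime(i)) count++;
        }
        return count;
    }

    public static void Main()
    {
        Console.WriteLine(CountPrimes(100)); // prints 25
    }
}
```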

And here is the modified code to take advantage of parallel processing. Note how the loop also requires special handling to make sure that the shared variable is protected on each iteration: the Interlocked functions provide a simple mechanism for synchronizing access to a variable that is shared by multiple threads. Each Interlocked operation is atomic with respect to calls to other Interlocked functions.
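A sketch of how such a loop might look, assuming it uses `Parallel.For` together with `Interlocked.Increment` (the text only says "interlocked functions", so the exact calls are my guess). The `IsPrime` helper is the same assumed trial-division test as before.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class ParallelPrimes
{
    // Simple trial division; assumed for illustration only.
    public static bool IsPrime(int n)
    {
        if (n < 2) return false;
        if (n % 2 == 0) return n == 2;
        for (int d = 3; (long)d * d <= n; d += 2)
        {
            if (n % d == 0) return false;
        }
        return true;
    }

    // Counts the primes in [2, limit]; iterations run on multiple threads.
    public static int CountPrimes(int limit)
    {
        int count = 0;
        // The upper bound of Parallel.For is exclusive, hence limit + 1.
        Parallel.For(2, limit + 1, i =>
        {
            if (IsPrime(i))
            {
                // A plain count++ is a read-modify-write and can lose updates
                // when two threads interleave; Interlocked makes it atomic.
                Interlocked.Increment(ref count);
            }
        });
        return count;
    }

    public static void Main()
    {
        Console.WriteLine(CountPrimes(100)); // prints 25
    }
}
```

Every prime found touches the same shared counter, which adds some contention. `Parallel.For` also has an overload with thread-local state that lets each thread keep its own subtotal and combine them at the end, which may scale better when matches are frequent.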

Here are the results:

And a chart that shows just how dramatic an effect Parallel processing in the .NET 4.0 framework really is:

Note how the scalability is almost linear: using parallel processing techniques on a dual-core machine takes roughly 1/2 the processing time of traditional programming methods, and running the same code on a quad-core machine takes roughly 1/4 of the time!

I had the chance to present this topic recently at the Windows 7 / Windows Server 2008 R2 event in Ft. Lauderdale, Florida. I am convinced that this will be a real game-changer for .NET Architects and Developers who are looking to Do More With Less in the new economy…You can download my slides and demos for this talk from my SkyDrive folder at:

https://cid-e80ea9288abd4452.skydrive.live.com/browse.aspx/Parallelism

Enjoy!
