Telemetry my dear Watson - don't leave your bot unmonitored

Here's a recent example of why it's important to keep tabs on your chatbot conversations. Once bots are out there in the wild, people will try to break them, whether on purpose, out of curiosity or by accident. Whilst you can add buttons and UI elements to your conversations, you most likely want to allow free text for natural language at some point. Language Understanding Intelligent Service (LUIS.ai) makes this easy to do, allowing you to resolve the intent from the multitude of ways a user can ask a question or give a response.

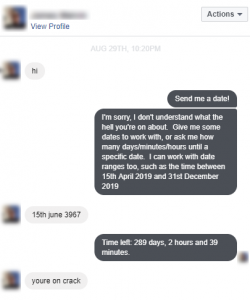

The How Long Have I Got Bot was the first bot I built using the Bot Framework, shortly after it was announced in preview at //Build 2016. It's a low-value bot that doesn't do anything but calculate the time between now and a future (or past) date. It's useful for working out how many days you've got left until Christmas, a birthday etc., and that's pretty much it. I used this sample bot to learn about the BotBuilder SDK and to explore how channels behaved differently. I enabled Application Insights telemetry so that I could monitor the bot once it was public. Roll on over a year and it's still out there being hammered by random folk, largely on Facebook Messenger. Within App Insights, I track (anonymously) the questions that are asked of the bot and the responses the bot has given. Recently, one conversation on the Facebook Messenger channel stood out - here's a screenshot of the actual conversation dialog between the user and the bot:

So whilst the bot user's response did make me chuckle, I was curious as to what had actually happened here. The user had requested a future date of 15th June 3967, pretty far out - but a valid request all the same. I had updated the bot back in May, shortly after this announcement: https://blog.botframework.com/2017/05/08/luis-date-time-entities/, to make date handling much simpler by using the builtin.datetimeV2 entity to do the hard work of date resolution. So I wondered how this could happen. It was time to test the LUIS.ai model and find out what was going on inside the date entity for dates with outlier year values.

Here's what happened:

Notice in the entity detection that the year falls outside the [$datetimeV2] entity for both 31st December 1899 and 1st January 3000. It turns out that the LUIS builtin.datetimeV2 range runs from 1st January 1900 to 31st December 2099. For any date outside that range the year is ignored, so LUIS returns an ambiguous date with more than one candidate value, and my code was always using the latest one (in this case defaulting to the future year 2018). So I've now added the min and max datetimeV2 thresholds to the documentation: here.
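
One way to guard against this in bot code is to check the raw utterance for a year outside that range before trusting the resolved date. The following is a minimal Python sketch, not the code the bot actually runs: the function names are hypothetical, the 1900 and 2099 bounds are simply the values observed above, and the resolution values are assumed to be the usual datetimeV2 list of `timex`/`type`/`value` entries.

```python
import re
from datetime import date

# Bounds observed in this post for builtin.datetimeV2 (an assumption, not an SDK constant).
MIN_YEAR, MAX_YEAR = 1900, 2099


def out_of_range_years(utterance):
    """Return any 4-digit numbers in the utterance that fall outside MIN_YEAR..MAX_YEAR."""
    years = [int(m) for m in re.findall(r"\b\d{4}\b", utterance)]
    return [y for y in years if not MIN_YEAR <= y <= MAX_YEAR]


def pick_date(utterance, resolution_values):
    """Return the latest resolved date, or None when the year cannot be trusted."""
    if out_of_range_years(utterance):
        # LUIS would have dropped the year, so the candidates are misleading.
        return None
    dates = [
        date.fromisoformat(v["value"])
        for v in resolution_values
        if v.get("type") == "date"
    ]
    return max(dates, default=None)


# Ambiguous candidates of the kind returned when no usable year is detected.
candidates = [
    {"timex": "XXXX-06-15", "type": "date", "value": "2017-06-15"},
    {"timex": "XXXX-06-15", "type": "date", "value": "2018-06-15"},
]
print(pick_date("how long until 15th June 3967", candidates))  # None
print(pick_date("how long until 15th June", candidates))       # 2018-06-15
```

When `pick_date` returns `None`, the bot can ask the user for a date it is able to handle instead of silently answering for the wrong year. The regular expression is deliberately crude: any four-digit number outside the range is treated as a year, so a real bot may want something stricter.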

If you're building a bot for your business or brand, I recommend you monitor your bot's conversations using Application Insights. To help mitigate these types of language understanding challenges, I'd take a look at the Text Analytics Cognitive Service, which you can use to automatically parse any phrase that's given to your bot, determine whether its sentiment is positive or negative, and extract key phrases. There are plenty of samples out there that show you how to do this from a bot, such as this article by one of our MVPs, Robin Osborne: https://www.robinosborne.co.uk/2017/03/07/sentiment-analysis-using-microsofts-cognitive-services/.
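
As a rough illustration of the kind of anonymous question-and-response tracking described earlier, here is a small Python sketch. It is not the bot's actual code: the event name, property names, salt and `send_event` callback are all hypothetical, and `send_event` stands in for whatever custom-event call your Application Insights SDK provides.

```python
import hashlib


def build_turn_event(user_id, channel, question, answer, salt):
    """Build custom-event properties for one bot turn without storing the raw user ID."""
    # A salted one-way hash lets turns from the same user be grouped
    # without recording who that user is.
    digest = hashlib.sha256((salt + user_id).encode("utf-8")).hexdigest()
    return {
        "user": digest[:16],
        "channel": channel,
        "question": question,
        "answer": answer,
    }


def track_turn(send_event, **turn):
    """Send one 'BotTurn' event through the supplied telemetry callback."""
    send_event("BotTurn", build_turn_event(**turn))


# Usage with an in-memory sink; a real bot would pass a wrapper around its telemetry client.
sent = []
track_turn(
    lambda name, props: sent.append((name, props)),
    user_id="facebook-user-123",
    channel="facebook",
    question="how long until 15th June 3967",
    answer="(the bot's reply)",
    salt="replace-with-a-secret",
)
print(sent[0][0], sent[0][1]["channel"])
```

Bear in mind that hashing the user ID only anonymises the identifier. The free text a user types can still contain personal details, so it is worth deciding how long such events are kept.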