Process of Managing Risk

One of Information Security’s core functions is managing information security risk. There is a lot of content on “risk management” from both academia and industry that you can easily find on the internet. While we don’t subscribe to any one methodology, we have taken an approach I’ve seen many organizations take: adopting pieces of various existing models (customized as needed, of course) to produce the best fit for the Microsoft operating environment.

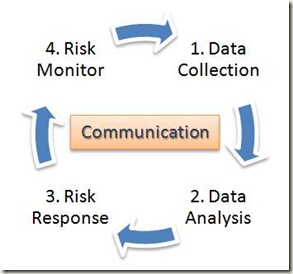

At a high level, our process breaks down into four phases:

- Data Collection

- Data Analysis

- Risk Response

- Risk Monitor

- Data Collection: For us, as for most Information Security organizations, risk management is a data-driven analytical activity in which the most crucial ingredient is information. Much effort goes into obtaining timely, relevant, and accurate information. We need infrastructure in place to capture that information, as well as defined processes for fetching specific information on demand. Information can come from many sources. For example, we might find emerging risks by looking at industry trends, while automated sensors such as network intrusion detection systems can tell us about risks in our environment. Streamlined processes for users to submit information may surface process, physical, or other risks that automated scanners do not easily capture. At other times, our team conducts direct assessments of systems or processes that yield detailed risk information. In summary, we try to gather data from the past (historical incident data), the present (current trends), and the future (upcoming changes in the environment).

- Data Analysis: Once data is collected, appropriate analysis must be conducted to evaluate and prioritize risk. Disparate datasets must be normalized so that they can be correlated and aggregated. When data is unavailable, reasonable assumptions must be made. With normalized data and assumptions in hand, you need to decide which model to apply. Deriving models for objective and consistent analysis can be a daunting task. Organizations must strike a balance between too much analysis and too little. I have seen organizations get so bogged down in analysis that they can never make a decision or act, because the data or model is never “detailed enough or good enough.” We call this analysis paralysis. At the other extreme, I have seen organizations essentially skip analysis because it takes time. When organizations skip analysis, they can prioritize risk incorrectly (making decisions based on emotion rather than fact), misallocate resources, and lose credibility. Organizations must understand the questions they are trying to answer and identify up front the level of detail required. Qualitative risk models are fast and easy and suit organizations that require less analysis. Quantitative risk models take more time and work but suit organizations that need more detail when making decisions. Each organization needs to understand what it requires and find the right mix of the two approaches. The appropriate model may even vary between different parts of the same company. Data analysis is an area we plan to investigate further as we continue to make our approach more robust based on our experience so far.

- Risk Response: Our end objective is to appropriately manage the risks we identify. Once the data is captured and analyzed, appropriate risk controls must be derived and proposed. In this stage, it is absolutely imperative to keep business objectives at the forefront. A control proposed to a business owner must account for those objectives and be cost-effective. It makes no sense to implement a control that costs more than the largest foreseeable impact of the risk materializing. It’s also important for us to keep in mind that a mitigating control is not always necessary. Sometimes the best response is to transfer the risk (e.g., through a legal disclaimer or insurance) or to remove the risk-bearing system altogether. In other cases, the best approach is simply to acknowledge and accept the risk. Whatever the case, the optimal response can only be determined once the risk is understood.

- Risk Monitor: The final phase of our cyclical process is monitoring the risk after controls are implemented, both to verify that the controls are effective and to detect whether the underlying risk is changing. Depending on the risk type, this may be a manual or an automated process. It is also worth noting that some risks go through several iterations of the cycle before an optimized control is devised to manage them.
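The Data Analysis phase above contrasts fast qualitative models with slower, more detailed quantitative ones. As a minimal illustration (not the model Microsoft uses), the Python sketch below scores the same hypothetical risks both ways: a qualitative likelihood × impact rating on assumed 1-to-5 scales with assumed band cutoffs, and the widely used quantitative formula ALE = SLE × ARO (annualized loss expectancy equals single loss expectancy times annualized rate of occurrence). The `Risk` record, the scales, the thresholds, and every dollar figure are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """A single risk record; all fields, scales, and values here are hypothetical."""
    name: str
    likelihood: int         # qualitative, 1 (rare) to 5 (almost certain)
    impact: int             # qualitative, 1 (negligible) to 5 (severe)
    asset_value: float      # quantitative, dollars at risk
    exposure_factor: float  # fraction of asset value lost per incident (0 to 1)
    annual_rate: float      # annualized rate of occurrence (ARO), incidents per year


def qualitative_rating(risk: Risk) -> str:
    """Fast, coarse model: bucket likelihood x impact (1 to 25) into assumed bands."""
    score = risk.likelihood * risk.impact
    if score >= 15:
        return "High"
    if score >= 6:
        return "Medium"
    return "Low"


def annualized_loss_expectancy(risk: Risk) -> float:
    """Slower, finer model: ALE = SLE x ARO, with SLE = asset value x exposure factor."""
    single_loss_expectancy = risk.asset_value * risk.exposure_factor
    return single_loss_expectancy * risk.annual_rate


# Hypothetical sample risks: (name, likelihood, impact, asset value, exposure factor, ARO).
risks = [
    Risk("Unvetted internal tool", 3, 4, 500_000, 0.2, 0.5),
    Risk("Lost unencrypted laptop", 4, 2, 50_000, 0.5, 1.0),
]

# Both risks land in the same qualitative band; the quantitative model separates them.
for r in sorted(risks, key=annualized_loss_expectancy, reverse=True):
    print(f"{r.name}: {qualitative_rating(r)}, ALE ${annualized_loss_expectancy(r):,.0f}")
```

Both sample risks fall into the same qualitative band, but the quantitative estimate ranks the tool risk higher. That extra resolution is what the additional effort of quantification buys, and it is only as good as the asset values and rates fed into it, which is where the reasonable assumptions mentioned above come in.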
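The Risk Response phase above weighs mitigation, transfer, removal, and acceptance, and rules out any control that costs more than the largest foreseeable impact. One way to make that comparison concrete is the hedged Python sketch below: it discards options whose cost exceeds that ceiling, then picks the option with the lowest total expected annual cost (response cost plus residual expected loss). The `ResponseOption` type, the `choose_response` function, the assumption that all costs are annualized, and the sample figures are hypothetical, not a Microsoft tool or formula.

```python
from dataclasses import dataclass


@dataclass
class ResponseOption:
    """One candidate risk response; all values are hypothetical and annualized."""
    kind: str            # "mitigate", "transfer", "avoid", or "accept"
    description: str
    annual_cost: float   # yearly cost of carrying out the response, in dollars
    residual_ale: float  # expected annual loss that remains after the response


def choose_response(options: list[ResponseOption], max_impact: float) -> ResponseOption:
    """Return the viable option with the lowest response cost plus residual loss.

    Options that cost more than the largest foreseeable impact (max_impact)
    are discarded first, since they can never be cost-effective.
    """
    viable = [o for o in options if o.annual_cost <= max_impact]
    if not viable:
        raise ValueError("no viable response option")
    return min(viable, key=lambda o: o.annual_cost + o.residual_ale)


# Hypothetical options for a single risk with a $50,000 expected annual loss.
options = [
    ResponseOption("accept", "Acknowledge and accept the risk", 0, 50_000),
    ResponseOption("mitigate", "Awareness training for authors", 15_000, 10_000),
    ResponseOption("transfer", "Insurance policy", 30_000, 5_000),
    ResponseOption("avoid", "Remove the risk-bearing system", 120_000, 0),
]

best = choose_response(options, max_impact=100_000)
print(best.kind, "-", best.description)
```

With these made-up numbers, mitigation wins (25,000 total versus 35,000 for transfer and 50,000 for acceptance), and removing the system is excluded because it costs more than the foreseeable impact. Business objectives that resist a dollar figure, such as encouraging innovation, still have to be weighed alongside a calculation like this.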

Communication is a central component of any good risk management process, and that is very much true for us. We need to stay in constant communication with the business, stakeholders, peer security teams, and others to ensure a holistic, ongoing approach to managing information security risk.

One recent example of this process involved a tool repository inside Microsoft’s corporate network. The repository holds tools that Microsoft employees create in their spare time, ranging from day-to-day task automation to plug-ins for various applications we use. The innovation is great, but it creates a potential risk if these tools introduce security vulnerabilities into the Microsoft environment.

As a first step in data collection, our ACE team conducted sample assessments of the tools, as well as of the process for uploading tools to the repository. This data was analyzed to assess the level of risk the tools created, and the risk was then prioritized accordingly. A risk response plan was developed to meet Microsoft’s objective of an environment that encourages innovation and agility in a secure manner. In the end, the risk response focused on raising contextual awareness of secure coding policies and best practices among authors who upload tools to this repository. We’re now looking to implement this control and move into the monitor phase, in which we will track how effectively it maintains our overall objectives for this specific risk.

Throughout all this, we’ve felt that the most challenging and crucial phases are data collection and data analysis (I look forward to discussing these in more detail in later posts). If the data you get isn’t any good, or you don’t carry out the right analysis, your entire risk response could be flawed.

Finally, we’re continually looking to optimize our process, because we believe there is much room for improvement. In fact, I’d love to hear your thoughts on risk management. One of the most enjoyable aspects of my job is the chance to engage with our customers in various ways and share ideas and thoughts. Drop a comment or feel free to send me an email.

-Todd