Microsoft Cloud OS Network Platform (2/2)

In this article I'll continue the story about the COSN Platform, the ultimate solution for service providers who want to offer Azure-like services hosted in a local datacenter. The first post is available here.

High Availability of the COSN Platform

The COSN Platform was designed to be highly available, cost-optimized and scalable. It consists of several major components, and all of them need to be highly available. The overall stability of the COSN Platform is based on several levels of redundancy.
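A rough way to see why several redundancy levels matter: if failures are independent, a tier of n redundant units fails only when all n units fail, and tiers stacked in series (power, storage, network, hosts) multiply. The 99% per-unit figure below is a made-up round number, not a measured value for any COSN Platform component, and real failures are often correlated, so the result is optimistic.

```python
from math import prod

def tier_availability(unit_availability: float, units: int) -> float:
    """Availability of a tier that stays up while at least one of its
    redundant units is up (assumes independent failures)."""
    return 1 - (1 - unit_availability) ** units

def stack_availability(tiers: list[tuple[float, int]]) -> float:
    """Tiers are in series: the platform is up only if every tier is up."""
    return prod(tier_availability(a, n) for a, n in tiers)

# Hypothetical per-unit availability, for illustration only.
single = stack_availability([(0.99, 1), (0.99, 1), (0.99, 1)])
redundant = stack_availability([(0.99, 2), (0.99, 2), (0.99, 2)])
print(f"no redundancy:   {single:.6f}")     # 0.970299
print(f"dual everywhere: {redundant:.6f}")  # 0.999700
```

Under these assumptions, three non-redundant tiers give about 97.0% availability, while duplicating every tier raises it to about 99.97%.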

Server hardware redundancy

We recommend equipping every server with a pair of redundant power supplies (connected to separate power lines), ECC memory and redundant fans. Well-known server hardware brands are preferred, ideally with fast replacement of failed components.

Storage redundancy

If you want to use traditional storage, then we recommend at least a storage system with a pair of redundant controllers, connected to the hosts via pairs of cables with MPIO. RAID10 is preferred for storing VMs, and RAID5/6 for storing backups and library items, with a hot spare disk available. This approach protects against the failure of one controller (including its power supply), an Ethernet/FC cable failure and disk drive failures.
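The RAID recommendation is a trade-off between capacity and write performance. A quick sketch of the usable-capacity arithmetic, assuming equal-size disks, one dedicated hot spare and a hypothetical shelf of 13 drives of 4 TB each:

```python
def raid_usable_disks(level: str, disks: int, hot_spares: int = 1) -> int:
    """Disks' worth of usable capacity in a RAID group.
    Hot spares are set aside first and hold no data."""
    data_disks = disks - hot_spares
    if level == "RAID10":
        if data_disks < 4 or data_disks % 2:
            raise ValueError("RAID10 needs an even number of disks, at least 4")
        return data_disks // 2      # every block is mirrored once
    if level == "RAID5":
        if data_disks < 3:
            raise ValueError("RAID5 needs at least 3 disks")
        return data_disks - 1       # one disk's worth of parity
    if level == "RAID6":
        if data_disks < 4:
            raise ValueError("RAID6 needs at least 4 disks")
        return data_disks - 2       # two disks' worth of parity
    raise ValueError(f"unsupported level: {level}")

disk_tb = 4  # hypothetical drive size
for level in ("RAID10", "RAID5", "RAID6"):
    usable = raid_usable_disks(level, 13) * disk_tb
    print(f"{level}: {usable} TB usable of {13 * disk_tb} TB raw")
# RAID10: 24 TB usable of 52 TB raw
# RAID5: 44 TB usable of 52 TB raw
# RAID6: 40 TB usable of 52 TB raw
```

RAID10 keeps less than half of the raw capacity here, which is the price for better write performance; for backups and library items RAID6 keeps most of the capacity while still surviving two disk failures.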

If you don't have a storage system yet and are at the stage of planning a storage solution for the COSN Platform, then it's a good idea to use Microsoft Software-defined Storage. It is redundant by default.

Whichever approach you choose, remember that storage is the core of the whole COSN Platform, and its availability is critical for the tenants.

Network redundancy

Network connectivity is just as central to the COSN Platform, so you definitely need redundant switches and redundant cable connections between hosts and switches. NIC Teaming is the right approach for connecting Hyper-V hosts to ToR switches, and MPIO is the approach for connecting to an iSCSI-based storage system. A dedicated network adapter for management and monitoring is recommended.

Hyper-V Host redundancy

Any server can fail; nobody is protected from this. That's why Hyper-V clustering is required: it ensures that all running VMs restart on a healthy host if their current host fails. It also lets you reboot any host in the cluster without downtime, because all running VMs are seamlessly moved to other hosts in the cluster using Live Migration.
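Automatic restart only works if the surviving hosts have room for the failed host's VMs. A minimal N+1 sizing check, assuming memory is the limiting resource and ignoring the parent partition reserve and whether each individual VM fits on a single host (both simplifications); the host sizes are hypothetical:

```python
def survives_host_failures(host_memory_gb: list[int], vm_memory_gb: int,
                           failures: int = 1) -> bool:
    """True if the total VM memory still fits after losing the `failures`
    largest hosts (worst case), so every VM can restart elsewhere."""
    if failures >= len(host_memory_gb):
        return False
    remaining = sorted(host_memory_gb)[:len(host_memory_gb) - failures]
    return vm_memory_gb <= sum(remaining)

# Four hypothetical 256 GB hosts: 768 GB remain after one failure.
print(survives_host_failures([256] * 4, 700))  # True
print(survives_host_failures([256] * 4, 800))  # False
```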

I recommend using three separate Hyper-V clusters:

- Management cluster. It runs all management VMs and VMs with internal services, such as AD, VMM, WAP etc.

- Compute cluster. It runs all tenant VMs. Windows Server 2012 R2 Hyper-V supports a maximum of 64 hosts per cluster, so a big environment may need several compute clusters. You may also need several compute clusters if you use different types of server hardware for tenant workloads.

- Network cluster. This is a small but very important cluster: it hosts the NVGRE network gateway VMs. If you use traditional VLANs and don't plan to use NVGRE, then you don't need it. A network cluster usually consists of 2-3 hosts running 2+ VMs with RRAS installed. You need to separate it from the compute cluster, because running VMs that use NVGRE and network gateway VMs on the same host is not supported. It's also not a good idea to combine the network cluster with the management cluster, because from a logical perspective it's better to separate management VMs from the VMs that tenant VMs need in order to function. If the management cluster fails, Azure Pack won't work, but at least tenant VMs will still be able to access the Internet and communicate with each other.
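Two of the rules above lend themselves to a quick automated check: the 64-host limit per cluster (with one group of clusters per hardware type) and keeping gateway VMs off hosts that run NVGRE tenant VMs. A small sketch; the hardware type names, host names and role labels are hypothetical, standing in for whatever inventory or CMDB export you actually have:

```python
from math import ceil

# Windows Server 2012 R2 Failover Clustering limit, as stated above.
MAX_HOSTS_PER_CLUSTER = 64

def compute_clusters(hosts_by_hardware: dict[str, int]) -> dict[str, int]:
    """Compute clusters needed per hardware type: each type gets its own
    homogeneous clusters, each within the per-cluster host limit."""
    return {hw: ceil(n / MAX_HOSTS_PER_CLUSTER)
            for hw, n in hosts_by_hardware.items() if n > 0}

def unsupported_hosts(placement: dict[str, set[str]]) -> list[str]:
    """Hosts that run both a network gateway VM and NVGRE tenant VMs,
    the combination described above as not supported."""
    return sorted(host for host, roles in placement.items()
                  if {"gateway", "nvgre-tenant"} <= roles)

print(compute_clusters({"gen-a": 150, "gen-b": 40}))  # {'gen-a': 3, 'gen-b': 1}
print(unsupported_hosts({
    "net-01": {"gateway"},
    "net-02": {"gateway"},
    "cmp-01": {"nvgre-tenant"},
    "cmp-02": {"nvgre-tenant", "gateway"},  # misplaced gateway VM
}))                                                   # ['cmp-02']
```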

Management components redundancy

Even if you run all management VMs on a Hyper-V cluster, you can still have significant downtime, because VMs need some time to restart on a healthy host after their current host fails. Some operations can also be interrupted or corrupted during this process (SQL Server transactions, for example). That's why we recommend using failover and high availability technologies for all management stack components, to add another level of redundancy.

Different management stack components of the COSN Platform use different methods for high availability. I can group them into 4 types:

- Failover Clustering. This type of high availability is based on Windows Server Failover Clustering component.

- Network Load Balancing. High availability based on balancing network traffic across several nodes. It is used for stateless services. You can use either the free Microsoft NLB or a commercial load balancer such as Citrix NetScaler or Kemp LoadMaster.

- Service specific. High availability that uses technologies specific to the service. For example, if you need highly available Active Directory Domain Services, you deploy an additional domain controller, configure replication and reconfigure DNS on member servers. It doesn't use Failover Clustering or NLB for high availability.

- Highly Available Backend. Some services require a SQL Server backend. You can choose between several high availability options for SQL Server, such as AlwaysOn Failover Cluster Instances or AlwaysOn Availability Groups.
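Why NLB suits stateless services: any healthy node can answer any request, so a failed node only shrinks the pool. The toy round-robin dispatcher below illustrates that idea only; it is not how Microsoft NLB (which filters traffic on every host) or a commercial appliance is implemented, and the node names are hypothetical.

```python
from itertools import cycle

class RoundRobinBalancer:
    """Toy round-robin balancer for stateless front ends: each request
    goes to the next healthy node in a fixed rotation."""

    def __init__(self, nodes: list[str]) -> None:
        self.nodes = list(nodes)
        self.healthy = set(nodes)
        self._ring = cycle(self.nodes)

    def mark_down(self, node: str) -> None:
        self.healthy.discard(node)

    def mark_up(self, node: str) -> None:
        if node in self.nodes:
            self.healthy.add(node)

    def pick(self) -> str:
        # Look at each node at most once per request.
        for _ in range(len(self.nodes)):
            node = next(self._ring)
            if node in self.healthy:
                return node
        raise RuntimeError("no healthy nodes")

lb = RoundRobinBalancer(["wap-01", "wap-02", "wap-03"])
lb.mark_down("wap-02")  # simulate a failed node
print([lb.pick() for _ in range(4)])  # ['wap-01', 'wap-03', 'wap-01', 'wap-03']
```

Stateful services cannot be spread like this, because a request may depend on data held by one particular node; that is where Failover Clustering or a service-specific method comes in.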

Here is the list of the main COSN Platform components and their high availability technologies:

| Component | High Availability Technology |
|---|---|
| Active Directory Domain Services | Service Specific |
| | Failover Clustering + Highly Available Backend |
| SPF | NLB + Highly Available Backend |
| | NLB + Highly Available Backend |
| SMA | NLB + Highly Available Backend |
| Network Gateway (RRAS) | Failover Clustering |
| | Service Specific + Highly Available Backend |

As you can see, the COSN Platform lets you achieve multi-level redundancy that meets modern 24/7/365 requirements. We give you flexible options, so you can choose the level of high availability that suits your customers' needs.

Microsoft Software-defined Storage in detail

The recommended storage option for the COSN Platform is Microsoft Software-defined Storage, which I also mentioned in the previous post.

It is based on Windows Server 2012 R2 Storage Spaces and Scale-out File Servers (SOFS). With this approach you need 2+ JBODs with SAS disks, connected to 2+ Scale-out File Servers.

Each JBOD enclosure is connected to each Scale-out File Server with a SAS cable. JBOD enclosures usually have at least 2 external SAS ports, so if you have only 2 SOFSs, you can connect the JBODs to the SOFSs directly. In a bigger deployment with 4+ JBODs you'll need more SOFSs, plus a pair of SAS switches to connect all components together.
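The direct-attach rule of thumb boils down to a port count: every JBOD must reach every SOFS node. A sketch, assuming one SAS path per JBOD/server pair (designs with two paths per pair for MPIO need twice the ports) and hypothetical port numbers:

```python
def sas_topology(jbods: int, sofs_nodes: int,
                 jbod_ports: int, node_hba_ports: int) -> str:
    """Direct attach works only if each JBOD has a free port per SOFS node
    and each node has an HBA port per JBOD; otherwise use SAS switches."""
    if jbod_ports >= sofs_nodes and node_hba_ports >= jbods:
        return f"direct attach: {jbods * sofs_nodes} SAS cables"
    return "use a pair of SAS switches"

print(sas_topology(jbods=2, sofs_nodes=2, jbod_ports=2, node_hba_ports=4))
# direct attach: 4 SAS cables
print(sas_topology(jbods=4, sofs_nodes=4, jbod_ports=2, node_hba_ports=4))
# use a pair of SAS switches
```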

For storing VMs, we recommend Mirrored Storage Spaces with SAS SSDs (20%) and SAS NL disk drives (80%). This ratio is optimal for Storage Tiering: such storage is fast, cost-efficient and high-capacity. For storing backups and library items, you can use Parity Storage Spaces with SAS NL disk drives. SATA drives are not supported in this configuration, because they are single-ported and can connect to only one server at a time, while we have 2 SOFSs.
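A quick sizing sketch for a tiered mirrored space: with mirroring, every tier stores each slab once per copy, so usable capacity per tier is raw capacity divided by the number of copies. It assumes the 20/80 split refers to raw capacity and ignores pool metadata, space reserved for rebuilds and write-back cache; the pool sizes are hypothetical.

```python
def tiered_mirror_space(raw_ssd_tb: float, raw_hdd_tb: float,
                        copies: int = 2) -> dict[str, float]:
    """Usable capacity of a mirrored, tiered space (2-way mirror by default)."""
    ssd = raw_ssd_tb / copies
    hdd = raw_hdd_tb / copies
    total = ssd + hdd
    return {"ssd_tb": ssd, "hdd_tb": hdd, "total_tb": total,
            "ssd_share": ssd / total if total else 0.0}

# Hypothetical pool: 16 TB of SSD and 64 TB of NL-SAS raw capacity.
print(tiered_mirror_space(raw_ssd_tb=16, raw_hdd_tb=64))
# {'ssd_tb': 8.0, 'hdd_tb': 32.0, 'total_tb': 40.0, 'ssd_share': 0.2}
```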

We also recommend using RDMA to dramatically increase the performance of the network connection between Scale-out File Servers and Hyper-V hosts. SMB over RDMA is called SMB Direct. It requires special network cards, but it's definitely worth it, because it eliminates the network throughput and latency bottleneck between SOFSs and Hyper-V hosts.

There are 2 different Ethernet-based protocols for RDMA:

- iWARP. It is switch independent and supports routing of RDMA traffic. For example, Chelsio network cards support iWARP.

- RoCE. It is not switch-independent, so you also need compatible ToR switches, and they require additional configuration. Routing of RDMA traffic is not available with the original version of RoCE, but it is usually not needed in the COSN Platform. For example, Mellanox network cards and switches can use RoCE with the COSN Platform. Mellanox has published benchmark results showing a performance advantage of RoCE compared to iWARP.

A configuration of 2 JBODs with 2-way Mirrored Storage Spaces and 2 Scale-out File Servers tolerates one disk drive failure per pool, SAS cable failures and the failure of one SOFS. If you add a 3rd JBOD enclosure and connect it to both SOFSs, you'll be able to use Enclosure Awareness, which lets the storage survive the loss of a whole JBOD. So there is no single point of failure here: everything is redundant.

As an evolution of the Microsoft Software-defined Storage approach, Windows Server 2016 Datacenter will support a new technology called "Storage Spaces Direct". It lets you build storage from local disks installed in the servers, with data replicated across several servers over the LAN.

I think it will be a good approach for backups, library items and other workloads that are not performance-sensitive. But the traditional Storage Spaces approach based on JBODs and SOFSs will still be the king for storing VMs because of its performance/availability/cost-efficiency balance.

Deployment of COSN Platform

As you can see, the COSN Platform is complex, especially if you need to deploy it in production with additional levels of redundancy and high availability.

Microsoft provides you with several deployment options:

- Manual installation

- PowerShell Deployment Toolkit (PDT)

- Service Provider Operational Readiness Kit (SPORK)

Manual Installation

This one is obvious: read 100+ TechNet pages, install each component using its documentation, configure everything, and you are done. This is a long and complex path, but we recommend going through it to get a full understanding of how the COSN Platform works. Otherwise it will be hard for you to operate what is essentially a black box. I recommend reading the IaaS Product Line Architecture documents, which also include detailed instructions for the fabric deployment.

This is an overview of a manual installation:

- Plan everything. This is super important.

- Connect all cables

- Deploy new AD or use existing

- Configure storage

- Install Hyper-V on hosts

- Create a Hyper-V management cluster and connect shared storage

- Deploy highly available SQL Server for management databases

- Install VMM and VMM Library

- Install SPF

- Install WAP

- Install SMA, WSUS, WDS and other additional components

- Configure networking in VMM

- Deploy Hyper-V network cluster

- Deploy network gateways

- Deploy RDS services for console access to VMs in WAP

- Create Clouds in VMM

- Connect VMM Clouds to WAP

- Configure WAP

- Create VM Templates

- Deploy additional WAP Services if needed

- Publish WAP Tenant Portal, Tenant Authentication and Public Tenant API and other required services to the Internet.
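One way to keep a manual plan consistent is to write the steps down with their prerequisites and let a topological sort produce a valid order. The dependency map below is an illustrative, simplified reading of part of the list above, not an official dependency chart; Python's `graphlib` (3.9+) raises `CycleError` if the plan contains a circular dependency.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each step lists the steps it needs first.
steps = {
    "Configure storage": {"Connect all cables"},
    "Install Hyper-V on hosts": {"Connect all cables"},
    "Create management cluster": {"Install Hyper-V on hosts",
                                  "Configure storage", "Deploy AD"},
    "Deploy HA SQL Server": {"Create management cluster"},
    "Install VMM": {"Deploy HA SQL Server"},
    "Install SPF": {"Install VMM"},
    "Install WAP": {"Deploy HA SQL Server"},
    "Create Clouds in VMM": {"Install VMM"},
    "Connect VMM Clouds to WAP": {"Create Clouds in VMM",
                                  "Install SPF", "Install WAP"},
}

# One valid order; several orders can satisfy the same dependencies.
order = list(TopologicalSorter(steps).static_order())
for number, step in enumerate(order, 1):
    print(number, step)
```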

It may look easy, but it can take several weeks to deploy. It's worth it, though, because you'll understand how the COSN Platform works from the inside.

PowerShell Deployment Toolkit

PowerShell Deployment Toolkit (PDT) is a set of PowerShell-based scripts which can automatically deploy System Center and Windows Azure Pack components using the architecture that you describe in a special XML file.

PDT speeds up the COSN Platform installation process, but it doesn't eliminate the planning and configuration steps: you still need to understand what you are deploying and how. A special GUI tool, PDTGui, can help you create the XML file. Check this video from TechEd to learn more about PDT.
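Because PDT is driven by a declarative file, you can sanity-check the planned layout before running anything. The sketch below flags roles placed on a single server. The XML layout, role names and server names are hypothetical and much simpler than PDT's real variables.xml, so treat it as an illustration of the idea, not a PDT parser.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict

# Simplified, hypothetical layout; the real PDT variables.xml schema differs.
SAMPLE = """
<Installer>
  <Roles>
    <Role Name="VMM Management Server" Server="VMM01" />
    <Role Name="VMM Management Server" Server="VMM02" />
    <Role Name="SPF Server" Server="SPF01" />
    <Role Name="WAP Tenant Site" Server="WAP01" />
    <Role Name="WAP Tenant Site" Server="WAP02" />
  </Roles>
</Installer>
"""

def servers_by_role(xml_text: str) -> dict[str, list[str]]:
    """Group server names by role name."""
    root = ET.fromstring(xml_text.strip())
    roles: dict[str, list[str]] = defaultdict(list)
    for role in root.iter("Role"):
        roles[role.get("Name")].append(role.get("Server"))
    return dict(roles)

def single_points_of_failure(xml_text: str) -> list[str]:
    """Roles assigned to fewer than two distinct servers."""
    return sorted(name for name, servers in servers_by_role(xml_text).items()
                  if len(set(servers)) < 2)

print(single_points_of_failure(SAMPLE))  # ['SPF Server']
```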

Here is an overview of COSN Platform installation using PDT:

- Plan, plan, plan!

- Create variables.xml file for your architecture

- Connect all cables

- Deploy new AD or use existing

- Configure storage

- Install Hyper-V on hosts

- Create a Hyper-V management cluster and connect shared storage

- Run PDT to install COSN Platform components

- Configure networking in VMM

- Deploy Hyper-V network cluster

- Deploy network gateways

- Create Clouds in VMM

- Connect VMM Clouds to WAP

- Configure WAP

- Create VM Templates

- Deploy additional WAP Services if needed

- Publish WAP Tenant Portal, Tenant Authentication and Public Tenant API and other required services to the Internet.

As you can see, this process is a little easier, and it can reduce the deployment time from several weeks to a week or two.

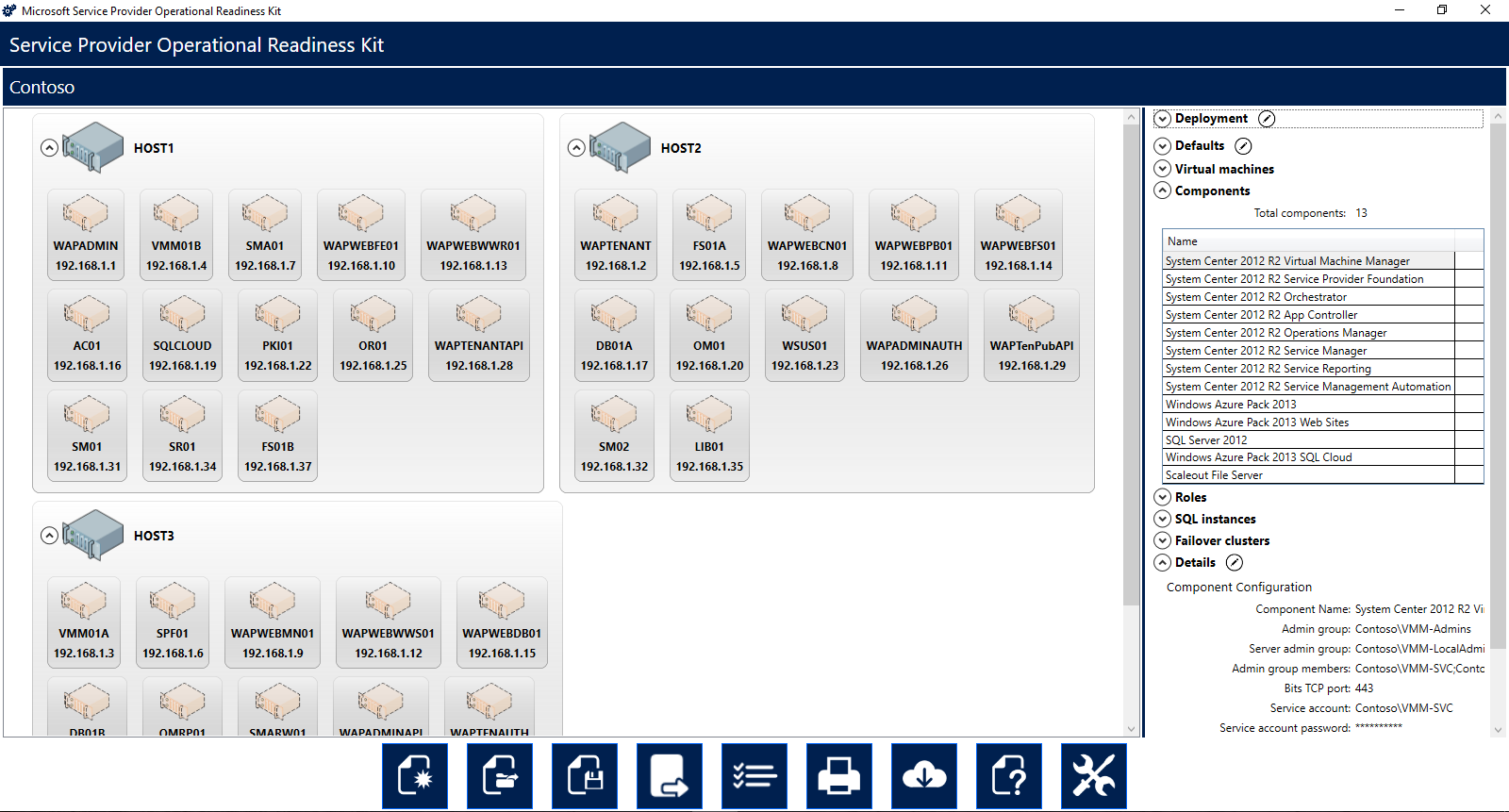

Service Provider Operational Readiness Kit

The Service Provider Operational Readiness Kit (SPORK) is a special tool for service providers that helps you create PDT configuration files. It generates PowerShell scripts based on PDT and creates all configuration files for you. It has several built-in, ready-to-use architecture templates:

- Proof of Concept (POC) templates: use them if you need to deploy the COSN Platform quickly for testing or demo purposes, without high availability (non-production).

- Product Line Architecture (PLA) templates: production-ready templates based on the IaaS PLA, which I mentioned earlier.

Check the video demonstration here.

Compared to PDT, SPORK simplifies the planning process, because you start from a built-in architecture. It also has a GUI, so you don't need additional tools if you are not a fan of the command line. The main advantage of SPORK is that it does a lot of tasks for you, which minimizes human error. Warning: SPORK is not publicly available for download; you need to ask your Microsoft representative to provide the package with software bits and documentation.

My recommendation for service providers: read the documentation, deploy the COSN Platform manually in a lab, and then re-deploy it in production using SPORK. You'll have the experience to operate the COSN Platform, and you'll be sure it is deployed correctly in production.

Server Core or Server with GUI?

As you may already know, there are 4 GUI levels in Windows Server 2012 R2: Server Core (no GUI), Minimal Server Interface, Server with a GUI and Desktop Experience (Windows 8-like GUI).

I recommend using Server Core wherever you can, because it reduces the number of updates you need to install, requires less RAM and disk space, and is more secure, since its attack surface is smaller than in Server with a GUI mode. Server Core is supported for all COSN Platform components except Active Directory Federation Services and SCOM Data Warehouse VMs, so use Server Core on all other VMs.

If you're afraid of Server Core, you can install Windows Server with a GUI, configure everything and then remove the GUI via Server Manager; it only requires a restart. It's also a good idea to deploy a dedicated management VM with the "Server with a GUI" option and install all Windows Server and System Center management tools and consoles on it. All COSN Platform administrators will then be able to manage all platform components from one place, with role-based access policies applied.

Reference architecture

In October 2014 Microsoft and Dell jointly launched a cool thing called "Cloud Platform System" (CPS). This is an in-a-box solution that combines Dell hardware with Microsoft software. You just buy one to four racks fully loaded with Dell hardware, and Microsoft engineers install the COSN Platform on top of it. You have one point of contact for support, and the solution is fully tested by Dell and Microsoft. Check this video for details.

A year later, the CPS lineup was extended with a smaller solution. The original CPS is now called "CPS Premium", and the new light version is named "CPS Standard".

CPS Premium is not available everywhere, and because more than a year has passed, some of its components are now outdated. But I usually use the CPS Premium architecture as a reference. You can deploy the COSN Platform on similar non-Dell hardware using the same architecture principles. So if you are at the deployment stage, spend some time learning how CPS Premium is built from the inside.

Future of COSN Platform

Since Azure Stack was announced last year, I often receive questions about the future of the current COSN Platform based on Azure Pack.

First of all, I want to clarify that the COSN Platform and Azure Pack are not dead. With the release of Azure Stack this year, Microsoft will have 2 separate offers for companies who want to deploy "Azure in their own datacenter":

- A more Azure-consistent but less flexible platform based on Azure Stack, which shares bits with Azure Resource Manager and the new Azure portal (currently in preview).

- A less Azure-consistent but more flexible platform based on Azure Pack. It shares some bits with the current Azure portal in terms of UI, but it is based on Windows Server and System Center technologies, some of which are not available in Microsoft Azure.

Both offers have strong and weak points: Azure consistency is cool, but not for everyone. The COSN Platform has some cool technologies that are very valuable for service providers but not available in Azure. Some of them:

- Generation 2 VMs without legacy virtual hardware (more resource efficient than Generation 1 VMs)

- VHDX disks up to 64 TB

- Console access to VMs

- Multiple network adapters per VM

- Dynamic memory

- Shielded VMs

So the current COSN Platform has its strong points for customers compared to Microsoft Azure (and Azure Stack). The COSN Platform is flexible: you can use any available storage system, traditional VLAN-based isolation of tenant networks, etc. So right now the future of the COSN Platform looks like this:

- COSN Platform will support Windows Server 2016 and System Center 2016. New cool features like Shielded VMs and Storage Spaces Direct can already be implemented in COSN Platform using preview versions of these products.

- Azure Pack continues to evolve. Update Rollup 8 recently added multiple external IP functionality. New functions and fixes will be added with every Update Rollup released in the coming years.

I think that with the release of Windows Server 2016, System Center 2016 and Azure Stack by the end of next year, the ultimate solution will be to deploy the current COSN Platform on the 2016 versions for classic IaaS, Desktop-as-a-Service and Database-as-a-Service, and to deploy an additional environment on Azure Stack for modern Azure-consistent IaaS and PaaS services. Anyway, don't wait: start your journey to the Microsoft Hybrid Cloud with the COSN Platform today!

That's all for today. Thank you for reading; I hope it was valuable for you. Over the next several weeks I plan to publish new blog posts about the Azure Pack tenant experience and functionality extensions, lessons learned from worldwide COSN Platform deployments, how-to guides about Azure and COSN Platform integration, and more. So stay tuned!

Comments

- Anonymous, January 06, 2016: Previously I've mentioned what is Microsoft Cloud OS Network and why it is so important for Microsoft Hybrid Cloud story. Today I'll describe what is "Microsoft Cloud OS Network platform" (or shortly - COSN Platform) - a stack of Microsoft

- Anonymous, February 01, 2016: The comment has been removed

- Anonymous, March 18, 2016: Kirill, how to get SPORK?