MapReduce on 27,000 books using multiple storage accounts and HDInsight

In our previous blog, Preparing and uploading datasets for HDInsight, we showed you some of the important utilities used on the Unix platform for data processing, including GNU Parallel, find, and split, as well as AzCopy for uploading large amounts of data reliably. In this blog, we’ll use an HDInsight cluster to operate on the data we have uploaded. Just to review, here’s what we have done so far:

- Downloaded the ISO image from Gutenberg and copied the content to a local directory.

- Crawled the INDEXES pages and copied the English-only books (zips) using a custom Python script.

- Unzipped all the zip files using GNU Parallel, then combined all the text files and split them into 256 MB chunks using find and split.

- Uploaded the files in parallel using the AzCopy utility.

Map Reduce

MapReduce is the programming pattern for HDInsight, or Hadoop. It has two functions, Map and Reduce. Map takes the code to each of the nodes that contain the data and runs the computation in parallel, while Reduce summarizes the results from the Map functions to do a global reduction.

In this JavaScript word count example, the map function below simply splits the text of a document into an array of words and writes each word to a global context. The map function takes three parameters: key, value, and a global context object. Keys in this case are individual files, while the value is the actual content of the document. The map function is called on every compute node in parallel. As you can see, it writes a key-value pair out to the global context: the word is the key, and the value is 1, since the word was counted once. The output from the mappers will therefore contain many duplicate keys (words).

// Map function in JavaScript
var map = function (key, value, context) {
    // Split the document on any non-letter character.
    var words = value.split(/[^a-zA-Z]/);
    for (var i = 0; i < words.length; i++) {
        // Skip the empty strings produced by consecutive separators.
        if (words[i] !== "") {
            context.write(words[i].toLowerCase(), 1);
        }
    }
};

The reduce function also takes three parameters (key, values, and context) and is called after the map function completes. In this case, it takes the output from all the mappers and sums up all the values for a particular key. In the end, you get a word:count key-value pair for each word. This gives you a good feel for how MapReduce works.

// Reduce function in JavaScript
var reduce = function (key, values, context) {
    var sum = 0;
    // values iterates over every count emitted for this key.
    while (values.hasNext()) {
        sum += parseInt(values.next(), 10);
    }
    context.write(key, sum);
};
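To see how the two functions fit together without a cluster, here is a minimal local sketch. The helpers runLocal and makeIterator, the mock context objects, and the two sample documents are assumptions made up for this illustration. They only imitate the key, value, context interface described above and are not part of HDInsight. A real job runs the mappers on many nodes and shuffles the output between them, while this sketch does everything in one process.

```javascript
// Local simulation of the word count job; no Hadoop required.
// runLocal and makeIterator are hypothetical helpers, not HDInsight APIs.

var map = function (key, value, context) {
    var words = value.split(/[^a-zA-Z]/);
    for (var i = 0; i < words.length; i++) {
        if (words[i] !== "") {
            context.write(words[i].toLowerCase(), 1);
        }
    }
};

var reduce = function (key, values, context) {
    var sum = 0;
    while (values.hasNext()) {
        sum += parseInt(values.next(), 10);
    }
    context.write(key, sum);
};

// Wrap an array in the hasNext()/next() interface that reduce expects.
function makeIterator(items) {
    var index = 0;
    return {
        hasNext: function () { return index < items.length; },
        next: function () { return items[index++]; }
    };
}

// documents: object mapping a file name to its text content.
function runLocal(documents) {
    // Map phase: collect every emitted value under its key (word).
    var grouped = Object.create(null);
    var mapContext = {
        write: function (k, v) {
            if (!grouped[k]) { grouped[k] = []; }
            grouped[k].push(v);
        }
    };
    Object.keys(documents).forEach(function (name) {
        map(name, documents[name], mapContext);
    });

    // Reduce phase: one call per distinct key.
    var counts = Object.create(null);
    var reduceContext = {
        write: function (k, v) { counts[k] = v; }
    };
    Object.keys(grouped).forEach(function (word) {
        reduce(word, makeIterator(grouped[word]), reduceContext);
    });
    return counts;
}

var counts = runLocal({
    "a.txt": "The quick brown fox",
    "b.txt": "the lazy dog and the fox"
});
console.log(counts);
```

The grouping step in the middle of runLocal stands in for the shuffle that Hadoop performs between the map and reduce phases, which is why reduce sees all the values for one word at once.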

To run MapReduce against the dataset we have uploaded, we have to add the blob container to the cluster’s configuration. If you are trying to learn how to create a new cluster, please take a look at this video: Creating your first HDInsight cluster and run samples

HDInsight’s Default Storage Account: Windows Azure Blob Storage

The diagram below explains the difference between HDFS, the distributed file system native to Hadoop, and Azure blob storage. Our engineering team had to do extra work to make the Azure blob storage system work with Hadoop.

The original HDFS uses many local disks on the cluster, while Azure blob storage is a remote storage system for all the compute nodes in the cluster. For beginners, all you have to know is that the HDInsight team has abstracted both systems for you through the HDFS tool. You should use Azure blob storage as the default, since your files will still persist in the remote storage system after you tear down the cluster.

On the other hand, when you tear down a cluster, the content you store in HDFS on the cluster will disappear with it. So, only store temporary data that you don’t mind losing in HDFS, or copy it to your blob storage account before you tear down the cluster.

You can explicitly reference HDFS (local) by using hdfs://, and use asv:/// to reference files in the blob storage system (the default).
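To make the two URI forms concrete, here is a small sketch that splits a storage URI into its parts. The function parseStorageUri is a hypothetical helper written for this post, not something shipped with HDInsight. It assumes that a fully qualified ASV URI has the form asv://container@account/path, and that an empty authority (as in asv:///) means the cluster's default container.

```javascript
// Hypothetical helper: split an hdfs:// or asv:// URI into its parts.
function parseStorageUri(uri) {
    var match = /^(hdfs|asv):\/\/([^\/]*)(\/.*)?$/.exec(uri);
    if (!match) {
        throw new Error("Unrecognized storage URI: " + uri);
    }
    var result = {
        scheme: match[1],
        authority: match[2],      // empty string means "use the default"
        container: null,
        account: null,
        path: match[3] || "/"
    };
    // Assumed layout for ASV: container@account-host
    var at = result.authority.indexOf("@");
    if (result.scheme === "asv" && at !== -1) {
        result.container = result.authority.slice(0, at);
        result.account = result.authority.slice(at + 1);
    }
    return result;
}

var explicit = parseStorageUri("asv://textfiles@gutenbergstore.blob.core.windows.net/");
var defaults = parseStorageUri("asv:///DaVinciAllTopWords");
console.log(explicit.container, explicit.account, explicit.path);
console.log(defaults.authority === "", defaults.path);
```

The first URI names a container and storage account explicitly, while the second leaves the authority empty and so falls back to the default storage configured for the cluster.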

Adding an Additional Azure Blob Storage Container to Your HDInsight Cluster

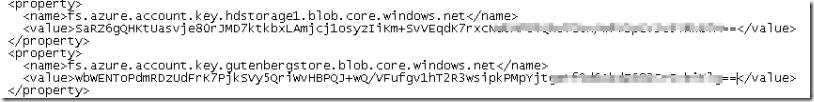

On the head node of the HDInsight cluster, in C:\apps\dist\hadoop-1.1.0-SNAPSHOT\conf\core-site.xml, you need to add:

<property>

<name>fs.azure.account.key.[account_name].blob.core.windows.net</name>

<value>[account-key]</value>

</property>

For example, in my account, I simply copied the default property and added the new name/key pair.

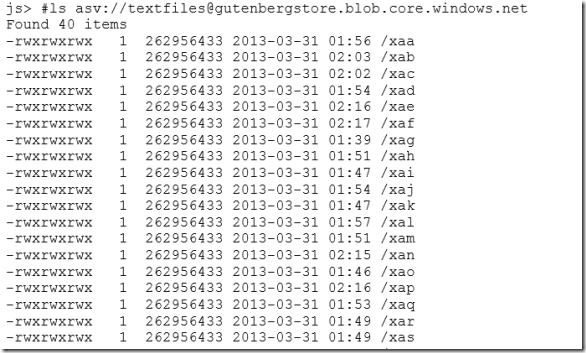
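If you add several storage accounts, typing the property by hand gets error-prone. The sketch below generates the XML fragment from an account name and key. The function names storageKeyProperty and escapeXml are made up for this example, and PLACEHOLDER_KEY stands in for a real account key, which you should never paste into a blog post.

```javascript
// Escape the characters that are unsafe inside XML element text.
function escapeXml(text) {
    return String(text)
        .replace(/&/g, "&amp;")
        .replace(/</g, "&lt;")
        .replace(/>/g, "&gt;");
}

// Hypothetical helper: build the <property> block for one storage account.
function storageKeyProperty(accountName, accountKey) {
    return [
        "<property>",
        "  <name>fs.azure.account.key." + escapeXml(accountName) +
            ".blob.core.windows.net</name>",
        "  <value>" + escapeXml(accountKey) + "</value>",
        "</property>"
    ].join("\n");
}

var fragment = storageKeyProperty("gutenbergstore", "PLACEHOLDER_KEY");
console.log(fragment);
```

The output can be pasted into the configuration file next to the default account's property.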

In the RDP session, using the Hadoop command-line console, we can verify that the new storage can be accessed.

In the JavaScript Console, it works just the same.

Deploy and Run word count against the second storage

On the Samples page in the HDInsight console, deploy the Word Count sample.

Modify Parameter 1 to: asv://textfiles@gutenbergstore.blob.core.windows.net/ asv:///DaVinciAllTopWords

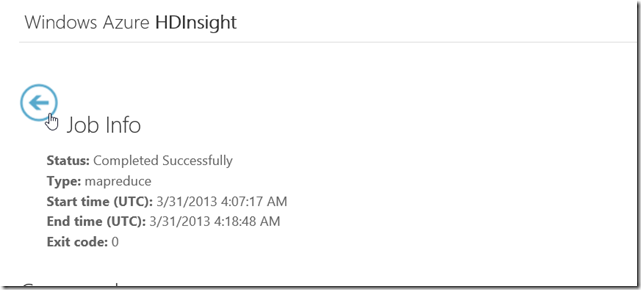

Navigate all the way back to the main page, click on Job History, and find the job that you just started running.

You can also check more detailed progress in the RDP session. Recall that we have 40 files, and there are 16 mappers in total (16 cores) running in parallel. The current status is: 16 complete, 16 running, 8 pending.
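The 16/16/8 breakdown follows from simple arithmetic, which the short sketch below works through. It assumes one map task per file and that each wave of tasks finishes before the next one starts. Real schedulers hand out slots as they free up, so treat this as a rough model rather than how Hadoop actually schedules work. The function name mapWaves is made up for this example.

```javascript
// Hypothetical helper: split taskCount tasks into waves of at most `slots`.
function mapWaves(taskCount, slots) {
    if (slots <= 0) {
        throw new Error("slots must be positive");
    }
    var waves = [];
    var remaining = taskCount;
    while (remaining > 0) {
        var size = Math.min(slots, remaining);
        waves.push(size);
        remaining -= size;
    }
    return waves;
}

// 40 files processed by 16 parallel mappers.
var waves = mapWaves(40, 16);
console.log(waves.join(", "));
```

With 40 tasks and 16 slots, the model gives three waves of 16, 16, and 8 tasks, matching the status above: the first wave is complete, the second is running, and the last 8 are pending.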

The job completed within about 10 minutes, and the results are stored in the DaVinciAllTopWords directory.

The result is about 256 MB.

Conclusion

We showed you how to configure additional ASV storage on your HDInsight cluster and run MapReduce jobs against it. This concludes our three-part blog series.