Graph Engine vs. C++ unordered_map: memory consumption test

"How is Graph Engine K/V store different from a... C++ map?"

Well, virtually they do the same thing after all: to store key-value pairs. So why bother using Graph Engine against the other? I'm not going to talk about remote access, fault tolerance and other fancy stuff for now... Let's take a look at a much easier, but critical factor: memory consumption.

Data structures

First let's calibrate our data structures. For the Graph Engine side, we drop TSL (wait didn't you just write a long post about the importance of TSL?) and leave only the bare metal memory cache. It stores cell IDs (keys), cell types (unsigned short values), cell blob location and cell size. To simulate this, we use the following data structure in C++:

struct CellEntry

{

void* ptr;

int32_t len;

uint16_t cellType;

};

Initially I picked map<K,V> instead of unordered_map<K,V>. After watching it crawling bytes at 20MB/s for minutes... ^C^C^C^C^C.

And here's the code.

C++:

#include <vector>

#include <iostream>

#include <unordered_map>

#include <cstdint>

#include <ctime>

using namespace std;

#define ENTRY_SIZE 314

#define ENTRY_COUNT (1<<20)*64

struct CellEntry

{

void* ptr;

int32_t len;

uint16_t cellType;

};

unordered_map<long, CellEntry> LocalStorage;

int _tmain(int argc, _TCHAR* argv[])

{

srand(time(NULL));

for (int i = 0; i < ENTRY_COUNT; ++i)

{

long id = rand() * rand();

void* p = new char[ENTRY_SIZE];

LocalStorage[id] = { p, ENTRY_SIZE, /*cellType:*/0 };

if (rand() % 3 == 0)

{

LocalStorage.erase(id);

delete[] p;

}

}

return 0;

}

C# w/ Graph Engine:

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using System.Threading.Tasks;

using Trinity;

namespace MemoryConsumptionTest_CSharp

{

internal class Program

{

const int ENTRY_SIZE = 314;

const int ENTRY_COUNT = (1 << 20) * 64;

static void Main(string[] args)

{

Random r = new Random();

byte[] buf = new byte[ENTRY_SIZE];

TrinityConfig.CurrentRunningMode = RunningMode.Embedded;

for (int i = 0; i < ENTRY_COUNT; ++i)

{

long id = r.Next() * r.Next();

Global.LocalStorage.SaveCell(id, buf, 0, ENTRY_SIZE, cellType: 0);

if(r.Next(3) == 0)

{

Global.LocalStorage.RemoveCell(id);

}

}

}

}

}

We store cells with a fixed length 314, 64 millions of them. Both C++ and C# will assure that the allocated memory gets committed unlike the malloc() function, which would need a memset() to trigger that. Also, along cell insertions, we randomly remove entries with the probability 1/3... This is to test their behaviors with regard to the memory fragmentations introduced by removals.

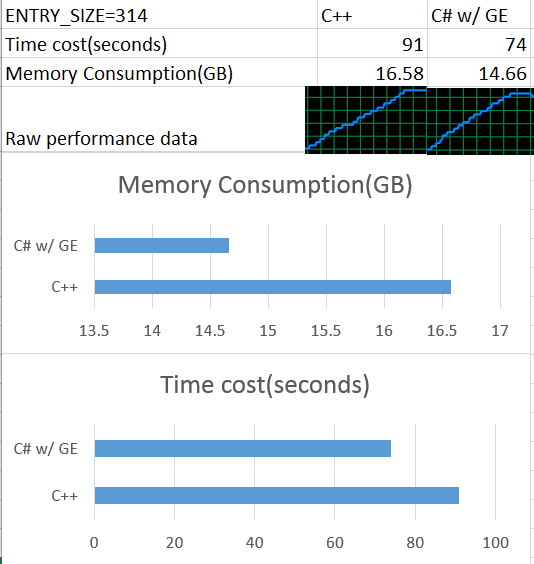

If the environment is correctly set up, the two programs should have the same semantics (remember to compile 64-bit programs, and make sure your machine can handle that ~20GB of memory allocation). The following performance data is acquired on a server with Xeon X5650@2.67Ghz and 96GB of RAM:

The "Raw performance data" row shows the screen shots of the task manager memory window during the two runs. Notice that for GE, there's a slight memory usage drop (only about a hundred of megabytes). This is due to the garbage collector running in the background collecting the space taken by the removed entries. I will go into the details of our garbage collector in another post.

As we can see, Graph Engine outperforms the unordered_map in both memory consumption (nearly 2GB less space) and speed (nearly 20 seconds faster).

And we're talking about the comparison between a full-fledged key-value store and a data structure...

Comments

Anonymous

June 04, 2015

Set the size of the map when creating it. Most of the time is in realloc and rehashing of keys.Anonymous

June 04, 2015

The c++ code has a serious memory leak, so no wonder it eats 17GB.Anonymous

June 04, 2015

Why does the C# code allocate buf outside of the loop (so once) while C++ does it inside (so 64 million times)? That should explain 64MB*314(ENTRY_SIZE)*2/3(a third is randomly released) or a difference of 13.4GB (maybe more if the memory allocator has some overhead, which is likely) and some of the CPU time. Also, what kind of memory metric are you reporting? Is it working set (ie memory in physical RAM, which wouldn't report the allocation of buf since you don't read or write from it) or commit size (ie amount of address space allocated)?Anonymous

June 05, 2015

@Tim, Thanks for pointing that out. I'm not sure about the granularity of the allocation. Will it adjust the strategy to make incremental allocation instead of power of two? Because it does not make sense if the new C++ already provides facility for in-place initialization, delayed, without committing all allocated stuff.Anonymous

June 05, 2015

The comment has been removedAnonymous

June 05, 2015

@Vincent, It's because the memory allocation occurs inside SaveCell. We have our own memory management subsystem specifically designed for our scenario. Also, all the allocated memory should be committed.Anonymous

June 05, 2015

@whacko, With the current logic, ideally the minimum space required is 64M * 314B * (2/3) + 1GB = 14.08GB(that 1GB for storing the cell entries), because I do freed 1/3 of the space. But it still takes 17GB...Anonymous

June 05, 2015

The comment has been removedAnonymous

June 05, 2015

I'm very disappointed in the deceptive use of bar graphs. The memory consumption bar graph does not contain the origin and as such makes it look like C++ is using 2.5x the amount of memory as C# when the reality is that the C++ program is using only 13% more memory.Anonymous

June 05, 2015

Regarding the 'still takes 17GB' comment. Calling LocalStorare.reserve() ahead of time may help a little bit with memory fragmentation on the C++ part thus reducing the memory. It may still take more memory than C# because of the compacting GC for C# though.Anonymous

June 05, 2015

@John, We're not using GC for C#. We implement our own GC inside Graph Engine. :-)Anonymous

June 05, 2015

@f64280 Yeah I'll update that later. Thanks!Anonymous

June 05, 2015

I hacked the code to count the leaks (on osx) and the run leaked 517957 times (0.77% of the time), leaking a bit more than 162638498 bytes (so I guess it isn't that disastrous)...Anonymous

June 05, 2015

The comment has been removedAnonymous

June 06, 2015

hi Michael, If you embed an array into the CellEntry, it would lose the flexibility to store entries of different sizes. I will show the idea in another post and test it agaist 64M of randomly-sized cell entries.