SCOM DataItem size limit and possible issues

This post describes a very rare situation that you might run into, either in very large SCOM environments or with very unusual workflows.

Initial scenario

A customer wanted to monitor a large distributed environment with a custom workflow. The workflow ran but never returned any data. We analyzed the workflow and found that its output was a DataItem of about 4,200,000 bytes. That is huge and not very common.

Our colleague Mihai Sarbulescu verified that there is in fact a limit of (4 x 1024 x 1024) - 1024 = 4,193,280 bytes for each DataItem.
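As a quick sanity check of that arithmetic, here is a minimal Python sketch. The names `DATA_ITEM_LIMIT` and `observed_size` are only illustrative, and 4,200,000 is the approximate size from the customer case, not an exact measurement.

```python
KIB = 1024

# 4 MiB minus 1 KiB, as given in the formula above
DATA_ITEM_LIMIT = 4 * KIB * KIB - KIB

# Approximate DataItem size observed in the customer case (illustrative value)
observed_size = 4_200_000

print(DATA_ITEM_LIMIT)                  # 4193280
print(observed_size > DATA_ITEM_LIMIT)  # True
print(observed_size - DATA_ITEM_LIMIT)  # 6720
```

So the customer's DataItem was only a few kilobytes over the limit, which was enough for it to be dropped.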

So what happens when a workflow creates a DataItem that exceeds the limit? It depends, but in our customer's case the data was simply dropped without any further information or notice.

What the heck is a DataItem?

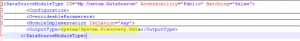

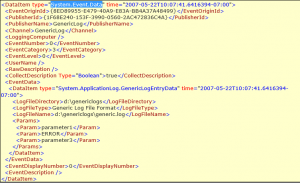

Every workflow in a SCOM Management Pack consists of modules. Modules can produce output data, which can be forwarded as input data into other modules. A data source module, for example, can generate output data of a certain type, like System.Discovery.Data or System.Event.Data.

The data generated by such a module is called a DataItem, and it is basically an XML document.

The total size of such a single DataItem should not exceed 4,193,280 bytes.

How can you check the DataItem size?

That can be quite tricky. In most cases, only discovery workflows generate that critical amount of data, and such workflows usually use scripts as a data source. If the data source module executes a script, you can run the script with the right parameters and redirect the result from StdOut into a text file.

C:\> cscript.exe my.workflow.script.vbs Param1 Param2 ... ParamN > C:\Temp\DataItem.txt

The text file then contains the DataItem, and the size of the file gives you an estimate of the DataItem size.
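If you want to compare the captured file against the limit automatically, a small sketch like the following could help. The function name `check_data_item_file` is hypothetical, and the result is only an estimate: the size on disk depends on the console encoding and may include the cscript banner unless the script is run with `//nologo`.

```python
import os
import sys

# Limit from this post: (4 x 1024 x 1024) - 1024 bytes
DATA_ITEM_LIMIT = 4 * 1024 * 1024 - 1024


def check_data_item_file(path, limit=DATA_ITEM_LIMIT):
    """Return (size_in_bytes, exceeds_limit) for a captured script output file."""
    size = os.path.getsize(path)
    return size, size > limit


if __name__ == "__main__" and len(sys.argv) > 1:
    # Example: python check_size.py C:\Temp\DataItem.txt
    size, too_big = check_data_item_file(sys.argv[1])
    status = "exceeds" if too_big else "is within"
    print(f"{size} bytes: {status} the limit of {DATA_ITEM_LIMIT} bytes")
```

A file that is close to the limit deserves a second look even if it is nominally below it, since the file size is not an exact measure of the DataItem.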

Other possible methods could be the SCOM ETL trace or the MP workflow analyzer.

My workflow is affected by this limit: what can I do?

The simple and plain answer: reduce the amount of data! Affected workflows should mostly be discovery workflows, so reduce the number of discovered properties or split the discovery workflow into several steps (= workflows) to reduce the resulting DataItem size.
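To plan such a split, it can help to estimate how the discovered data would be distributed across several workflows. The following is a generic Python sketch, not SCOM code: `split_by_size` is a hypothetical helper that greedily groups text fragments (for example, the XML for each discovered instance) so that each group stays within a byte budget. The budget should be chosen well below the limit to leave room for the surrounding DataItem XML.

```python
def split_by_size(fragments, budget):
    """Greedily group fragments so each group's UTF-8 size stays within budget."""
    batches = []
    current = []
    current_size = 0
    for fragment in fragments:
        size = len(fragment.encode("utf-8"))
        if size > budget:
            # A single fragment that is too large cannot be fixed by splitting
            raise ValueError("a single fragment exceeds the budget")
        if current and current_size + size > budget:
            # Adding this fragment would overflow the batch, so start a new one
            batches.append(current)
            current = []
            current_size = 0
        current.append(fragment)
        current_size += size
    if current:
        batches.append(current)
    return batches


# Illustrative data: five fragments of 40 bytes each, budget of 100 bytes
fragments = ["x" * 40 for _ in range(5)]
batches = split_by_size(fragments, budget=100)
print([len(batch) for batch in batches])  # [2, 2, 1]
```

Each resulting batch would then correspond to one discovery workflow. How the instances are actually assigned to workflows depends on the Management Pack design.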

Comments

- Anonymous

June 22, 2017

As far as I know, this is only true for the COM object MOM.ScriptAPI.