Faster, Higher, Stronger – UAG Performance

Everybody’s always talking about performance, but what does it really mean? In this post I’m going to describe some of the behind-the-scenes work we’ve been doing on UAG performance.

Performance improvements are a major area of focus for this release. First and foremost, UAG moved to a 64-bit architecture, overcoming the address-space limitation of the 32-bit architecture. In addition, we invested in reducing CPU and memory consumption in major scenarios, in establishing and verifying system settings for maximum capacity, and more. For example, one recent improvement takes advantage of the Windows port scalability feature in RPC over HTTP(S) scenarios.

Outlook clients tend to open a relatively large number of connections to the server (an average of 15-20, and up to 30, per Outlook client). Port scalability lets us use several IP addresses between UAG and the backend server, significantly increasing the number of available ports. As a result, UAG can publish Exchange to significantly more concurrent Outlook clients.
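To make the port arithmetic concrete, here is a rough back-of-the-envelope sketch. It assumes a simplified model in which every Outlook connection consumes one ephemeral source port on one of the UAG-to-backend IP addresses, and that each address offers the default Windows dynamic port range of 16,384 ports (49152-65535). Real capacity also depends on CPU, memory, and backend limits that this model ignores, and the function name `max_outlook_clients` is purely illustrative, not part of UAG.

```python
# Back-of-the-envelope model (an assumption, not UAG's actual logic):
# each Outlook connection uses one ephemeral port on one UAG-to-backend
# source IP, and port scalability lets every IP use the full dynamic
# port range independently of the others.

DEFAULT_DYNAMIC_PORTS = 65535 - 49152 + 1  # 16,384 ports (Windows default range)


def max_outlook_clients(source_ips: int, conns_per_client: int,
                        ports_per_ip: int = DEFAULT_DYNAMIC_PORTS) -> int:
    """Upper bound on concurrent Outlook clients under the simplified model."""
    if source_ips < 1 or conns_per_client < 1:
        raise ValueError("source_ips and conns_per_client must be positive")
    total_ports = source_ips * ports_per_ip
    # Whole clients only: a client needs all of its connections to work.
    return total_ports // conns_per_client


if __name__ == "__main__":
    for ips in (1, 2, 4):
        for conns in (15, 20, 30):
            print(f"{ips} IP(s), {conns} conns/client -> "
                  f"{max_outlook_clients(ips, conns):,} clients")
```

Under these assumptions, a single IP tops out at 819 clients averaging 20 connections each, while four IPs raise the ceiling to 3,276, roughly the fourfold gain that spreading connections over several addresses is meant to unlock.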

Test methodology and testing tools

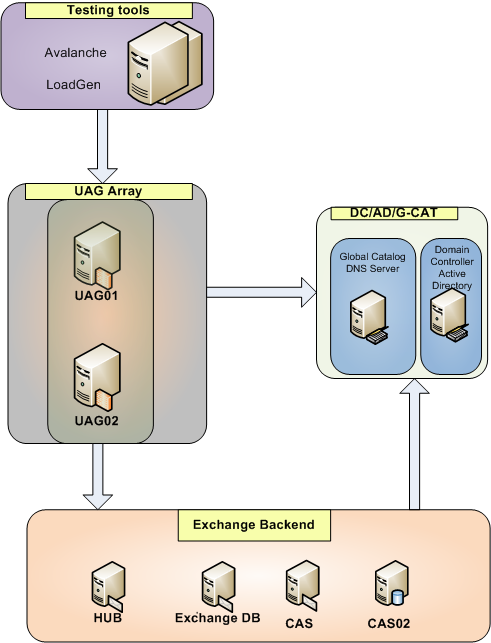

So how does the UAG product team validate system performance? Our performance testing environment is an end-to-end Microsoft environment that includes physical UAG servers, an Exchange backend, Active Directory/domain controllers, and load generators that simulate end-user machines. The idea is to simulate the customer environment as closely as possible, including Web Farm Load Balancing (WFLB) towards the backend, array/load balancer configuration on UAG, etc.

Some of the tools we use for UAG performance improvements and testing:

· Exchange Load Generator (LoadGen) is a load testing tool developed by the Microsoft Exchange team. Customers can use it to test UAG (and of course Exchange) performance in their environment prior to deployment. LoadGen supports various mail protocols; we use it to simulate Outlook Anywhere publishing.

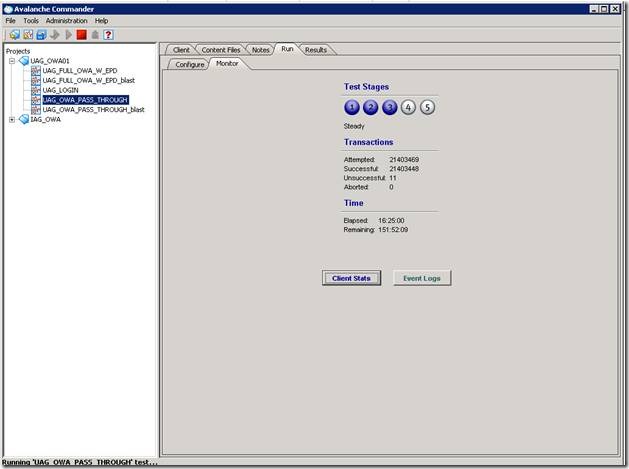

· The Avalanche appliance by Spirent Communications, which we use to simulate Web publishing (e.g., OWA).

Avalanche console

Performance test example

We've been running performance and stability testing for quite a long time. As an example, here are some results from one of the stability tests we ran in preparation for the UAG Beta release.

Test environment:

· Two UAG servers in an array, load-balanced with Windows Network Load Balancing (NLB)

· Exchange 2007 backend with two Client Access servers (CAS) load-balanced with WFLB, a Hub Transport server, and a mailbox store

Test scenario (load on each UAG server):

· 1K concurrent Outlook Web Access (OWA) users (54 Mbps throughput)

· 2K concurrent Outlook Anywhere/RPC over HTTP(s) users

Test length: 72 hours
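The scenario above lists load per server; a few derived figures help put it in context. The sketch below only multiplies and divides the numbers from the test description, plus one assumption labeled in the code: the 15-20 connections per Outlook client average quoted earlier in this post. All names are illustrative.

```python
# Derived figures for the stability test described above. Inputs come from
# the test description; CONNS_PER_OA_CLIENT is an ASSUMPTION borrowed from
# the 15-20 connections-per-client average quoted earlier in the post.

UAG_SERVERS = 2
OWA_USERS_PER_SERVER = 1_000
OWA_THROUGHPUT_MBPS = 54          # OWA throughput on each server
OA_USERS_PER_SERVER = 2_000       # Outlook Anywhere / RPC over HTTP(S)
CONNS_PER_OA_CLIENT = (15, 20)    # assumed average range


def per_user_kbps(total_mbps: float, users: int) -> float:
    """Average OWA throughput per user, in kilobits per second."""
    return total_mbps * 1000 / users


def array_totals() -> dict[str, int]:
    """Aggregate load across the whole UAG array (same load on each server)."""
    return {
        "owa_users": UAG_SERVERS * OWA_USERS_PER_SERVER,
        "oa_users": UAG_SERVERS * OA_USERS_PER_SERVER,
        "owa_mbps": UAG_SERVERS * OWA_THROUGHPUT_MBPS,
    }


def oa_connections_per_server() -> tuple[int, int]:
    """Estimated range of concurrent Outlook Anywhere client connections per server."""
    low, high = CONNS_PER_OA_CLIENT
    return (OA_USERS_PER_SERVER * low, OA_USERS_PER_SERVER * high)


if __name__ == "__main__":
    kbps = per_user_kbps(OWA_THROUGHPUT_MBPS, OWA_USERS_PER_SERVER)
    print(f"OWA throughput per user: {kbps:.0f} kbps")
    print("Array totals:", array_totals())
    print("OA client connections per server:", oa_connections_per_server())
```

Each OWA user averages about 54 kbps, and under the connection-count assumption each server carries on the order of 30,000 to 40,000 concurrent Outlook Anywhere client connections, which shows why connection and port capacity is a concern in RPC over HTTP(S) scenarios.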

Typical test environment

The observed test results were as follows:

End-user response times (collected by Avalanche, for the OWA test):

| Action | Response time (sec) |
|---|---|
| Get Logon Page | 0.187 |
| Login | 0.706 |
| Inbox | 0.905 |

UAG server resource utilization (collected with Performance Monitor on the UAG server):

UAG performance statistics example

What’s next?

This is just a first glance at the UAG performance story. We're working on further optimizing and verifying UAG performance and scalability toward our release candidate (RC) and the final release, extending the performance tests with additional scenarios (e.g., ActiveSync), and more. Stay tuned!

Olga Levina

Program Manager, UAG Product Group

Contributors: Asaf Kariv, Dima Stopel, Oleg Ananiev

Comments

Anonymous

January 01, 2003

Any info on a timing estimate for RC availability?

Anonymous

August 04, 2009

Olga, it's great that Microsoft is participating in load testing and publishing results. I'd be very interested in other test scenarios, like many clients doing RDP through UAG, many clients using Network Connector, etc. For each test I'd also like to see more specifics of the HW (CPU/L2 cache, RAM, etc.), and would like to see comparisons of the same test run with certain features on and off, like endpoint detection, endpoint policies, URL rulesets, authorization, etc. Or, at minimum, I'd like to know whether these features were on or off in any specific test. I'd also like to see the system stressed so we can see how many concurrent users it could handle before performance started degrading. Keep up the good work! Thanks, Mark

Anonymous

August 05, 2009

I would also like to see more data on the performance counters per application/method (from best case, assuming pure web scaling up to several thousand users and beyond, to worst case, 80-100 applications with a mixture of web and socket, again scaling up to several thousand to see what the load base is), as well as the counters Mark mentioned. Excellent data.

Anonymous

August 06, 2009

I really appreciated this article. It helps answer many clients' questions regarding the performance of UAG, especially as UAG becomes better known amongst SharePoint users. This is the area that I and most SP admins see UAG being used for.

Anonymous

November 30, 2009

Hello, has there been any update to this data based on new releases?