Standards-Based Interoperability

There has been quite a bit of discussion lately in the blogosphere about various approaches to document format interoperability. It’s great to see all of the interest in this topic, and in this post I’d like to outline how we look at interoperability and standards on the Office team. Our approach is based on a few simple concepts:

- Interoperability is best enabled by a multi-pronged approach based on open standards, proactive maintenance of standards, transparency of implementation, and a collaborative approach to interoperability testing.

- Standards conformance is an important starting point, because when implementations deviate from the standard they erode its long-term value

- Once implementers agree on the need for conformance to the standard, interoperability can be improved through supporting activities such as shared stewardship of the standard, community engagement, transparency, and collaborative testing

It’s easy to get bogged down in the details when you start thinking through interoperability issues, so for this post I’m going to focus on a few simple diagrams that illustrate the basics of interoperability. (These diagrams were inspired by a recent blog post by Wouter Van Vugt.)

Interoperability without Standards

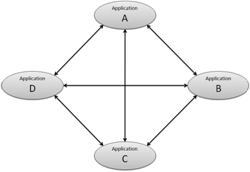

First, let’s consider how software interoperability works when it is not standards-based.

Consider the various ways that four applications can share data, as shown in the diagram to the right. There are six connections between these four applications, and each connection can be traversed in either direction, so there are 12 total types of interoperability involved. (For example., Application A can consume a data file produced by Application B, or vice versa.)

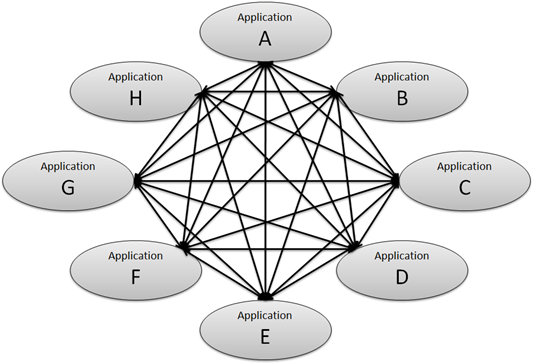

As the number of applications increases, this complexity grows rapidly. Double the number of applications to 8 total, and there will be 56 types of interoperability between them:

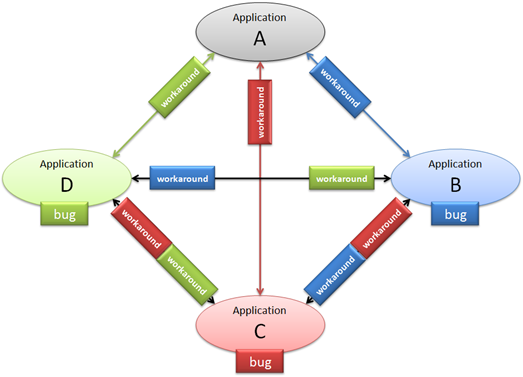

Let’s go back to the simple case of 4 applications that need to interoperate with one another, and take a look at another factor: software bugs. All complex software has bugs, a nd some bugs can present significant challenges to interoperability. Let’s consider the case that 3 of the 4 applications have bugs that affect interoperability, as shown in the diagram to the right.

nd some bugs can present significant challenges to interoperability. Let’s consider the case that 3 of the 4 applications have bugs that affect interoperability, as shown in the diagram to the right.

The bugs will need to be addressed when data moves between these applications. Some bugs can present unsolvable roadblocks to interoperability, but for purposes of this discussion let’s assume that every one of these bugs has a workaround. That is, application A can take into account the known bug in application B and either implement the same buggy behavior itself, or try to fix up the problem when working with files that it knows came from application B.

Here’s where those workarounds will need to be implemented:

Note the complexity of this diagram. There are 6 connections between these 4 applications, and everyone one of them has a different set of workarounds for bugs along the path. Furthermore, any given connection may have different issues when data moves in different directions, leading to 12 interoperability scenarios, every one of which presents unique challenges. And what happens if one of the implementers fixes one of their bugs in a new release? That effectively adds yet another node to the diagram, increasing the complexity of the overall problem.

In the real world, interoperability is almost never achieved in this way. Standards-based interoperability is much better approach for everyone involved, whether that standard is an open one such as ODF (IS26300) or Open XML (IS29500), or a de-facto standard set by one popular implementation.

Standards-Based Interoperability

In the world of de-facto standards, one vendor ends up becoming the “reference implementation” that everyone else works to interoperate with. In actual practice, this de-facto standard may or may not even be written down – engineers can often achieve a high degree of interoperability simply by observing the reference implementation and working to follow it.

De-facto standards often (but not always) get written down to become public standards eventually. One simple example of this is the “Edison base” standard for screw-in light bulbs and sockets, which started as a proprietary approach but has long since been standardized by the IEC. In fact this is a much more common way for standards to become successful than the “green field” approach in which the standard is written down first before there are any implementations.

Once a standard becomes open and public, the process for maintaining it and the way that implementers achieve interoperability with one another changes a little.

The core premise of open standards-based interoperability is this: each application implements the published standard as written, and this provides a baseline for delivering interoperability. Standards don’t address all interoperability challenges, but the existence of a standard addresses many of the issues involved, and the other issues can be addressed through standards maintenance, transparency of implementation details, and collaborative interoperability testing.

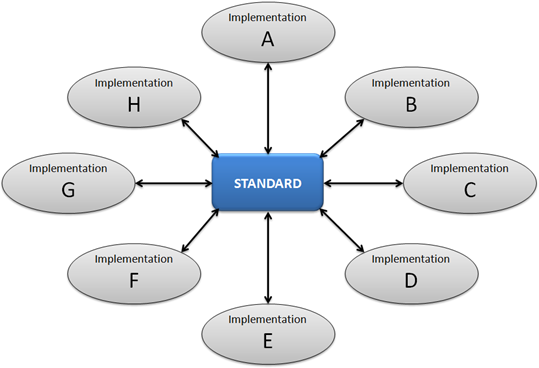

In the standards-based scenario, the standard itself is the central mechanism for enabling interoperability between implementations:

This diagram is much simpler than the other diagram above that showed 56 possible connections between 8 implementations. The presence of the standard means that there are only 8 connections, and each connection only has to deal with the bugs in a single implementation.

How this all applies to Office 2007 SP2

I covered last summer the set of guiding principles that we used to guide the work we did to support ODF in Office 2007 SP2. These principles were applied in a specific order, and I’d like to revisit the top two guiding principles to explain how they support the view of interoperability that I’ve covered above.

Guiding Principle #1: Adhere to the ODF 1.1 Standard

In order to achieve the level of simplicity shown in the diagram above, the standard itself must be carefully written and implementers need to agree on the importance of adhering to the published version of the standard. That’s why we made “Adhere to the ODF 1.1 Standard” our #1 guiding principle. This is the starting point for enabling interoperability.

Recent independent tests have found that our implementation does in fact adhere to the ODF 1.1 standard, and I hope others will continue to conduct such tests and publish the results.

Guiding Principle #2: Be Predictable

The second guiding principle we followed in our ODF implementation was “Be Predictable.” I’ve described this concept in the past as “doing what an informed user would likely expect,” but I’d like to explain this concept in a little more detail here, because it’s a very important aspect of our approach to interoperability in general.

Being predictable is also known as the principle of least astonishment. The basic concept is that users don’t want to be surprised by inconsistencies and quirks in the software they use, and software designers should strive to minimize or eliminate any such surprises.

There are many ways that this concept comes into play when implementing a document format such as ODF or Open XML. One general category is mapping one set of options to a different set of options, and I used an example of this in the blog post mentioned above:

When OOXML is a superset of ODF, we usually map the OOXML-only constructs to a default ODF value. For example, ODF does not support OOXML’s doubleWave border style, so when we save as ODF we map that style to the default border style.

Our other option in this case would have to turn the text box and the border into a picture. That would have made the border look nearly identical when the user opened the file again, but we felt that users would have been astonished (in a bad way) when they discovered that they could no longer edit the text after saving and reopening the file.

What about Bugs and Deviations?

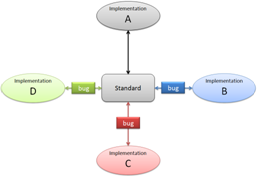

Of course, the existence of a published standard doesn’t prevent interoperability bugs from occurring. These bugs may include deviations from the requirements of the standard. In addition, they may include different interpretations of ambiguous sections of the standard.

The first step in addressing these sorts of real-world issues is transparency. It’s hard to work around bugs and deviations if you’re not sure what they are, or if you have to resort to guesswork and reverse engineering to locate them.

Our approach to the transparency issue has been to document the details of our implementation through published implementer notes. We’ve done that for our implementations of ODF 1.1 and ECMA-376, and going forward we’ll be doing the same for IS29500 and future versions of ODF when we support them.

Interoperability Testing

The final piece of the puzzle is hands-on testing, to identify areas where implementations need to be adjusted to enable reliable interoperability.

This is where the de-facto standard approach meets the public standard. If the written standard is unclear or allows for multiple approaches to something, but all of the leading implementations have already chosen one particular approach, then it is easy for a new entrant to the field to see how to be interoperable. If other implementers have already chosen diverging approaches however, then it is not so clear what to do. Standards maintainers can help a great deal in this situation by clarifying and improving the written standard, and new implementers may want to wait on implementing that particular feature of the standard until the common approach settles out.

We did a great deal of interoperability testing for our ODF implementation before we released it, both internally and through community events such as the DII workshops. We’ve also worked with other implementers in a 1-on-1 manner, and going forward we’ll be participating in a variety of interoperability events. These are necessary steps in achieving the level of interoperability and predictability that customers expect these days.

In my next post, I’ll cover our testing strategy and methodology in more detail. What else would you like to know about how Office approaches document format interoperability?

Comments

Anonymous

June 05, 2009

Hi Doug, Thanks for asking :] When do you will release a fix for the formulas issues on SP2 (there's a lot of people asking me about it in Brazil... And I mean "important" people). Best, JomarAnonymous

June 05, 2009

Hi Jomar, For information on our approach to formula support in SP2's implementation of ODF 1.1, see these posts: http://blogs.msdn.com/dmahugh/archive/2009/05/05/odf-spreadsheet-interoperability.aspx http://blogs.msdn.com/dmahugh/archive/2009/05/09/1-2-1.aspx As covered there, we'll be looking closely at Open Formula when it is approved and published. Personally, I think Open Formula looks very promising, and it will be good to have a published standard for formulas in future versions of ODF. Did you have any thoughts on the topics covered in the blog post above? I'd be interested in knowing, for example, whether or not you agree that standards conformance is important for enabling standards-based interoperability.Anonymous

June 05, 2009

The comment has been removedAnonymous

June 05, 2009

Hi Doug, nice post ( and nice illustrations ). Can you tell us about the advance of the fix in ODF formula handling in Office 2007 ( you know, the "square-bracket-thing" that prevents any real interoperability between spreadsheets generated by Microsoft(TM) Office 2007 and the Rest Of The World(TM) ODF spreadsheets ). Thanks in advance.Anonymous

June 05, 2009

The comment has been removedAnonymous

June 05, 2009

@Dennis, the tractor-pull analogy is right on. The principles are indeed often in tension/opposition with one another on certain details. There’s always room for people to debate a given approach is more or less astonishing than another approach, of course. But our Excel program managers felt strongly, based on their long experience with real customer issues, that if you open a spreadsheet someone sends you and see different results from the calculation than the sender saw that would be much worse experience than if you saw the same results they sent, but you could not easily recalculate them. With the current state of ODF 1.1 spreadsheet interop there is just no easy way for someone to know if they are seeing the same calculation results that someone else saw. And the astonishment could be severe if, for example, you accept someone’s bid to remodel your kitchen for $10,000 and then later they tell you that in their favorite spreadsheet application the total came to $15,000. Thanks for your comment, Franco. Regarding formulas, note the responses to Jomar and Dennis above. I think it's important that we all remember there is NO published standard for ODF spreadsheet formulas yet. Nor is there any de-facto standard that everyone agrees on. In truth, among all of the other ODF implementations real-world complex spreadsheets don’t interoperate very well either. The only way an implementer can try to make ODF spreadsheet interop work today (and by work I mean not just for trivial cases, but really work reliably for real-world complex spreadsheets) is by the “spaghetti diagram" method, with all of the complexity and risk of bugs that entails. No implementer we know of has attempted that, and the very point of my post was to explain why we don’t think this is a good approach, and why we think the standards-based approach is better. In the case of spreadsheet formulas, help is on the way -- OpenFormula is under development for use with ODF 1.2. In the meantime, we should not pretend that all was well with spreadsheet interop before SP2 came along. And (in my opinion) we would be collectively better off to spend our energies on solving the problem instead of complaining about it. FYI, I’d like to keep this thread on-topic around the concepts covered in this post, as outlined in my comment policy. There are already 118 comments on the two threads about formulas that I linked to above, so I’ll only be letting more comments on that topic appear here if they truly add something new to the discussion that has not already come up in those threads.Anonymous

June 06, 2009

The comment has been removedAnonymous

June 06, 2009

The comment has been removedAnonymous

June 07, 2009

Still no committee draft on OpenFormula spec in OASIS. Will OASIS even allow ODF 1.2 to continue in the standardization proces when it is relying heavily on a specifcation still in draft form ?Anonymous

June 08, 2009

@A Nonymous, The concept of an implementation round-tripping content that it doesn't understand is something we considered during initial planning of our ODF implementation. It was also one of the topics raised during the roundtable discussions at the DII event in Redmond last July. The problem is that if you don't understand a piece of content, then you can't reliably know whether other changes you've made in areas that you do understand may have invalidated the content. Consider, for example, a spreadsheet like this:

Suppose we open that spreadsheet in an implementation that round-trips content it doesn't understand, and we insert a header row. What happens? The cells all shift down, but the formula gets round-tripped without a change. So when you open the modified spreadsheet back in the original application, you see a different result than it had before:

@Andre, you're right, things get very messy when you have to deal with not only multiple products, but multiple versions of each one.

Anonymous

June 08, 2009

The comment has been removedAnonymous

June 09, 2009

The comment has been removedAnonymous

June 09, 2009

> In my next post, I’ll cover our testing strategy and methodology in more detail. What else would you like to know about how Office approaches document format interoperability? Doug: could you shed some light if and how the MS Office-team and the ODF-converter team deal with interop testing between 2007sp2 and the plug-in? Since MS provides technical and architectural guidance for the OpenXML/ODF converter project, I assume both teams somehow share the same methodology on testing two different products, so it could be helpful for other ODF-developers as well... Thanks, BartAnonymous

June 09, 2009

@A Nonymous, this sounds potentially fragile to me, because the consumer would not be taking full responsibility for the integrity of the content, but would still be modifying certain aspects of it. @Mitch, your suggestion is essentially a combination of various approaches used in existing implementations. Have you suggested that the current versions of Symphony (1.2) and OpenOffice.org (3.1) should implement this multiple-choice approach as well? In any event, I think the best path forward is for all of us to focus on making Open Formula as interoperable as possible. The wide variety of approaches that people have suggested indicates a need for an agreed-upon standard in this area. @Bart, that's a good question, I'll plan to address it in my next post.Anonymous

June 09, 2009

@Doug: yup, they should. The fact that they don't is no reason not to one-up them, is it? You could, like, innovate... If they do imitate you, then interoperability wins anyway! Thing is, their approach (keep the formula) may allow a patient user to re-write a new formula based on the old one and get his (proper) results (in essence, to recreate user intent). Agreed, it's far from being the best solution, but there's no actual loss: result can be found again without external reference. Current Excel system's would mean that a spreadsheet named LifeTheUniverseAndEverything.odc woud merely contain 42 in Excel - the question would be scrapped. And them Vogons are not patient. Now, another (non-interactive, but maybe more workflow-disruptive) solution would be a new error message: #NAMESPACE:42 (namespace is not know; last known good value is 42), which would be technically correct (error:can't parse the formula), informative (error caused by unknown namespace), non-destructive (formula and namespace appear in edit mode, like in other spreadsheet programs, and last good value is kept after error message) and forward looking (the day that namespace is supported, formula can be computed again). Or both solutions, I don't know? It could also be added to ODF 1.2 (how to manage formulas from different namespaces), and it doesn't prevent OpenFormula's development. Of course, it would mean some careful thinking: how does one work with a spreadsheet that uses several formula namespaces? Although the only thing taken into account by a formula is the 'last known good value', there could be problems such as floating point precision (some may accept double 32-bit float precision, others be limited to single 32-bit precisions, others may use 256-bit precision...), or dates (oxml-f may accept 1902-02-29, odf-of certainly won't) to work out... I do think those questions should be addressed as soon as possible; destroying a formula ain't a good solution, converting it from one format to another isn't either (ceil() and floor() in MS Office for example are mathematically wrong on negative values; a parameter exists in oooc and OF to deal with import, but there's no way to export floor() or ceil from those namespaces to MS formulas correctly), and merely keeping the last known good value... well, if last known good value is 256-bit precision and is read on a spreadsheet program that has single 32-bit precision, then if Excel's solution is used, not only will you lose the formula, you'll also lose the actual value (loss of precision). I dunno, maybe I'm overlooking something, what do you think?Anonymous

June 10, 2009

The comment has been removedAnonymous

June 10, 2009

Um, "potentially fragile"? Which part of this is fragile? (I will refrain from commenting on this comment appearing to be an application of "fear, uncertainty, and doubt".) Presuming of course, that the original suggestion, making the formulas and input cells READ-ONLY, means the integrity of THAT content is unimpeachable. And I am presuming that the application (excel) is already doing these other things (tracking cell relocation, and cell modification), already. If relocation is handled (it is), that is most of the battle. If it is NOT the case that excel relies exclusively on modifications of either input values or formulas, to trigger formula calculation, then excel is grossly flawed. If it IS the case (that it relies exclusively), then the rest of the battle is won. My questions are:

- Whose call at Microsoft is it, to do this or not do this?

- If this was considered and discarded, I fail to see the rationale for doing so. Can you elaborate on that decision?

- Whose call at Microsoft is it, to revisit the question of using this method to support foreign namespaces in read-only, round-trip proof ways? Name Withheld

Anonymous

June 14, 2009

In this blog post, I’m going to cover some of the details of how we approached the challenges of testingAnonymous

June 15, 2009

Microsoft neemt vandaag en morgen (15 en 16 juni 2009) deel aan een ODF bijeenkomst in Den Haag. HetAnonymous

June 19, 2009

Voici les derniers posts concernant Open XML et l’implémentation d’ODF dans Office 2007 SP2. De très