Assess an Enterprise With Data Migration Assistant–Part 3: Loading an Assessment

In my previous post I took you through how to run a scaled assessment using the dmaDataCollector PowerShell function.

In this post I’ll take you through how to consume the assessment JSON output using the dmaProcessor PowerShell function.

Consuming the assessment JSON file

Once your assessment has finished you are now ready to import the data into SQL Server for analysis.

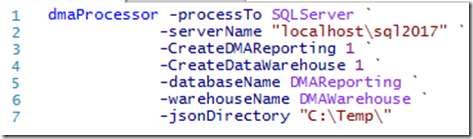

Open PowerShell and run the dmaProcessor function.

Parameters

- processTo – Where the JSON file will be processed too. Possible values are SQLServer and AzureSQLDatabase

- serverName – The SQL Server instance of where the data is to be processed too. If using AzureSQLDatabase then put the SQL Server name only (do not put .database.windows.net). You will be prompted for 2 logins when targeting Azure SQL Database. The first is your Azure tenant credentials, the second is your admin login for the Azure SQL Server.

- CreateDMAReporting – This will create the staging database where the JSON file will be processed too. If the database already exists and this flag is set to one then the objects do not get created. This is useful is a single object is dropped as this will create the object again

- CreateDataWarehouse – This will create the datawarehouse which will be used by the PowerBI report

- databaseName – The name of the DMAReporting database

- warehouseName – The name of the data warehouse database

- jsonDirectory – The directory containing the JSON assessment file. If there are multiple JSON files in the directory then they are processed 1 by 1

The dmaProcessor should take only a few seconds to process a single file.

Loading the data warehouse

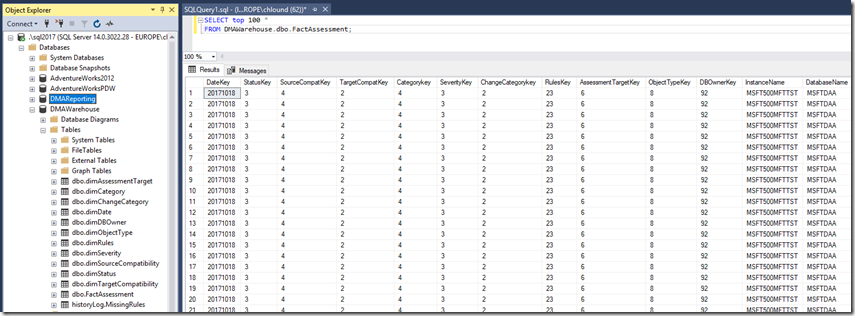

Once the dmaProcessor has finishing processing the assessment files, the data will be loaded into the DMAReporting database in the ReportData table.

From here we need to load the data warehouse.

Use the attached script to populate any missing values in the dimensions then load the data warehouse.

The script will take the data from the ReportData table in the DMAReporting database and load it into the warehouse. If there are any errors during this load process it will likely be due to missing entries in the dimension tables.

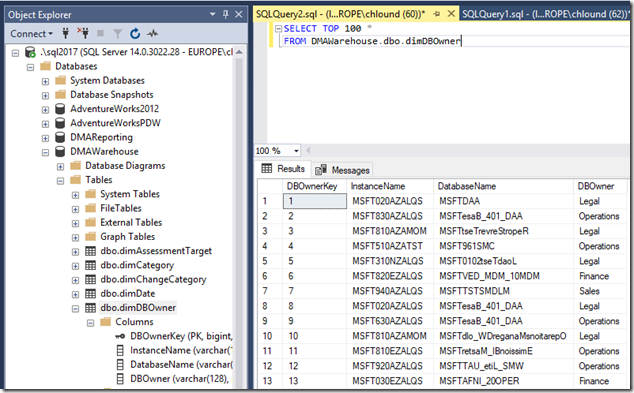

Set your database owners

To get the most value from the reports it’s a great idea to now set the database owners in the dimDBOwner dimension and update DBOwnerKey in the FactAssessment table. This will allow slicing and filtering the PowerBI report based on specific database owners.

The attached script provides the basic TSQL statements for you to do this. However, it is not mandatory.

In the next post we will wrap this process up by adding the reporting on top of this data warehouse.

Get the warehouse loading script here

Script Disclaimer

The sample scripts provided here are not supported under any Microsoft standard support program or service. All scripts are provided AS IS without warranty of any kind. Microsoft further disclaims all implied warranties including, without limitation, any implied warranties of merchantability or of fitness for a particular purpose. The entire risk arising out of the use or performance of the sample scripts and documentation remains with you. In no event shall Microsoft, its authors, or anyone else involved in the creation, production, or delivery of the scripts be liable for any damages whatsoever (including, without limitation, damages for loss of business profits, business interruption, loss of business information, or other pecuniary loss) arising out of the use of or inability to use the sample scripts or documentation, even if Microsoft has been advised of the possibility of such damages. Please seek permission before reposting these scripts on other sites/repositories/blogs.