FIM 2010 Performance Testing - Hardware

My goal with this post is to give you some data on the type of hardware we are using internally for our testing, which you could use to help inform your own hardware needs. This is the hardware we are using to evaluate the product for release & to verify that it will be able to support the workloads needed in MSIT. This hardware is configured for testing, not for production. For example, we have our hard drives configured in RAID 0 to get the most out of fewer drives in our testing, but you would likely have yours set up for redundancy, in which case you could add drives to get similar results.

As in my previous post about our topologies, there are two basic classes of hardware we use in our testing, which I will refer to as a standard machine & a performance machine.

| | Standard Machine | Performance Machine |
| --- | --- | --- |
| CPU | 1 x 4-core Core 2 Q6600, 2.4 GHz | 2 x 4-core Xeon E5410, 2.33 GHz |
| Memory | 4 GB | 32 GB |
| Hard Drive | Single hard drive | 8 x 136 GB 10k hard drives |

On the performance machine, the 8 hard drives are currently allocated as follows. Your situation will be different, but we have standardized our machines on a single drive configuration for both Sync & FIMService so that we can use a machine for either one depending on our testing needs.

| Drive | Drive Setup | Purpose |
| --- | --- | --- |
| C | 1 x 136 GB 10k hard drive | OS and applications |
| E | 1 x 136 GB 10k hard drive | SQL logs (ldf files) |
| F | 6 x 136 GB 10k hard drives (RAID 0) | SQL data files (mdf files) |
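If you plan to swap the RAID 0 data volume for a redundant layout, a rough way to size it is to count the drives that hold unique data. The sketch below is only back-of-the-envelope arithmetic under a stated assumption: that RAID 10 (striped mirrors) is the redundant layout you pick, and that the number of data stripes is a crude proxy for throughput. Real results depend on the controller, cache, and workload. The `ArrayLayout` class and `raid10_drives_to_match` helper are hypothetical names used for illustration.

```python
from dataclasses import dataclass

DRIVE_GB = 136  # per-drive size taken from the table above


@dataclass(frozen=True)
class ArrayLayout:
    """A drive array described by RAID level and drive count (illustrative only)."""
    level: str            # "RAID0" or "RAID10"
    drives: int
    drive_gb: int = DRIVE_GB

    @property
    def data_stripes(self) -> int:
        """Number of drives' worth of unique (non-mirrored) data."""
        if self.level == "RAID0":
            return self.drives
        if self.level == "RAID10":
            if self.drives < 4 or self.drives % 2:
                raise ValueError("RAID 10 needs an even number of drives, at least 4")
            return self.drives // 2  # every stripe is mirrored once
        raise ValueError(f"unsupported level: {self.level}")

    @property
    def usable_gb(self) -> int:
        return self.data_stripes * self.drive_gb


def raid10_drives_to_match(raid0_drives: int) -> int:
    """Drives needed in RAID 10 to keep the same stripe count as a RAID 0 set."""
    return 2 * raid0_drives


test_rig = ArrayLayout("RAID0", 6)  # the F: volume in our rig
mirrored = ArrayLayout("RAID10", raid10_drives_to_match(test_rig.drives))
print(test_rig.usable_gb, mirrored.drives, mirrored.usable_gb)  # 816 12 816
```

Under these assumptions, matching the 6-drive RAID 0 data volume with mirrored stripes would take 12 drives for the same 816 GB of usable space.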

In each of our performance rigs we have 2 performance machines, plus as many standard machines as the topology needs. In our standard topology we use the standard machine for a client & for the other test machines, which run Visual Studio Team System (VSTS) to generate our load.

The performance machines thus far have always been allocated to SQL Server, as that is where the primary horsepower is needed for both Sync & FIMService. Each of these components uses resources in a different manner.

Synchronization Service

For the Synchronization Service (formerly MIIS), the place where we have observed the most bang for your buck is the disk subsystem. Increasing your disk throughput will increase the performance of sync the most. Most of the time, if you watch resource utilization while Sync is running, you will see the disk under the heaviest utilization.

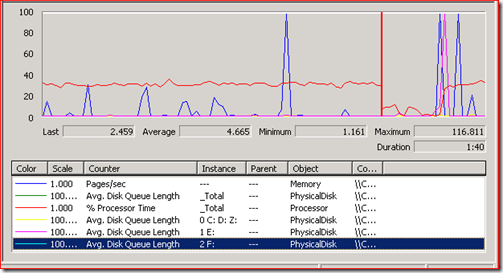

Resource Utilization of Synchronization Service

Notice that the white line at the top is our SQL Server drive.

FIM Service

FIM Service leverages SQL to do a large amount of its processing; if you ran SQL Profiler on the system you would observe some very large queries being executed. SQL queries handle many of the core services of our product, including query evaluation, rights enforcement, & set transitions. As such, for the FIMService database, more CPU power will give you the best increase in performance.

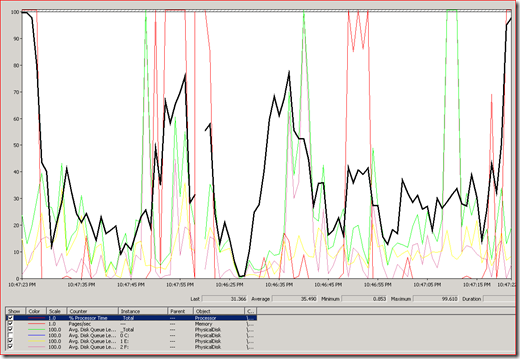

Resource Utilization of FIMService

From the above you can see that we occasionally have spikes of usage in memory & on the F drive, which is the SQL data file drive in this rig. Furthermore, in this case I have the FIMService & SQL Server on the same machine, so we can compare the CPU utilization of both processes to see where the processing power is being used most.

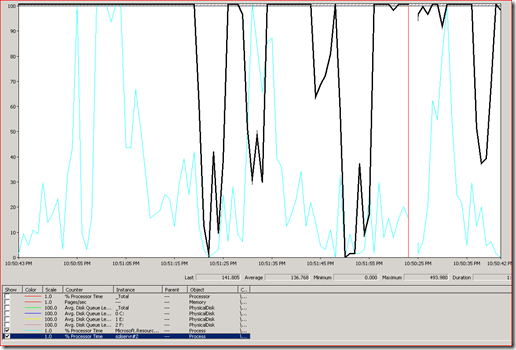

FIMService vs. SQL Server CPU Utilization

In the chart above, the highlighted black line is the CPU usage of SQL Server & the light blue line is the CPU usage of Microsoft.ResourceManagementService. This demonstrates, at least at this point in time, that the primary consumer of your CPU, & where you should invest your CPU power, is your SQL Server.
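If you want to make the same comparison on your own rig without eyeballing a chart, one option is to log the two process counters to CSV and average them. The sketch below assumes the CSV layout that Performance Monitor tools such as typeperf typically write (a timestamp column followed by one column per counter path). The machine name `RIG1`, the `average_counters` helper, and every sample value are made up for illustration; they are not measurements from our rigs.

```python
import csv
import io
from statistics import mean


def average_counters(csv_text: str) -> dict[str, float]:
    """Average each counter column of a perf counter CSV log, skipping blank samples."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    names = header[1:]  # first column is the timestamp
    samples: dict[str, list[float]] = {name: [] for name in names}
    for row in reader:
        for name, value in zip(names, row[1:]):
            try:
                samples[name].append(float(value))
            except ValueError:
                continue  # blank or non-numeric sample, e.g. the first reading
    return {name: mean(vals) for name, vals in samples.items() if vals}


# Hypothetical log: values are invented. Process "% Processor Time" can
# exceed 100 on a multi-core machine.
SAMPLE_LOG = r'''"(PDH-CSV 4.0) (Pacific Standard Time)(480)","\\RIG1\Process(sqlservr)\% Processor Time","\\RIG1\Process(Microsoft.ResourceManagementService)\% Processor Time"
"10/10/2009 10:00:00.000"," "," "
"10/10/2009 10:00:05.000","310.0","45.0"
"10/10/2009 10:00:10.000","290.0","55.0"
"10/10/2009 10:00:15.000","300.0","50.0"
'''

averages = average_counters(SAMPLE_LOG)
busiest = max(averages, key=averages.get)
for name, value in sorted(averages.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{value:7.1f}  {name}")
```

With the invented numbers above, the `sqlservr` counter comes out on top, which mirrors the shape of the chart; your own logs may of course look different.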

In my next post I will discuss policy objects & how we are thinking about them in our performance testing.

Comments

Anonymous

October 10, 2009

Great stuff Darryl. I would also love to see whether altering the network packet size in SQL makes any difference to performance. In the past I've increased it from the default 4k all the way to 32k, but my increases in performance are anecdotal until I can do further testing. Also, is there any way you can get your hands on one of the SSD devices like the Fusion IO PCI-X device that can deliver over 1 GB/s throughput with a mere 50 microsecond latency (over 100k IOPS!)? I'm dying to see the ILM/FIM backend run on one of those.