Build a Cloud-hosted, Web-Based Blob Copy Utility using Node.js

Introduction

The goal is simple. Present a web form that allows a user to type in a URL. A username and password are also accepted.

When the user clicks Perform Copy, the image pointed to by the URL is copied to Azure Blob storage through a streaming mechanism.

We will build the blob copy utility using Node.js and host it in Azure Websites.

There are a few things to accomplish at the Azure portal. First, we will provision an Azure website. We will also provision an Azure storage account.

The assumption here is that you already have a repository defined in GitHub. We will connect our Azure website to GitHub.

Workplan

As you can see, we have a few things to do at the portal in addition to writing some code. I will assume you have the skills to figure out how to provision a storage account. But I will walk you through the new portal, where we provision an Azure website.

We have three pieces of code. We have one Node.js file called server.js. For the web form we have SubmitForm.html and style.css.

Provision Github Repo

We will log in to GitHub and define a new repository called blobcopy.

Provide a name for your repo.

Copy the following code from the Github web site.

Make a folder on your local drive.

Paste in the commands from the clipboard. This will associate your local repository with the repo hosted at Github.


Provision Web App at the Portal

Let's begin by creating an Azure website to host our Node.js application. We will go to the Azure portal to provision it.

Click the New button in the lower left corner.

From the Create menu, choose Web + Mobile.

The GUI will expand. On the right, click Web App.

Provide a name for your Node.js hosted website. In my case I am calling it myblobcopy, but your name will be different.

Choose the region (data center location), then click CREATE.

You will wait less than 2 minutes for your site to come up.

We will now set up continuous deployment with Github. Click set up continuous deployment.

For the source, select GitHub.

Select configure required settings, where you will go back to choose the repo that you created at Github. In my case that was called blobcopy.

blobcopy has been selected.

You will need to log in to GitHub here to associate your GitHub repo.

Now it is time to write our Node.js code.

Writing some node.js code (Hello World)

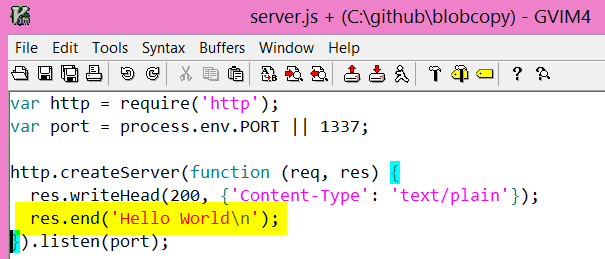

We will create a program called server.js. It's important to give it this name because that's what Azure Websites uses as the default file name.

We will write a simple "hello world" example to start.
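The original listing for this step is not reproduced in the text, so what follows is only a minimal sketch of what such a hello world server.js might look like. It assumes the same port convention used later in this article (process.env.PORT with 1337 as a local fallback). The handleRequest name is mine, chosen for illustration.

```javascript
// server.js - minimal "hello world" sketch (not the original listing)
var http = require('http');

// The hosting environment is expected to supply PORT; 1337 is a local fallback
var port = process.env.PORT || 1337;

// handleRequest is an illustrative name: answer every request with plain text
function handleRequest(req, res) {
  res.writeHead(200, { 'Content-Type': 'text/plain' });
  res.end('Hello World\n');
}

var server = http.createServer(handleRequest);
server.listen(port);
```

Running node server.js locally and browsing to http://localhost:1337/ should show the greeting before you push anything to the cloud.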

Save your file, return to the command line, and type in the following git commands. This is what copies our code from the local hard disk up to GitHub, and from there into the cloud where we are hosting our Azure website.

We will now return to the portal to copy the URL of our provisioned website.

We will now navigate to that URL and should see hello world. This validates that we have things set up correctly.

Web Client

Let's now write a web client that will submit form data to our node back-end. The point is to be able to pass in blob endpoints to node, which will then copy them to another destination.

Your form might look something like this. Notice I'm running this locally but you can choose to also run this in Azure, using the same techniques just discussed.


Source Code for SubmitForm.html

The style sheet can be found in the Appendix.

Take special note of the URL where the form fields are submitted.

https://myblobcopy.azurewebsites.net/

The original intent of this program is to copy pictures from Amazon over to Azure. That's why you see the source URL pointing to Amazon.

Although we will enter and support a username and password, for the purposes of this demo we are not going to worry about authentication and authorization.

One important thing to notice here is that the form action points to our provisioned website up in Azure, from the previous step.

Once again, the url is:

https://myblobcopy.azurewebsites.net/

<html>

<head>

<link href="style.css" rel="stylesheet">

</head>

<body>

<form action="https://myblobcopy.azurewebsites.net/" method="post">

<div id="title">Copy blob from Amazon to Azure</div>

<div class="row">

<label class="col1">Source URL (Amazon): </label>

<span class="col2"><input name="sourceurl" class="input" type="text" id="sourceurl" value="https://www.motorbikespecs.net/images/Suzuki/GSXR_1000_K3_03/GSXR_1000_K3_03_2.jpg" size="80" tabindex="1" /></span>

</div>

<div class="row">

<label class="col1">User Name: </label>

<span class="col2"><input name="username" class="input" type="text" id="username" value="c" size="80" tabindex="3" /></span>

</div>

<div class="row">

<label class="col1">Password: </label>

<span class="col2"><input name="password" class="input" type="password" id="password" value="d" size="80" tabindex="4" /></span>

</div>

<div align="center" class="submit">

<input type="submit" value="Perform Copy" alt="send" width="52" height="19" border="0" />

</div>

</form>

</body>

</html>

Return to the server.js Node code

Let's now finish writing our server back-end to perform the copy.

Submitting form fields and having Node.js receive them.
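To make the receiving side concrete, here is a small sketch of how a URL-encoded form body can be broken apart and how a blob name can be derived from the submitted URL. It uses only Node's built-in querystring, url, and path modules. The describeCopy helper and the example.com address are placeholders I introduced for illustration; the field names match the form above.

```javascript
var querystring = require('querystring');
var url = require('url');
var path = require('path');

// describeCopy is a hypothetical helper: it turns a raw POST body
// into the submitted fields plus the file name taken from the URL path
function describeCopy(body) {
  var fields = querystring.parse(body);       // decode the form fields
  var parsed = url.parse(fields.sourceurl);   // split the URL into its parts
  return {
    sourceurl: fields.sourceurl,
    username: fields.username,
    filename: path.basename(parsed.pathname)  // last segment of the path
  };
}

// A browser sends the form fields URL-encoded, roughly like this
var sampleBody = 'sourceurl=' +
  encodeURIComponent('https://example.com/images/photo.jpg') +
  '&username=c&password=d';

console.log(describeCopy(sampleBody));
```

The file name becomes the blob name in the full listing, so two source URLs that end in the same file name would overwrite each other in the container.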

We will need a number of Node modules, eight in all. Six of them (http, querystring, stream, util, url, and path) are built into Node. The other two (azure and request) are third-party packages that must be installed with npm.

At the top of our Node.js app (server.js) we load the modules like this:

var http = require('http');

var querystring = require('querystring');

var azure = require('azure');

var request = require('request');

var stream = require('stream');

var util = require('util');

var url = require("url");

var path = require("path");

The built-in modules need no installation, but azure and request do. The assumption is that you've installed Node and the Node Package Manager (npm) on your development computer. From your project folder, run:

npm install azure

npm install request

Here is the output of request as an example. Azure is a big one and will take the longest. These are packages or libraries used by our app for additional functionality. You can read about them here:

Copying an image to Azure Storage

Double-check that these edits are in your code.

var http = require('http');

var querystring = require('querystring');

var azure = require('azure');

var request = require('request');

var stream = require('stream');

var util = require('util');

var url = require("url");

var path = require("path");

var fs = require('fs'); // needed by the downloadImage helper below

var blobService = azure.createBlobService('brunostorage', 'YOU GET THIS FROM THE PORTAL UNDER YOUR STORAGE ACCOUNT 8OGSlD7wWHLQA+q3dXmNUOUtBW/liL3a1zc8DevrtlAKnI5wwzg==');

var containerName = 'ppt';

var port = process.env.PORT || 1337;

http.createServer(function(req, res) {

var body = "";

req.on('data', function(chunk) {

body += chunk;

});

req.on('end', function() {

console.log('POSTed: ' + body);

res.writeHead(200);

// Break apart submitted form data

var decodedbody = querystring.parse(body);

// Parse out the submitted URL

var parsed = url.parse(decodedbody.sourceurl);

// Get the filename

var filename = path.basename(parsed.pathname);

// Send text to the browser

var result = "Copying " + decodedbody.sourceurl + "<br/> to " +

filename;

res.end(result);

// Start copying to storage account

loadBase64Image(decodedbody.sourceurl, function (image, prefix) {

var fileBuffer = Buffer.from(image, 'base64');

blobService.createBlockBlobFromStream(containerName, filename,

new ReadableStreamBuffer(fileBuffer), fileBuffer.length,

{ contentTypeHeader: 'image/jpeg' }, function (error) {

//console.log('api result');

if (!error) {

console.log('ok');

}

else {

console.log(error);

}

});

});

});

var downloadImage = function(options, fileName) {

http.get(options, function(res) {

var imageData = '';

res.setEncoding('binary');

res.on('data', function(chunk) {

imageData += chunk;

});

res.on('end', function() {

fs.writeFile(fileName, imageData, 'binary', function(err) {

if (err) throw err;

console.log('File: ' + fileName + " written!");

});

});

});

};

var loadBase64Image = function (url, callback) {

// Required 'request' module

// Make request to our image url

request({ url: url, encoding: null }, function (err, res, body) {

if (!err && res.statusCode == 200) {

// Because encoding is set to null, request hands back the body as a Buffer

var base64prefix = 'data:' + res.headers['content-type'] + ';base64,'

, image = body.toString('base64');

if (typeof callback == 'function') {

callback(image, base64prefix);

}

} else {

throw new Error('Can not download image');

}

});

};

var ReadableStreamBuffer = function (fileBuffer) {

var that = this;

stream.Stream.call(this);

this.readable = true;

this.writable = false;

var frequency = 50;

var chunkSize = 1024;

var size = fileBuffer.length;

var position = 0;

var buffer = Buffer.alloc(fileBuffer.length);

fileBuffer.copy(buffer);

var sendData = function () {

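if (size === 0) {

// All data sent: stop the timer so "end" is emitted only once

clearInterval(sendData.interval);

delete sendData.interval;

that.emit("end");

return;

}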

var amount = Math.min(chunkSize, size);

var chunk = Buffer.alloc(amount);

buffer.copy(chunk, 0, position, position + amount);

position += amount;

size -= amount;

that.emit("data", chunk);

};

this.size = function () {

return size;

};

this.maxSize = function () {

return buffer.length;

};

this.pause = function () {

if (sendData) {

clearInterval(sendData.interval);

delete sendData.interval;

}

};

this.resume = function () {

if (sendData && !sendData.interval) {

sendData.interval = setInterval(sendData, frequency);

}

};

this.destroy = function () {

that.emit("end");

clearInterval(sendData.interval);

sendData = null;

that.readable = false;

that.emit("close");

};

this.setEncoding = function (_encoding) {

};

this.resume();

};

util.inherits(ReadableStreamBuffer, stream.Stream);

}).listen(port);
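Node's built-in stream.Readable base class can handle the chunking and pause/resume bookkeeping that ReadableStreamBuffer implements by hand. The following is a sketch of that alternative, not the code this article deploys. BufferReadable and its chunkSize parameter are names I made up, and whether it can be dropped into the createBlockBlobFromStream call unchanged is an assumption you would need to check.

```javascript
var stream = require('stream');
var util = require('util');

// BufferReadable (hypothetical name): exposes an in-memory Buffer as a readable stream
function BufferReadable(buffer, chunkSize) {
  stream.Readable.call(this);
  this._buffer = buffer;
  this._position = 0;
  this._chunkSize = chunkSize || 1024; // same default chunk size as the listing above
}
util.inherits(BufferReadable, stream.Readable);

// Called by the stream machinery whenever the consumer wants more data
BufferReadable.prototype._read = function () {
  if (this._position >= this._buffer.length) {
    this.push(null); // signals end of stream
    return;
  }
  var end = Math.min(this._position + this._chunkSize, this._buffer.length);
  this.push(this._buffer.slice(this._position, end));
  this._position = end;
};

// Usage: read a small buffer back in 5-byte chunks
var source = Buffer.from('hello blob storage');
var chunks = [];
var reader = new BufferReadable(source, 5);
reader.on('data', function (chunk) { chunks.push(chunk); });
reader.on('end', function () {
  console.log(Buffer.concat(chunks).toString());
});
```

The design difference is that the base class drives _read on demand, so there is no timer to start, stop, or forget to clear.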

Updating your repo

We are now ready to synchronize our local edits up to Azure Websites by issuing the following git commands.

git add . --all

git commit -m "new updates"

git push -u origin master

Make sure that your form is submitting to the correct endpoint. Yours will be different from the highlighted section below.

Run the submit form and it should look something like this. Notice that I hardcoded a URL that points to an existing photo. Ideally, this could be some photo sitting on Amazon that you wish to copy to Azure.

Once you click Perform Copy, the following screen should appear. It indicates that the file was successfully downloaded and written to your Azure Blob storage account.

You can download a simple utility to browse the blobs in your Azure storage account and validate that the copy occurred.

You can download it for free here:

https://azurestorageexplorer.codeplex.com/

Conclusion

There is room for improvement in this quick sample. One of the things you can do is make the process more asynchronous. The way to do that is to leverage Azure queues. All submitted URLs would go into a queue. From there, a background process would read from the queue and perform the actual operation of copying the images to Azure Blob storage. But the code provided above serves as a good starting point for building a more robust solution.
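The queue idea can be sketched without committing to any particular queue service. In the code below an in-memory array stands in for the Azure queue, so it shows only the shape of the design and not the Azure API. CopyQueue, processQueue, and copyFn are all hypothetical names.

```javascript
// In-memory stand-in for a real queue service (assumption: not the Azure API)
function CopyQueue() {
  this.items = [];
}
CopyQueue.prototype.enqueue = function (sourceUrl) {
  this.items.push(sourceUrl);
};
CopyQueue.prototype.dequeue = function () {
  return this.items.shift(); // undefined when the queue is empty
};

// Worker loop: take one URL at a time and hand it to copyFn(url, done).
// The next item is started only after copyFn reports completion.
function processQueue(queue, copyFn, onDrained) {
  var next = queue.dequeue();
  if (next === undefined) {
    onDrained();
    return;
  }
  copyFn(next, function () {
    processQueue(queue, copyFn, onDrained);
  });
}

// Usage: the web handler would only enqueue; a background worker does the copying
var queue = new CopyQueue();
queue.enqueue('https://example.com/images/one.jpg');
queue.enqueue('https://example.com/images/two.jpg');

processQueue(queue, function (sourceUrl, done) {
  console.log('copying ' + sourceUrl); // a real worker would stream to blob storage here
  setImmediate(done);                  // simulate an asynchronous copy finishing
}, function () {
  console.log('queue drained');
});
```

With this split, the form handler can respond as soon as the URL is queued, and a slow or failing download no longer ties up the web request.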

Appendix

Style Sheet - style.css

#linear_grad

{

height: 100px;

background: linear-gradient(#deefff, #96caff);

}

#button_grad

{

height: 40px;

width: 100px;

color: #ffffff;

font: bold 84% 'trebuchet ms',helvetica,sans-serif;

font-size: large;

background: linear-gradient(#aeaeae, #929292);

}

body {

background-color: #DBE8F9;

font: 11px/24px "Lucida Grande", "Trebuchet MS", Arial, Helvetica, sans-serif;

color: #5A698B;

}

#title {

width: 330px;

height: 26px;

color: #5A698B;

font: bold 11px/18px "Lucida Grande", "Trebuchet MS", Arial, Helvetica, sans-serif;

padding-top: 5px;

background: transparent url("images/bg_legend.gif") no-repeat;

text-transform: uppercase;

letter-spacing: 2px;

text-align: center;

}

form {

width: 335px;

}

.col1 {

text-align: right;

width: 135px;

height: 31px;

margin: 0;

float: left;

margin-right: 2px;

background: url(images/bg_label.gif) no-repeat;

}

.col2 {

width: 195px;

height: 31px;

display: block;

float: left;

margin: 0;

background: url(images/bg_textfield.gif) no-repeat;

}

.col2comment {

width: 195px;

height: 98px;

margin: 0;

display: block;

float: left;

background: url(images/bg_textarea.gif) no-repeat;

}

.col1comment {

text-align: right;

width: 135px;

height: 98px;

float: left;

display: block;

margin-right: 2px;

background: url(images/bg_label_comment.gif) no-repeat;

}

div.row {

clear: both;

width: 335px;

}

.submit {

height: 29px;

width: 330px;

background: url(images/bg_submit.gif) no-repeat;

padding-top: 5px;

clear: both;

}

.input {

background-color: #fff;

font: 11px/14px "Lucida Grande", "Trebuchet MS", Arial, Helvetica, sans-serif;

color: #5A698B;

margin: 4px 0 5px 8px;

padding: 1px;

border: 1px solid #8595B2;

}

.textarea {

border: 1px solid #8595B2;

background-color: #fff;

font: 11px/14px "Lucida Grande", "Trebuchet MS", Arial, Helvetica, sans-serif;

color: #5A698B;

margin: 4px 0 5px 8px;

}