Azure Speech Service: Example project for custom voices, transcribe videos & generate subtitles!

The Azure Developer Community blog has moved! Find this blog post over on our new blog at the Microsoft Tech Community:

https://techcommunity.microsoft.com/t5/Azure-Developer-Community-Blog/Azure-Speech-Service-Example-project-for-custom-voices/ba-p/338692

========================

Feed audio from friends, family or vids of your favourite celebrity.

Train a customised voice that sounds just like them.

Then make them say whatever you want!

[audio wav="https://msdnshared.blob.core.windows.net/media/2019/01/Martin-Fake-Voice-V2.wav"][/audio]

OK so this still sounds a little bit robotic. But the training data was less than 8 minutes of poor quality audio, with non-matching text.

With not much more effort, you can buff this up to sound very close to the real person!

To hear what Martin sounds like (and learn a thing or two) watch this excellent series of clips about Azure DevOps.

Why implement a custom voice?

This is a rapidly maturing technology with many real-world benefits. You can generate custom voices for chat bots, for games, and for interacting with home automation, including Cortana, Alexa or Siri skills. It offers very believable video narration and a more realistic voice for call handling, to name just a few popular uses. The transcription service is where I came in. It is driven by the latest AI from Azure Cognitive Services, provides almost real-time transcription and corrects itself as it goes.

Is it a lot of work to implement this new tech?

As an IT pro reading the docs, training a "Custom Voice" with Microsoft Speech Service sounds like a complicated and cumbersome task.

I also initially thought that preparing all the training data for a decent sounding voice would be too big a job.

But it wasn't, and I hope to dispel that myth for you as well!

The services are very forgiving and will produce recognisable results with under a minute's worth of training data!

The code I've uploaded to GitHub makes it even simpler.

What is the simplest way to implement this tech?

Instead of spending hours reciting carefully scripted phrases for training data, why not flip it around?

Take existing audio and first transcribe that to text.

Then feed the original audio and the auto-transcription BACK into the service!

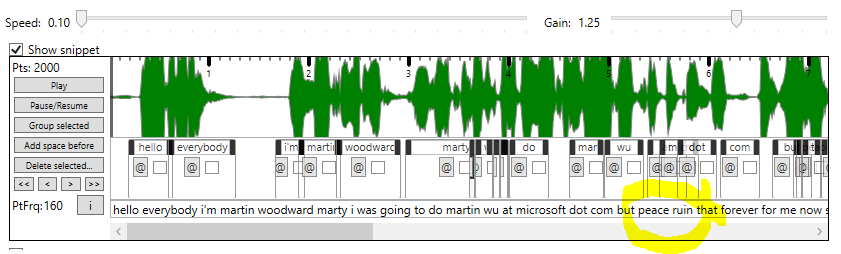

For even better results, spend as little as half an hour tidying up the transcription mistakes, then feed that curated/cleaned version back in.

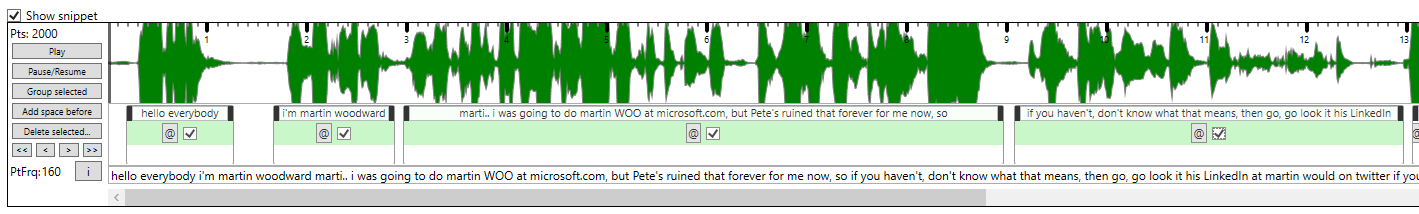
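As a rough illustration of pairing each audio clip with its cleaned-up text, here is a minimal Python sketch. It is not taken from the GitHub project (which is C#). It assumes the service accepts a plain-text transcript with one line per clip, in the form clip ID, a tab, then the text. That layout, the `write_transcript` name and the file names are assumptions for illustration, so check the current service docs before relying on them.

```python
import os


def write_transcript(pairs, out_path):
    """Write one 'clip_id<TAB>text' line per (wav_path, text) pair.

    The tab-separated layout is an assumption about the training data
    format, not something confirmed by the original post.
    Returns the number of lines written.
    """
    written = 0
    with open(out_path, "w", encoding="utf-8") as f:
        for wav_path, text in pairs:
            # Use the file name without its extension as the clip ID.
            clip_id = os.path.splitext(os.path.basename(wav_path))[0]
            # Collapse runs of whitespace left over from manual editing.
            clean = " ".join(text.split())
            if clean:  # skip clips whose transcript is empty
                f.write(f"{clip_id}\t{clean}\n")
                written += 1
    return written
```

Clips with an empty transcript are skipped, on the assumption that a clip with no matching text is not useful as training data.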

You'll see how easy that is with the demo tool I've posted to GitHub.

It also helps you split the audio into small chunks to feed into the service.

This visual tool will do everything required to prep the training data and you can learn and adapt it to your needs.
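To give a feel for the chunking step, here is a minimal Python sketch that cuts a WAV file into fixed-length pieces using only the standard library. It is not how the demo tool does it (the tool is a visual C# app and lets you pick the cut points). The `split_wav` name, the ten second default and the output naming are assumptions for illustration.

```python
import wave


def split_wav(src_path, out_prefix, chunk_seconds=10.0):
    """Split a PCM WAV file into consecutive chunks of at most chunk_seconds.

    Cuts at fixed intervals, so words may be split across chunks;
    a real tool would cut on pauses instead.
    Returns the list of chunk file paths, in order.
    """
    out_paths = []
    with wave.open(src_path, "rb") as src:
        params = src.getparams()
        frames_per_chunk = int(params.framerate * chunk_seconds)
        index = 0
        while True:
            frames = src.readframes(frames_per_chunk)
            if not frames:
                break  # end of the source audio
            out_path = f"{out_prefix}_{index:04d}.wav"
            with wave.open(out_path, "wb") as dst:
                # Copy channels, sample width and rate from the source;
                # the frame count in the header is corrected on close.
                dst.setparams(params)
                dst.writeframes(frames)
            out_paths.append(out_path)
            index += 1
    return out_paths
```

For example, `split_wav("martin.wav", "clips/martin", chunk_seconds=8)` would write `clips/martin_0000.wav`, `clips/martin_0001.wav` and so on, assuming the `clips` folder already exists.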

Read more and watch demos of this project HERE

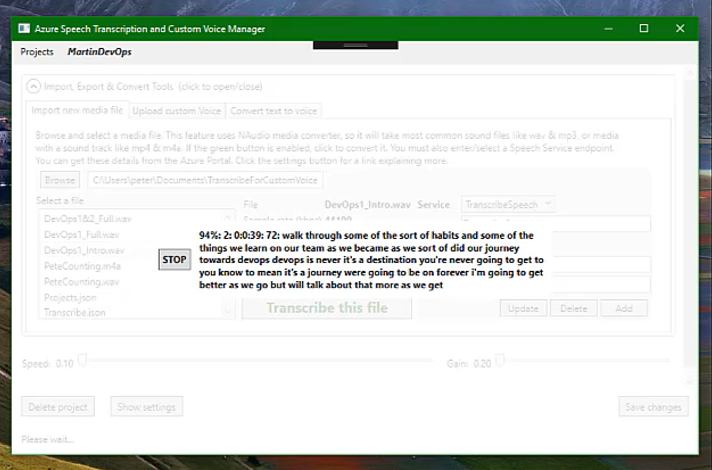

This project (which you can just download and run) will show you how to authenticate against the Speech Service, how to upload audio for transcription and how to request and download your final custom generated audio files.
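For the authentication part, here is a minimal Python sketch of requesting an access token over REST. It is not the project's own code. It assumes the regional `issueToken` endpoint and the `Ocp-Apim-Subscription-Key` header described in the Speech Service docs, and the region and key values shown are placeholders.

```python
import urllib.request


def build_token_request(region, subscription_key):
    """Build (but do not send) a POST request for a Speech Service token.

    The endpoint pattern and header name are assumed from the service
    documentation; verify them against the current docs.
    """
    url = f"https://{region}.api.cognitive.microsoft.com/sts/v1.0/issueToken"
    return urllib.request.Request(
        url,
        data=b"",  # empty body; the key travels in the header
        method="POST",
        headers={"Ocp-Apim-Subscription-Key": subscription_key},
    )


def fetch_token(region, subscription_key):
    """Send the request and return the token text (needs a valid key)."""
    request = build_token_request(region, subscription_key)
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")
```

Calling `fetch_token("westeurope", "<your-key>")` should return a short-lived token, which then goes into an `Authorization: Bearer` header on later calls.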

Three simple steps to implement

- Transcribe your audio with the Speech to Text service

- Upload your snippets of curated phrases and text into the Custom Voice service

- Tell it what you want it to say and download the result
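The last step, telling the voice what to say, usually means sending the text wrapped in SSML. Here is a minimal Python sketch that builds such a request body. It assumes the trained voice is selected by name in the SSML `voice` element. The `build_ssml` helper and the voice name in the example are hypothetical, not taken from the project.

```python
import xml.etree.ElementTree as ET

SSML_NS = "http://www.w3.org/2001/10/synthesis"


def build_ssml(text, voice_name, lang="en-US"):
    """Wrap text in a minimal SSML document that selects one voice.

    voice_name is whatever name your custom voice was given; the value
    used in the example below is a made-up placeholder.
    """
    speak = ET.Element(
        "speak",
        {"version": "1.0", "xmlns": SSML_NS, "xml:lang": lang},
    )
    voice = ET.SubElement(speak, "voice", {"name": voice_name})
    voice.text = text  # special characters such as & are escaped on output
    return ET.tostring(speak, encoding="unicode")


if __name__ == "__main__":
    print(build_ssml("Hello from my custom voice!", "MyCustomVoice"))
```

Building the XML with a library rather than string formatting means characters such as `&` and `<` in the text are escaped for you.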

You can download the code for this project from GitHub and have your own custom voice ready, in under an hour!

The initial results may sound a little "robotic", of course. But it's shocking to hear a familiar voice coming back at you. The final quality of your voice is limited only by the amount of time you have to tidy up the samples, perfect the acoustic library and work on the phonetic scripts. But you can still get very impressive results with very little work.

Why did I need to implement this tech?

Simply for better transcription results. I initially just wanted to provide viewers with a transcript of a video presentation that Martin Woodward (Microsoft Principal Group Program Manager for Azure DevOps) gave to my Azure Developer Meetup group last November. You can watch the full set of eight clips from that great session here.

The basic recognition code was copy and paste from the docs, and I had transcription results within just FIVE minutes! However, the sound quality and his slight accent meant the transcription had many errors. These would have taken me some time to correct manually.

So I decided instead to feed the "best bits" of the audio BACK into the service, generate an acoustic model and hopefully get a much improved transcription out. And it worked!

As a side-effect, I also generated a custom voice, which was trained to sound very similar to Martin! I was so impressed at how easy it was.

So over the festive break, I pulled together some demo code to show how it is implemented.

That code is now available in this C# GitHub repo.

You can download that now and try yourself. It's easy!

Within just 30-60 minutes, you can get impressive results!

I'm filling out a more detailed article on using the service HERE on TechNet Wiki.

Plus, I'll be documenting the project features in the GitHub project wiki, in case you just want to use the tool without digging into the code.

Pete Laker,

Azure MVP and Microsoft IT Implementer

This is part of a series of Azure developer blog posts, in my role as Microsoft IT Implementer.

I'm honoured to have been asked by Microsoft to demystify and demonstrate some of the latest technologies, which excite me - and I hope you too!

Comments

- Anonymous

January 28, 2019

Great stuff Pete, I am checking out the repo now!

- Anonymous

January 30, 2019

This is a good one! I love the whole approach of coding your way out of typing a transcript manually :)

- Anonymous

January 30, 2019

That's a pretty hilarious sample, Pete! Great job on this!

- Anonymous

January 31, 2019

Looking into repo, interesting one.