SCP.Net with HDInsight Linux Storm clusters

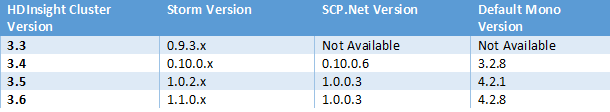

SCP.Net is now available on HDInsight Linux clusters 3.4 and above.

Versions

Note:

The HDInsight Storm team recommends HDI 3.5 or later clusters for users migrating their SCP.Net topologies from Windows to Linux.

HDInsight custom script actions can be used to update the Mono version on HDI clusters.

For more details, see: https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-hadoop-install-mono

Development of SCP.Net Topology

Pre-Steps

Azure Data Lake Tools for Visual Studio

HDInsight Tools for Visual Studio does not support submitting SCP.Net topologies to HDI Linux Storm clusters.

You need the latest Azure Data Lake Tools for Visual Studio to develop and submit SCP.Net topologies to HDI Linux Storm clusters.

The tools are available for Visual Studio 2013 and 2015.

Please note: Azure Data Lake Tools has compatibility issues with some other or older Visual Studio extensions.

One known issue is that no clusters are shown in the drop-down list for topology submission.

If you encounter this issue, uninstall all Visual Studio extensions, then reinstall Azure Data Lake Tools.

Java (JDK)

SCP.Net generates a zip file containing the topology DLLs and dependency jars.

It uses Java to create the zip if Java is found in the PATH, and uses .NET otherwise.

However, zip files generated with .NET are not compatible with Linux clusters, so Java must be available on the development machine.

Java installation requirements:

- The JDK (version 1.7 or later) must be installed on the machine, for example in C:\JDK1.7.

- The JAVA_HOME system variable must be set to the installation path (for example, C:\JDK1.7).

- The PATH system variable must include %JAVA_HOME%\bin.
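Because SCP.Net uses Java only when it can find it in the PATH, it can help to confirm that the JDK's bin folder is actually reachable there. The following is a minimal sketch, not SCP.Net's own lookup logic: JdkPathCheck and its methods are hypothetical names, and the sketch assumes the standard JDK layout in which jar.exe sits in the bin folder.

```csharp
using System;
using System.IO;

// Hypothetical helper: looks for jar.exe in the directories of a PATH-style
// string. This is an illustration, not the lookup code SCP.Net itself uses.
public static class JdkPathCheck
{
    // Returns the full path of the first matching file found in the
    // directories listed in pathValue, or null if none contains it.
    public static string FindOnPath(string pathValue, string fileName = "jar.exe")
    {
        if (string.IsNullOrEmpty(pathValue))
        {
            return null;
        }

        foreach (string entry in pathValue.Split(Path.PathSeparator))
        {
            // PATH entries may carry stray whitespace or surrounding quotes.
            string dir = entry.Trim().Trim('"');
            if (dir.Length == 0)
            {
                continue;
            }

            string candidate = Path.Combine(dir, fileName);
            if (File.Exists(candidate))
            {
                return candidate;
            }
        }

        return null;
    }

    // Checks the PATH seen by the current process.
    public static string FindJarOnCurrentPath()
    {
        return FindOnPath(Environment.GetEnvironmentVariable("PATH"));
    }
}
```

If JdkPathCheck.FindJarOnCurrentPath() returns null, no PATH entry contains jar.exe, which suggests the PATH requirement above is not met. A process only sees environment changes made before it started, so restart Visual Studio after editing the system variables.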

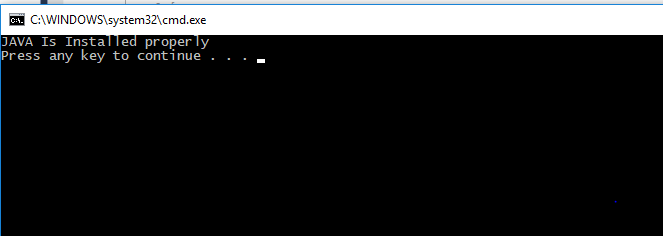

Verify JDK installation

To verify that the JDK is installed correctly on your machine, create a simple console application project in Visual Studio and paste the following code into the main program file.

```csharp
using System;
using System.IO;

namespace ConsoleApplication2
{
    class Program
    {
        static void Main(string[] args)
        {
            // JAVA_HOME should point at the JDK installation folder (for example C:\JDK1.7).
            string javaHome = Environment.GetEnvironmentVariable("JAVA_HOME");

            if (!string.IsNullOrEmpty(javaHome))
            {
                // jar.exe ships with the JDK but not with the JRE, so its
                // presence distinguishes a JDK install from a JRE-only one.
                string jarExe = Path.Combine(javaHome, "bin", "jar.exe");

                if (File.Exists(jarExe))
                {
                    Console.WriteLine("JAVA Is Installed properly");
                    return;
                }
                else
                {
                    Console.WriteLine("A valid JAVA JDK is not found. Looks like JRE is installed instead of JDK.");
                }
            }
            else
            {
                Console.WriteLine("A valid JAVA JDK is not found. JAVA_HOME environment variable is not set.");
            }
        }
    }
}
```

Run the program from within Visual Studio (Ctrl+F5). If the JDK is set up correctly, the program prints "JAVA Is Installed properly".

Creating an SCP.Net topology

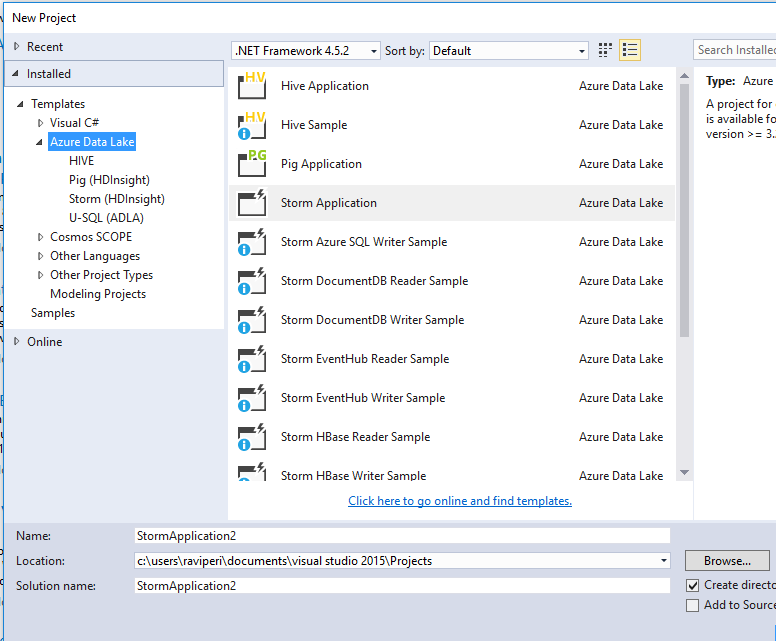

Open Visual Studio. The New Project dialog should include the Storm templates.

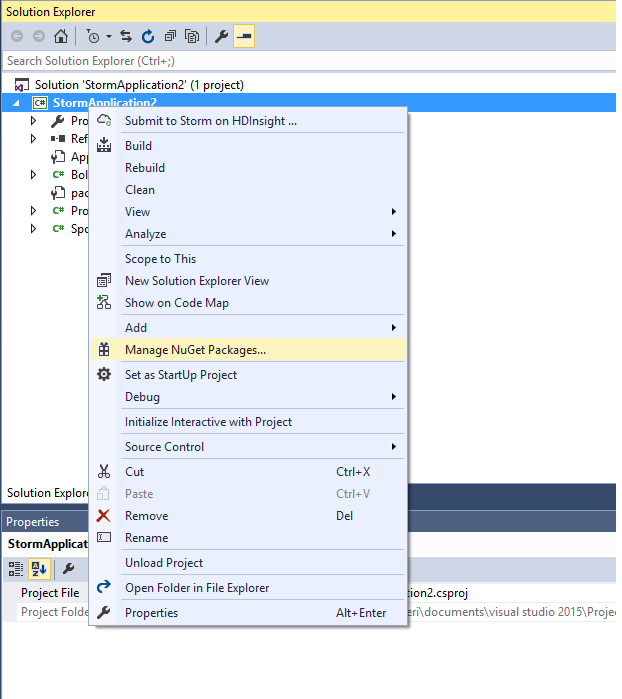

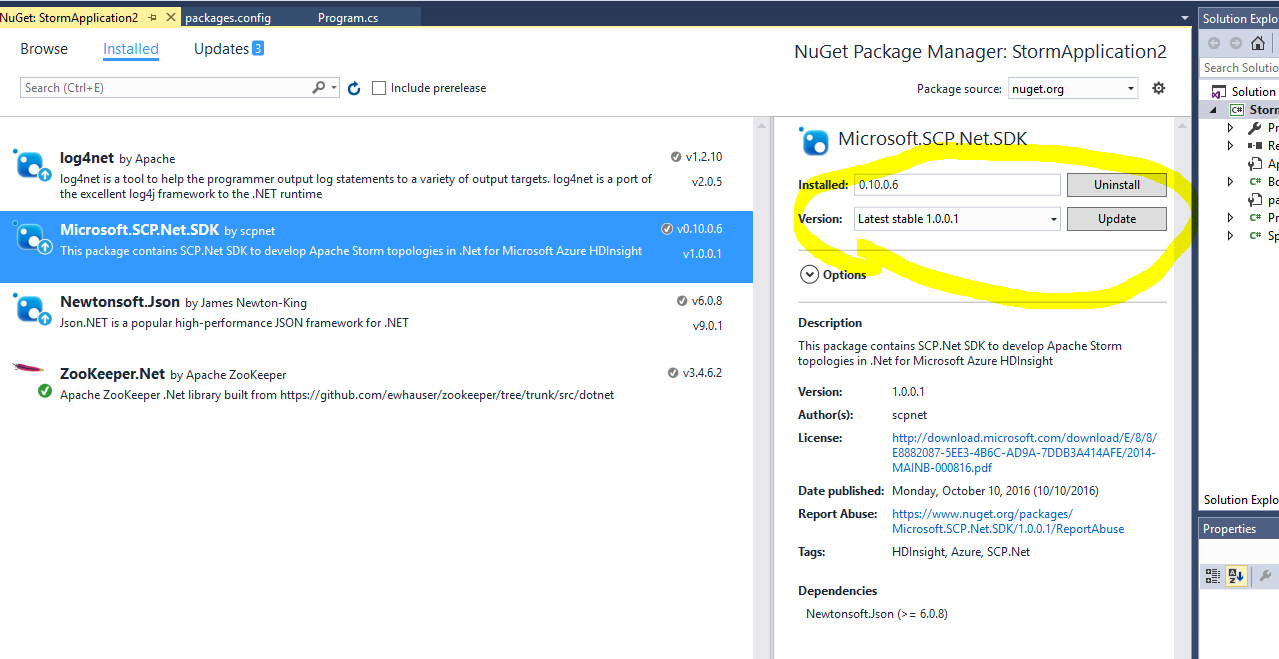

For HDI 3.5 and later clusters, the default SCP.Net NuGet package version needs to be updated to 1.0.0.3.

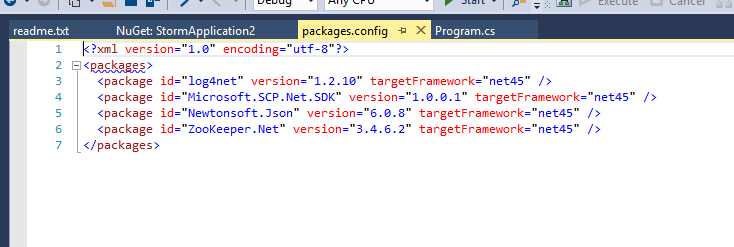

The updated packages.config should reference the SCP.Net SDK package at the new version. (Note: use the latest version of the SCP.Net SDK available in the NuGet repository.)
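The version requirement can also be checked mechanically. The sketch below parses packages.config with LINQ to XML and compares the SCP.Net SDK entry against 1.0.0.3. The package id Microsoft.SCP.Net.SDK is an assumption about how the SDK is named in the NuGet repository, so adjust it to match your own packages.config; ScpPackageCheck is a hypothetical helper name.

```csharp
using System;
using System.Linq;
using System.Xml.Linq;

// Hypothetical helper: checks that packages.config references SCP.Net SDK
// 1.0.0.3 or later, the minimum mentioned for HDI 3.5 and later clusters.
public static class ScpPackageCheck
{
    // Assumed NuGet package id; verify it against your packages.config.
    public const string PackageId = "Microsoft.SCP.Net.SDK";

    public static readonly Version MinimumForHdi35 = new Version(1, 0, 0, 3);

    // Returns the SCP.Net SDK version declared in the packages.config XML,
    // or null if the package is absent or its version is not numeric.
    public static Version FindScpVersion(string packagesConfigXml)
    {
        XElement package = XDocument.Parse(packagesConfigXml)
            .Descendants("package")
            .FirstOrDefault(p => string.Equals(
                (string)p.Attribute("id"), PackageId, StringComparison.OrdinalIgnoreCase));

        if (package == null)
        {
            return null;
        }

        Version version;
        return Version.TryParse((string)package.Attribute("version"), out version) ? version : null;
    }

    // True when the declared version meets the HDI 3.5 minimum.
    public static bool IsSupportedOnHdi35(string packagesConfigXml)
    {
        Version version = FindScpVersion(packagesConfigXml);
        return version != null && version >= MinimumForHdi35;
    }
}
```

Pass it the text of packages.config, for example ScpPackageCheck.IsSupportedOnHdi35(File.ReadAllText("packages.config")). Pre-release version strings are not numeric and are reported as null by this sketch.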

Submission of the Topology

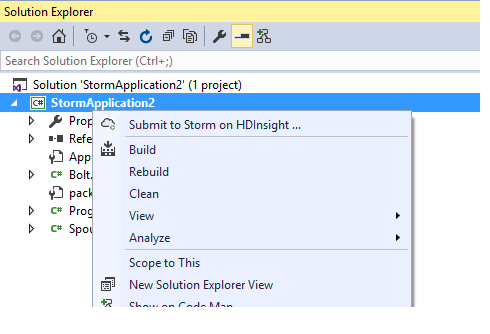

Right-click the project and select the Submit to Storm on HDInsight … option.

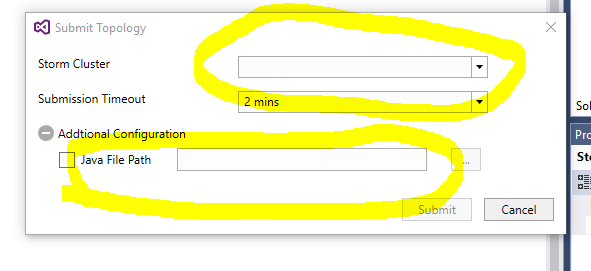

Choose the Storm Linux cluster from the drop-down list.

Java File Path

If your topology has Java jar dependencies, you can list their full paths as a semicolon-separated string, or use * to include all jars in a given directory.
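When a topology depends on several jars, the semicolon-separated value for the Java File Path field can be assembled programmatically instead of typed by hand. This is a minimal sketch with hypothetical names (JavaFilePathBuilder, FromJars, FromDirectory); it only builds the string, and the format the submission dialog accepts is the one described above.

```csharp
using System;
using System.IO;
using System.Linq;

// Hypothetical helper: builds a semicolon-separated list of jar paths.
public static class JavaFilePathBuilder
{
    // Joins explicit jar paths with ';' after expanding them to full paths.
    public static string FromJars(params string[] jarPaths)
    {
        return string.Join(";", jarPaths.Select(p => Path.GetFullPath(p)));
    }

    // Lists every .jar file in a directory, sorted for a stable result.
    public static string FromDirectory(string directory)
    {
        var jars = Directory.GetFiles(directory, "*.jar")
            // Guard against the Windows short-name match of "*.jar" on longer extensions.
            .Where(p => p.EndsWith(".jar", StringComparison.OrdinalIgnoreCase))
            .OrderBy(p => p, StringComparer.OrdinalIgnoreCase);
        return string.Join(";", jars);
    }
}
```

Listing jars explicitly makes it obvious which dependencies are submitted; when every jar in a folder is needed, the * form described above is shorter.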

SCP.Net Submission Logs

Topology submission operations are logged into /var/log/hdinsight-scpwebapi/hdinsight-scpwebapi.out on the active head node.

If the output from the tool is not helpful, check this file on the head node to identify the cause of a submission failure.

Investigating Submission failures

Topology submissions can fail for many reasons, including:

- The JDK is not installed or is not in the PATH.

- Required Java dependencies are not included.

- Java jar dependencies are incompatible. For example, Storm-eventhub-spouts-9.jar is incompatible with Storm 1.0.1, so submitting a topology with that dependency fails.

- Duplicate topology names.

The /var/log/hdinsight-scpwebapi/hdinsight-scpwebapi.out file on the active head node contains the error details.
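Because the .out file can be long, a small filter that keeps only exception lines and the first few lines after each one makes the cause quicker to spot. This sketch assumes you have already copied hdinsight-scpwebapi.out from the active head node to the machine running it; ScpWebApiLogScanner and its contextLines parameter are hypothetical, and matching on the word "Exception" is a simple heuristic, not a parser for the log format.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical helper: extracts exception lines from a local copy of
// hdinsight-scpwebapi.out, keeping a few following lines for context.
public static class ScpWebApiLogScanner
{
    // Returns every line containing "Exception", plus up to contextLines
    // lines after each one (typically the first stack frames).
    public static List<string> FindExceptionLines(IEnumerable<string> lines, int contextLines = 2)
    {
        var result = new List<string>();
        int remaining = 0;

        foreach (string line in lines)
        {
            if (line.IndexOf("Exception", StringComparison.Ordinal) >= 0)
            {
                result.Add(line);
                remaining = contextLines;
            }
            else if (remaining > 0)
            {
                result.Add(line);
                remaining--;
            }
        }

        return result;
    }

    // Streams the file line by line instead of loading it all at once.
    public static List<string> FindExceptionLinesInFile(string path, int contextLines = 2)
    {
        return FindExceptionLines(File.ReadLines(path), contextLines);
    }
}
```

For the FileNotFoundException scenario below, the first returned line is the "Exception in thread "main"" entry that names the missing target path.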

Common error scenarios:

FileNotFoundException

The hdinsight-scpwebapi.out file contains an error similar to the following:

Exception in thread "main" java.io.FileNotFoundException: /tmp/scpwebapi/da45b123-f3fc-44c4-9455-da8761b00881/TopologySubmit-Target/targets/

This error indicates that the uploaded topology package could not be compiled into a topology jar file for submission.

The most common cause of this failure is that the JDK is not installed or not in the PATH. Use the JDK verification step above to make sure the JDK is installed and available to Visual Studio.

The next most common cause is a missing Java dependency jar. Make sure that all required jar files are included.

Worker logs contain errors such as: Received C# STDERR: Exeption in SCPHost: System.IO.FileNotFoundException: Cannot find assembly: "/usr/hdp/2.5.4.0-121/storm/scp/apps/XYZ.dll"

This means that not all of the DLLs the topology requires are being included. Make sure that all third-party and custom libraries the topology needs are copied to the output folder.
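Before submitting, the output folder can be checked for DLLs the topology references but that were not copied there. This is a sketch under stated assumptions: OutputFolderCheck and its methods are hypothetical; it uses the standard Assembly.GetReferencedAssemblies reflection API and runs on the development machine; and the filter that skips mscorlib, netstandard, and System.* names is a rough heuristic for framework assemblies supplied by Mono on the cluster, which you may need to adjust (for example, for third-party libraries whose names start with "System").

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Reflection;

// Hypothetical helper: reports assemblies that are expected next to the
// topology DLL but are missing from the build output folder.
public static class OutputFolderCheck
{
    // Returns the names from requiredAssemblies with no "<name>.dll" file
    // in outputFolder. File names are case-sensitive on Linux.
    public static List<string> FindMissing(string outputFolder, IEnumerable<string> requiredAssemblies)
    {
        return requiredAssemblies
            .Where(name => !File.Exists(Path.Combine(outputFolder, name + ".dll")))
            .ToList();
    }

    // Lists the assemblies the topology DLL references, skipping names that
    // look like framework assemblies (rough heuristic, see the note above).
    public static IEnumerable<string> ReferencedNonFrameworkAssemblies(string topologyDllPath)
    {
        return Assembly.LoadFrom(topologyDllPath)
            .GetReferencedAssemblies()
            .Select(a => a.Name)
            .Where(n => n != "mscorlib"
                        && n != "netstandard"
                        && !n.StartsWith("System", StringComparison.Ordinal));
    }
}
```

For example, OutputFolderCheck.FindMissing(@"bin\Release", OutputFolderCheck.ReferencedNonFrameworkAssemblies(@"bin\Release\MyTopology.dll")) lists referenced assemblies with no matching DLL in the folder; MyTopology.dll is a placeholder name.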

Comments

- Anonymous

May 04, 2017

Thanks!! This is super helpful. I'm facing another issue while using the Azure Storage SDK. When I'm initializing the storage account, I'm setting the storage credentials using something like the following:

var storageCredentials = new StorageCredentials(_storageAccountName, _storageAccountKey);

But this is failing with the error:

2017-05-04 16:52:08.732 m.s.p.TaskHost [ERROR] Received C# STDERR: at Microsoft.WindowsAzure.Storage.Auth.StorageCredentials..ctor (System.String accountName, System.String keyValue) in :0

I believe this is an issue with cert permissions on Mono. When I tested on a 3.5 cluster late last year, it was resolved by running the custom script below:

#!/bin/bash
sudo apt-get install -y ubuntu-mono
sudo chmod -R 755 /etc/mono/certstore/certs/

But when I spin up a new cluster now, I'm unable to initialize the Azure Storage connection even after running the above custom script. Has anything changed? Please suggest how I can fix this. I'm using the following versions for Azure Storage and SCP.Net on an HDI 3.5 Linux cluster, with a hybrid topology.

- Anonymous

May 04, 2017

Kiran, please refer to https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-hadoop-install-mono to install the latest Mono version (4.8.1) on the cluster. The custom action script mentioned in the article also installs the certs package, which should prevent errors such as the one you see. If the issue persists, please contact the HDInsight support team so they can investigate and help unblock you.

- Anonymous