How do I track this in my Stored Procedure? (Using a custom trace event)

It’s been a while since I’ve posted an entry as I’ve been busy with moving to a different position that has me working dedicated to one customer here in my PFE role at MS. I’ve been keeping up with the topics I want to post about, so there are plenty more posts to come.

Today I want to cover an interesting feature of SQL Server that few people are familiar with – the ability to create your own custom trace event.

So first, when might you want to use a custom trace event? The obvious example is live debugging. Application code often adds debugging capabilities by using special builds or special modes enabled by registry keys or command line switches. Why not add this capability to stored procedures with a debug flag parameter that can be toggled on or off (off by default)? But why? A simple statement level trace can help identify the logic followed by a stored procedure, but sometimes you may need to go deeper to find what local variables are getting initialized or changed to. Going deeper, perhaps when taking a branch in a stored procedure, and you need to check something on the system to get more information about the environment or another table at run time that the stored procedure is executing in. Or, perhaps you’d like a very targeted security audit on one table implemented with a trigger before the new server level auditing was introduced in SQL Server 2008. There are many good reasons, so let’s take a look at how to set it up.

To accomplish this, we rely on a stored procedure aptly named: sp_trace_generateevent. Here is that syntax:

sp_trace_generateevent [ @eventid = ] event_id

[ , [ @userinfo= ] 'user_info' ]

[ , [ @userdata= ] user_data ]

You can find this at:

https://msdn.microsoft.com/en-us/library/ms177548.aspx

@EventID must be a value between 82 and 91 – giving you a possibility of 10 separate trace events. All trace events are identified by a unique trace event id number (which you can find in sys.trace_events). This range of 10 event ids are reserved for custom events. So let’s review an example. In a similar example I posted a few months back with a custom performance counter in SQL Server, we checked the level of the USERSTORE_TOKENPERM cache store to watch for it to grow to a large size as described in that post (and the kb article contained within that post). That post is here:

We could do something similar, but a performance monitor collection can only track data we can quantify numerically. What if we need more detailed information? As an example, let’s take a batch processing job that is having problems. It’s part of a larger business process that imports data into SQL Server using the BULK INSERT command. The job starts every night at a set time, and must only run if a data file exists. But when the job fails, we need to know why. Was the file there? Was the file 0KB? Was it too large and caused a performance problem? So let’s take a look at how we’d implement the custom event here. So in our nightly batch processing stored procedure, we have a piece of code that checks to see if the data file is there to decide whether we continue, check another location, use a WAITFOR to check later, or fail:

create table #t1 (NameOfFile nvarchar(256))

declare @FileExists bit;

declare @FileInfo nvarchar(256)

insert into #t1

exec xp_cmdshell 'dir c:\temp | find /i "testfile.dat"'

set @FileExists = (select COUNT(*) from #t1 where NameOfFile like '%testfile.dat%')

if @FileExists = 1

begin

<ADDITIONAL CODE HERE>

end

else

begin

<ADDITIONAL CODE HERE>

end

drop table #t1

NOTE: xp_cmdshell is good for our example here, but be sure you understand the security risks before using xp_cmdshell and take the necessary steps to secure the server and control it’s execution if you choose to use this in a production environment.

The <ADDITIONAL CODE HERE> section is where we’d implement the code to perform a BULK INSERT, or perform a WAITFOR and then try again.

To get diagnostic information in a production environment when the procedure or job has a problem, we need something to collect this data when the procedure is not being run interactively. A custom trace event fits nicely. We could toggle the extra trace information on and off with a @debug flag, but here is the code we add above the <ADDITIONAL CODE HERE> sections:

set @FileInfo = ISNULL((select * from #t1 where NameOfFile like '%testfile.dat%'), 'Data File Not Found');

exec sp_trace_generateevent @event_class = 82, @userinfo = @FileInfo

This code checks to verify that the file’s name is in our temporary table (a table variable would be a good choice as well). We select with a filter for the file name because we may have picked up some extra information from the file system. The nature of the file system and the fact we are checking only one directory/folder ensures that we will only find 1 result or nothing (NULL). If a null is found – meaning the file doesn’t exist – then the ISNULL function returns a friendlier “Data File Not Found”.

Assuming any @debug flag is toggled on, here are the final steps to capture this information:

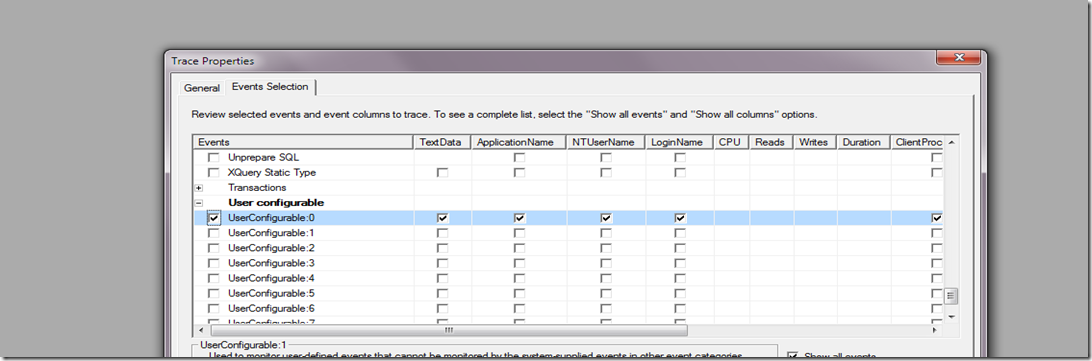

Start a trace and add the UserConfigurable:0 trace event. This maps to an @event_class of 82. UserConfigurable:1 would map to an @event_class of 83, and so on. So we capture this in our trace:

Now, we begin our trace and execute our sample. In the first example, we make sure the file does not exist by modifying this line:

insert into #t1

exec xp_cmdshell 'dir c:\temp | find /i "WRONGFILE.dat"'

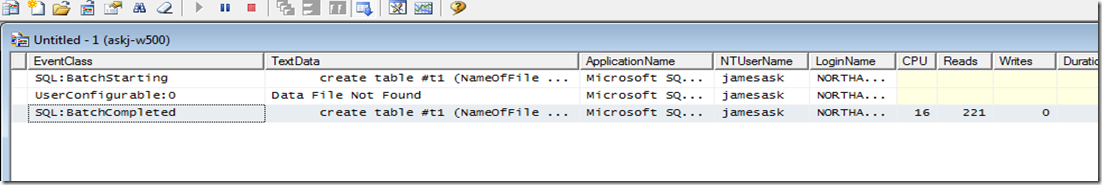

this gives us the expected event in Profiler with TextData of “Data File Not Found”:

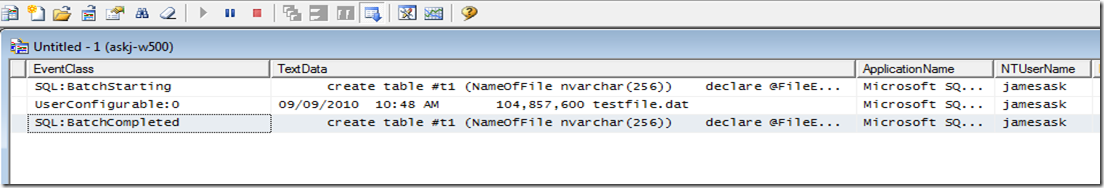

Then we correct the typo in our code to make sure the file is found and check our trace again:

Now we know the file existed, it was 104MB big, and was created at 10:48 am on Sept 9th. Very valuable information obtained during execution to help us troubleshoot our business process.

This is just one example of the usefulness of the custom trace events available in SQL Server. They are available in SQL Server 2000, SQL Server 2005, and SQL Server 2008.

-Jay