Supporting Advanced Data Access Scenarios in Tabular 1400 Models

In the context of this blog article, advanced data access scenarios refer to situations where data access functions inside M expressions cannot easily be represented through data source definitions in Tabular metadata. These scenarios are predominantly encountered when copying and pasting complex M expressions from an Excel workbook or a Power BI Desktop file into a Tabular 1400 model, or when importing Power BI Desktop files into Azure Analysis Services. Often, these complex M expressions require manual tweaking for processing of the Tabular 1400 model to succeed.

Background information

Before jumping into these advanced data access scenarios, let's review two basic concepts that differ between M expressions in Power BI Desktop files and in Tabular 1400 models: Data Source Representations (DSRs) and Credentials/Privacy Settings. Understanding the differences is a prerequisite to mastering the advanced data access scenarios covered later in this article.

In Power BI Desktop, you can write a very simplistic M expression like the following and import the resulting table of database objects into your data model:

    let
        Source = Sql.Database("server01", "AdventureWorksDW")
    in
        Source

Yet, in Tabular 1400, you first need to define a data source in the model, perhaps called "SQL/server01;AdventureWorksDW", and then write the M expression like this:

    let
        Source = #"SQL/server01;AdventureWorksDW"
    in
        Source

The result is the same. So why did Tabular 1400 models introduce a different way of providing the data source information? One of the main reasons is that explicit data source definitions facilitate deployments. Instead of editing 50 table expressions in a model as part of a production deployment, you can simply edit a single data source definition and all 50 table expressions access the correct production data source. Another key requirement is programmability. While SSDT Tabular is certainly a main tool for creating Tabular 1400 models, it's by no means the only one. In fact, many Tabular models are created and deployed in an automated way through scripts or ETL packages. The ability to define data sources in a model programmatically is very important.

Going one level deeper, check out the definition of the Sql.Database function in the Power Query (M) Formula Reference. Its signature appears in the Data Access Function column of the following table. Next, look at a structured data source definition for SQL Server in a Model.bim file of a Tabular 1400 model. The data source definition follows the format shown in the Data Source Definition column of the table. A side-by-side comparison shows you that all parameters of the Sql.Database function are also available in the data source definition. That's not surprising. However, the Mashup engine only works with M expressions. It doesn't know anything about Tabular 1400 metadata. During modeling and processing, the tools and the AS engine must translate the data access functions and their data source definitions back and forth with the help of the Mashup engine.

| Data Access Function | Data Source Definition |
| --- | --- |
| Sql.Database(server as text, database as text, optional options as nullable record) as table | {"type": "structured", "name": "name as text", "connectionDetails": {"protocol": "tds", "address": {"server": "server as text", "database": "database as text"}, "authentication": null, "query": "optional query as text"}, "options": {optional options as nullable record}, "credential": {...}} |
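To make the deployment and programmability argument concrete, here is a minimal Python sketch that builds a structured data source definition in the shape shown in the table above and then retargets it to a different server. The helper names and the server name prodserver01 are hypothetical, the credential is left as an empty placeholder because the article elides its contents, and the sketch only produces JSON; it does not deploy anything to a server.

```python
import copy
import json


def make_sql_data_source(name, server, database, options=None):
    """Build a structured data source definition shaped like the table above."""
    return {
        "type": "structured",
        "name": name,
        "connectionDetails": {
            "protocol": "tds",
            "address": {"server": server, "database": database},
            "authentication": None,
        },
        "options": options or {},
        # Placeholder only: the article does not show the credential contents.
        "credential": {},
    }


def retarget(data_source, server, database):
    """Return a copy that points at another server; the name stays unchanged,
    so M expressions that reference the data source by name need no edits."""
    clone = copy.deepcopy(data_source)
    clone["connectionDetails"]["address"] = {"server": server, "database": database}
    return clone


dev = make_sql_data_source(
    "SQL/server01;AdventureWorksDW", "server01", "AdventureWorksDW"
)
# "prodserver01" is a made-up production server name for illustration.
prod = retarget(dev, "prodserver01", "AdventureWorksDW")
print(json.dumps(prod, indent=2))
```

Because every table expression refers to the data source by name, changing the address in this one object is enough to redirect all of them.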

As a side note, if your M expressions in Power BI Desktop use data access functions that do not yet have a data source representation in Tabular 1400, then you cannot use these M expressions in your Tabular 1400 model. We first must enable/extend the corresponding connector. Our goal is to add a data source representation to every connector available in Power BI Desktop and then enable these connectors for Tabular 1400.

So far, things are relatively straightforward. But, it gets tricky when credential objects come into the picture. In the M expressions above, where are the credentials to access the data source? Power BI Desktop stores the credentials in a user-specific location on the local computer. This mechanism doesn't work for Tabular 1400 because these models are hosted on an Analysis Services server. The credentials are stored in the credential property of the structured data source in the model metadata (see the data source definition in the table above).

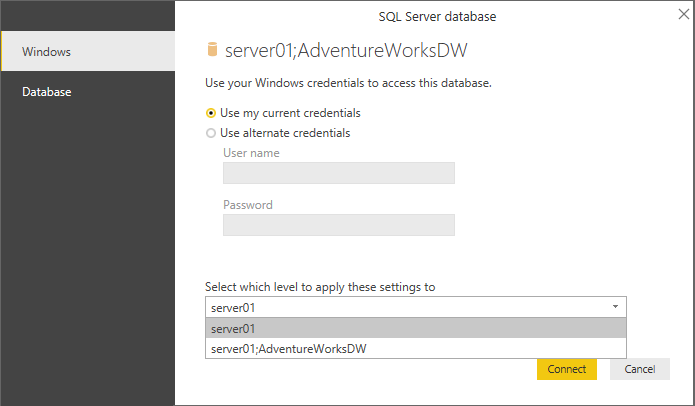

Given that Power BI Desktop stores the credentials outside of the .pbix file, how does the Mashup engine know which credentials to use for which data source? The crucial detail is the Mashup engine uses the data access parameters to establish the association between the data access functions and their credentials. In other words, if your Power BI Desktop file includes multiple Sql.Database("server01", "AdventureWorksDW") statements, because they all use the same address parameters, there is only one credential for all of them. You can notice this in Power BI Desktop as you are prompted only once for credentials to access a given data source, no matter how often you use the corresponding data access function. By default, Power BI Desktop associates a credential with the highest level of the address parameters, such as the SQL Server server name. But you can select lower levels if the data source supports it, such as server and database name, as shown in the following screenshot.

The tricky part is that the same association rules apply to Tabular 1400. Theoretically, each data source definition in a Tabular 1400 model has its own credential object. This is certainly the case at the Tabular metadata layer. But as mentioned earlier, the Mashup engine does not deal with Tabular metadata. The Mashup engine expects M expressions with resolved data access functions. The AS engine must translate the structured data source definitions accordingly. If two data source definitions had the same address parameters, it would no longer be possible to identify their corresponding credential objects uniquely at the M layer. To avoid credential ambiguity, there can be only one data source definition with a given set of address parameters in a Tabular 1400 model. Plans exist to eliminate this restriction in a future compatibility level, but in Tabular 1400 this limitation is important to keep in mind when dealing with advanced data access scenarios.
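The uniqueness rule can be checked before deployment. The following Python sketch groups data source definitions by protocol and address parameters and reports any group with more than one member. It assumes definitions shaped like the table above and compares values by exact match, which may be stricter or looser than what the Mashup engine actually does; the function names and the second data source name are made up for illustration.

```python
from collections import defaultdict


def address_key(data_source):
    """Key a data source by protocol plus address parameters (exact match)."""
    details = data_source["connectionDetails"]
    return (details["protocol"], tuple(sorted(details["address"].items())))


def find_address_conflicts(data_sources):
    """Return {address key: [names]} for address parameters used more than once."""
    names_by_address = defaultdict(list)
    for data_source in data_sources:
        names_by_address[address_key(data_source)].append(data_source["name"])
    return {
        key: names for key, names in names_by_address.items() if len(names) > 1
    }


def sql_source(name, server, database):
    """Minimal definition with only the fields this check needs."""
    return {
        "name": name,
        "connectionDetails": {
            "protocol": "tds",
            "address": {"server": server, "database": database},
        },
    }


sources = [
    sql_source("SQL/server01;AdventureWorksDW", "server01", "AdventureWorksDW"),
    # Same address parameters under a second, hypothetical name: a conflict.
    sql_source("SQL/server01;AdventureWorksDW 2", "server01", "AdventureWorksDW"),
    sql_source("SQL/server02;AdventureWorksDW", "server02", "AdventureWorksDW"),
]

conflicts = find_address_conflicts(sources)
for key, names in conflicts.items():
    print("Ambiguous credentials for", key, "->", names)
```

A check like this could run in a build script so that ambiguous definitions are caught before processing fails on the server.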

Now, let's jump into these advanced data access scenarios with increasing levels of complexity.

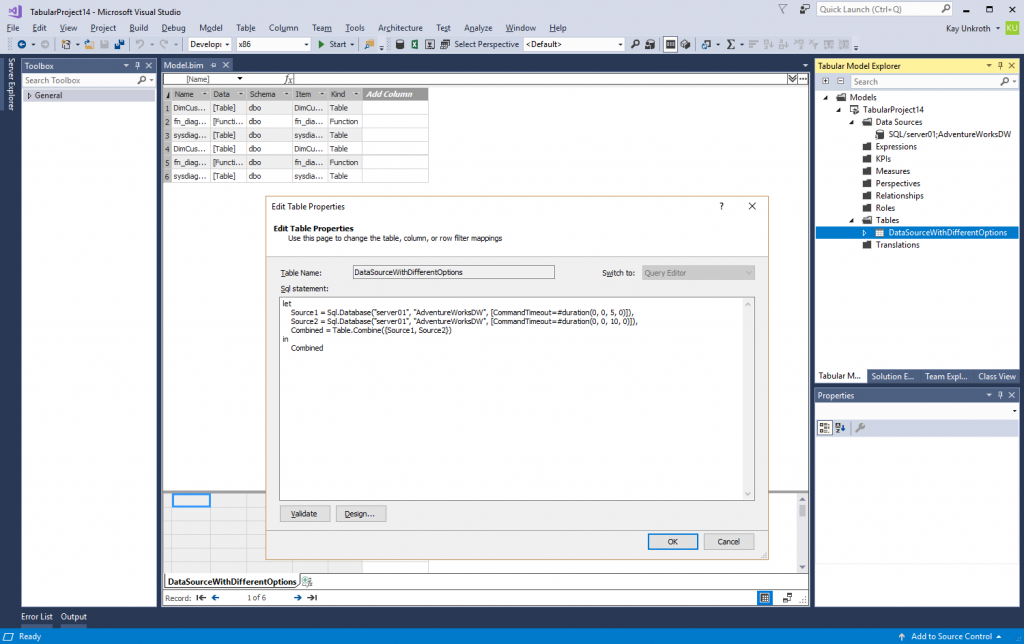

Accessing the same data source with different options

As a warmup, look at the following M expression. It's not very useful, but it illustrates the point. The expression includes two Sql.Database calls with the same address parameters, but with different options. As explained above, in Tabular 1400, you cannot create two separate data source objects for these two function calls. But if you can only create one, then you only get one options record (see the previous table). So what are you going to do with the second command timeout? Can you perhaps use the same command timeout and consolidate both into a single data source definition? What if not?

let

Source1 = Sql.Database("server01", "AdventureWorksDW", [CommandTimeout=#duration(0, 0, 5, 0)]),

Source2 = Sql.Database("server01", "AdventureWorksDW", [CommandTimeout=#duration(0, 0, 10, 0)]),

Combined = Table.Combine({Source1, Source2})

in

Combined

The solution is not intuitive. Perhaps even misleading. It is fragile, and it breaks dependency analysis in the tools. It also breaks the programmability contract because you can no longer simply update the data source definition and expect your M expressions to pick up the changes. It clearly needs a better implementation in a future compatibility level. But for now, you can simply define a data source with the address parameters shown above and then use the M expression unmodified in Tabular 1400. The credential object is magically applied to all corresponding data access functions that use the same parameters across all M expressions in the model. That's how the Mashup engine works, as the following screenshot illustrates.

Note: This workaround of using a data source merely as a holder of a credential object is not recommended and should be considered a last-resort option. If possible, create a common set of options for all data access calls to the same source and use a single data source object to replace the data access functions. Another option might be to register the same server with multiple names in DNS and then use a different server name in each data source definition.

Accessing the same data source with different native queries

Perhaps you can consolidate your data access options and keep things straightforward in your Tabular 1400 model. But what if your data access functions use different native queries, as in the following example?

    let
        Source1 = Sql.Database("server01", "AdventureWorksDW", [Query="SELECT * FROM dimCustomer WHERE LastName = 'Walker'"]),
        Source2 = Sql.Database("server01", "AdventureWorksDW", [Query="SELECT * FROM dimCustomer WHERE LastName = 'Jenkins'"]),
        Combined = Table.Combine({Source1, Source2})
    in
        Combined

Of course, one option is to rewrite the first native query to deliver the combined result so that you can eliminate the second function call. Yet native query rewrites are not required if you modify the M expression to use the Value.NativeQuery function, as in the following expression. Now there is only a single Sql.Database function call, which can easily be replaced with a data source definition in Tabular 1400.

    let
        Source = Sql.Database("server01", "AdventureWorksDW"),
        Table1 = Value.NativeQuery(Source, "SELECT * FROM dimCustomer WHERE LastName = 'Walker'"),
        Table2 = Value.NativeQuery(Source, "SELECT * FROM dimCustomer WHERE LastName = 'Jenkins'"),
        Combined = Table.Combine({Table1, Table2})
    in
        Combined

Note: Even if you consolidated the queries, as in SELECT * FROM dimCustomer WHERE LastName = 'Walker' OR LastName = 'Jenkins', avoid putting this native query on the query parameter of the data source definition. The query parameter exists for full compatibility between data access functions and data source definitions. But it shouldn't be used because it narrows the data source down to a particular query, and you could not import any other data from that source. As explained earlier, you cannot define a second data source object with the same address parameters in Tabular 1400. So, define the data source in the broadest terms and then use the Value.NativeQuery function to submit a native query.

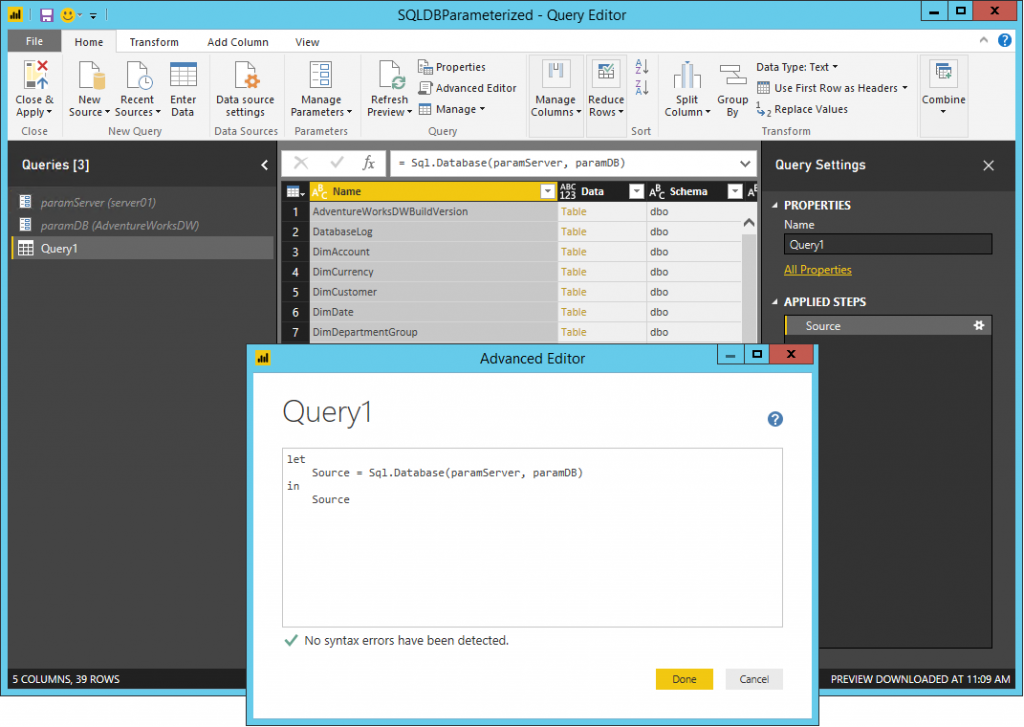

Handling parameterized data access functions

The next level of challenges revolves around the dynamic definition data source parameters. This technique is occasionally used in Power BI Desktop files to maintain the address parameters for multiple data access function calls centrally. The following screenshot shows an example.

Fortunately, the concept of M-based address parameter definitions is comparable to the concept of a data source definition in Tabular 1400. In most cases, you should be able to define the Tabular 1400 data source as usual by using the parameter values directly. Then replace the data access function calls in your M expressions with a reference to the data source, delete the M-based parameter definitions, and the job is done. The result is a common Tabular 1400 M expression.

    let
        Source = #"SQL/server01;AdventureWorksDW"
    in
        Source

Of course, this technique only works for trivial, single-valued parameter definitions. For more complex scenarios, well, read on.

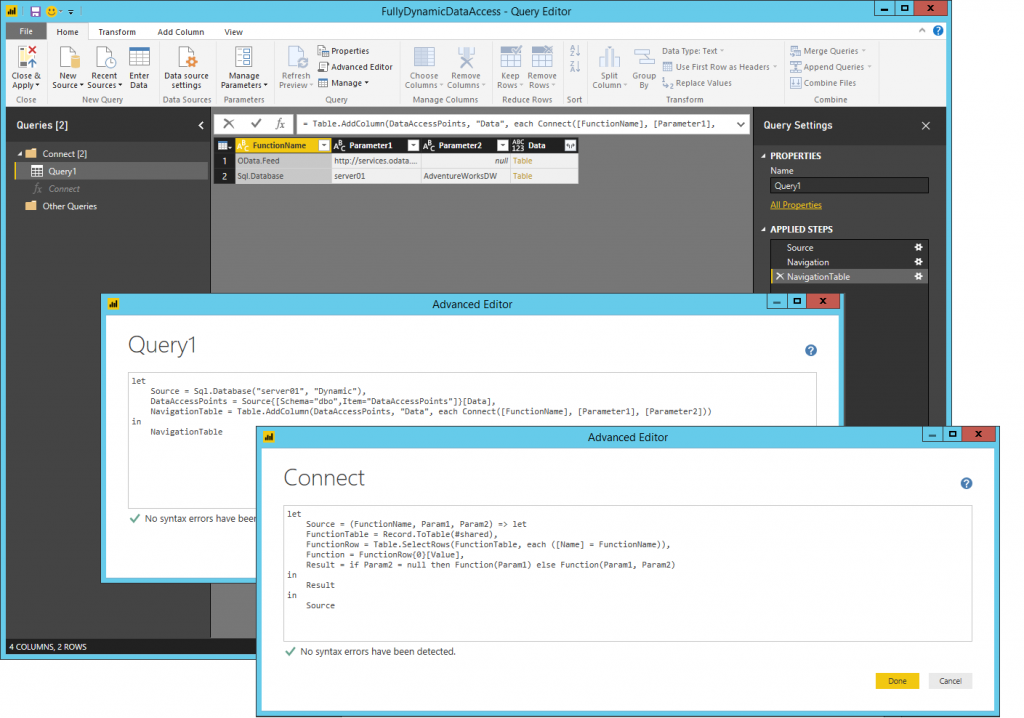

Dealing with Dynamic Data Access Functions

The ultimate challenge in the advanced data access scenarios is the fully dynamic implementation of a data access function call. There are various flavors. Let's analyze the following simple example first.

let

#"Dynamic Function" = Sql.Database,

Source = #"Dynamic Function"("server01", "AdventureWorksDW")

in

Source

Clearly, this M expression is accessing a SQL Server database called AdventureWorksDW on server01. You can create a data source definition for this database in Tabular 1400 and replace these lines with a reference to that data source definition, as shown in the sample in the previous section above. This is easy because it wasn't really a dynamic data source, yet. Here's a fully dynamic example.

let

Source = Sql.Database("server01", "Dynamic"),

DataAccessPoints = Source{[Schema="dbo",Item="DataAccessPoints"]}[Data],

NavigationTable = Table.AddColumn(DataAccessPoints, "Data", each Connect([FunctionName], [Parameter1], [Parameter2]))

in

NavigationTable

This M expression retrieves all rows from a DataAccessPoints table in a SQL Server database called Dynamic. It then passes the column values of each row to a custom function called Connect, which then connects to the specified data source. The Connect function is based on the following M expression.

let

Source = (FunctionName, Param1, Param2) => let

FunctionTable = Record.ToTable(#shared),

FunctionRow = Table.SelectRows(FunctionTable, each ([Name] = FunctionName)),

Function = FunctionRow{0}[Value],

Result = if Param2 = null then Function(Param1) else Function(Param1, Param2)

in

Result

in

Source

Not only are the address parameters (Param1 and Param2) dynamically assigned, but the name of the data access function itself is also passed in as a parameter (FunctionName). So, unless you know the contents of the DataAccessPoints table, you cannot even determine what data sources this M expression accesses! You must evaluate the expressions against the actual data to know what credentials you need to supply, and you must define privacy settings. In this example, the following screenshot reveals that the expressions connect to an OData feed as well as a SQL Server database, but it could really be any supported data source type.

So, if you wanted to use such a dynamic construct, you would have to create the corresponding data source objects with their credentials in your Tabular 1400 model. Power BI Desktop can prompt you for the missing credentials if you add a new entry to the DataAccessPoints table, but Analysis Services cannot because there is no interactive user on a server, and processing would fail. You must add a new matching data source definition with the missing credentials and privacy settings upfront, which somewhat defeats the purpose of a fully dynamic data source definition.
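Since the server cannot prompt for credentials, one way to catch a missing data source before processing is a pre-flight check outside the model. The Python sketch below compares the rows of a DataAccessPoints-style table against the data source definitions in the model and lists the rows that have no matching definition. The mapping from function name to protocol and address fields is hypothetical: only the Sql.Database entry follows the table earlier in this article, the OData.Feed entry and the example URL are assumptions, and all function names are made up for illustration.

```python
# Hypothetical mapping from an M data access function to the protocol and
# address fields of its data source definition. Only the Sql.Database entry
# is taken from the article; the OData.Feed entry is an assumption.
ADDRESS_FIELDS = {
    "Sql.Database": ("tds", ("server", "database")),
    "OData.Feed": ("odata", ("url",)),
}


def address_key(protocol, address):
    """Comparable key for a protocol plus its address parameters."""
    return (protocol, tuple(sorted(address.items())))


def required_key(function_name, param1, param2):
    """Key of the data source a row needs, or None for an unknown function."""
    if function_name not in ADDRESS_FIELDS:
        return None
    protocol, fields = ADDRESS_FIELDS[function_name]
    # zip stops at the shorter sequence, so one-field functions ignore param2.
    return address_key(protocol, dict(zip(fields, (param1, param2))))


def missing_data_sources(rows, data_sources):
    """Return the rows that no data source definition in the model covers."""
    defined = {
        address_key(
            ds["connectionDetails"]["protocol"],
            ds["connectionDetails"]["address"],
        )
        for ds in data_sources
    }
    return [row for row in rows if required_key(*row) not in defined]


model_sources = [
    {
        "name": "SQL/server01;AdventureWorksDW",
        "connectionDetails": {
            "protocol": "tds",
            "address": {"server": "server01", "database": "AdventureWorksDW"},
        },
    }
]

# Rows as (FunctionName, Parameter1, Parameter2); the URL is a placeholder.
rows = [
    ("Sql.Database", "server01", "AdventureWorksDW"),
    ("OData.Feed", "https://example.com/odata", None),
]

missing = missing_data_sources(rows, model_sources)
for row in missing:
    print("No data source definition for", row)
```

Rows with an unrecognized function name are reported as missing, which errs on the side of flagging anything the model might not have credentials for.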

Looking to the future

Perhaps you are wondering at this point why we didn't provide more flexible support for credential handling in Tabular 1400. One of the main reasons is that the footprint of the modern Get Data experience on the Mashup engine is already significant. It wasn't justifiable to take a sledgehammer approach at the Mashup layer, especially when it concerns security features used across several Microsoft technologies, including Power BI.

Fully dynamic scenarios are certainly interesting and fun, but they are corner cases. The more common advanced data access scenarios can be handled with moderate effort in Tabular 1400. And a future compatibility level is going to remove the limitation of a single data source definition per set of address parameters, which requires some replumbing deep in the Mashup engine. On the other hand, how Tabular models are going to support fully dynamic data source definitions in future compatibility levels hasn't yet been decided. One idea is to move credential storage out of the Tabular metadata. Another is to introduce new metadata objects that can be associated with data access functions through their address parameters. Perhaps another is to invent a form of processing handshake. And there may be other options. If you have a great idea, please post a comment or send it our way via ssasprev at microsoft.com, or use any other available communication channels such as UserVoice or MSDN forums.

That's it for this excursion into handling advanced data access scenarios when moving M expressions from Power BI Desktop to a Tabular 1400 model. One of the next articles will show you how to use legacy provider data sources and native query partitions together with the new structured data sources and M partitions in a Tabular 1400 model. Stay tuned for more Get Data coverage on the Analysis Services Team Blog!

Comments

- Anonymous

August 28, 2017

Great article Kay! Another useful case for Value.NativeQuery I found is when you need to automate partition creation with a query that requires a couple of joins and parameters. For example:

    let
        query = Value.NativeQuery(
            #"SQL/eur database windows net;EUR",
            "select s.* from fact_sale s join entity en ON en.mgmt_entity_code = s.mgmt_entity_code where s.cal_mnth=CAST(@cal_mnth AS INT) AND en.buss_region_code = @region ",
            [cal_mnth=201609, region="EUR"])
    in
        query