Recovery On Secondary Lagging – Shared Redo Target

There are various reasons a secondary may not be keeping up (slow network, blocked redo worker by a long running query on a readable secondary, ….) This post is going to focus on the shared redo target.

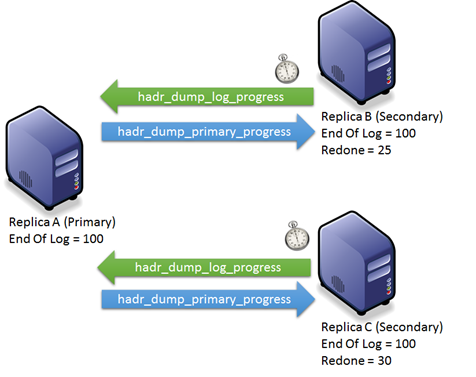

You may not be aware but redo on a secondary involves a handshake with the primary. You can watch this handshake take place using the following XEvents: hadr_dump_primary_progress and hadr_dump_log_progress

This handshake is used so all secondary(s) redo points, advance recovery near the same point in time. The idea is that no matter which readable secondary you connect to the version of the data should be close to, if not exactly the same as any other readable secondary at the same point in time.

Note: This is distinctly different than the hardened log records/blocks. Hardening happens as soon as possible in order to provide the HA/DR capabilities. A redo/recovery lag could impact your time to return to operations (after failover) but the redo lag will not impact the activity associated with mirroring of the log from the primary.

I think a walkthrough of the handshake is the best way for me to help you understand the flow. This is a fairly complicated process so I have taken several liberties to explain the behavior.

As each replica connects to the primary is begins the ‘StartScan’ activity. On the primary this starts a log block send worker and on the secondary it starts a timer task. One of the fundamental activities of the secondary timer task is to send a hadr_dump_log_progress (log progress) message to the primary at near 1 second intervals (think of this like a heartbeat). This is important as you will see this drives the advancement of the secondary redo activities.

Each of the replicas updates the primary with the log progress message. You can see the core of this information using DMV queries on the primary replica (sys.dm_hadr_database_replica_states and sys.dm_hadr_database_replica_cluster_states).

The primary uses the global information about the replicas to establish the redo target and respond to the hadr_dump_log_progress message(s) with the primary progress message. Looking at the secondary(s); log harden locations, type of secondary (sync or async), redo locations, and other data the primary determines the common recovery LSN for the secondary(s). The primary then sends the hadr_dump_primary_progress message the secondary(s).

When the hadr_dump_primary_progress arrives at the secondary the local redo target is updated and the redo worker is signaled to advance redo to the new target. Along with the previous XEvents and DMVs mentioned you can use the following XEvents to watch the redo target advancement:

- hadr_db_commit_mgr_update_harden

- hadr_send_harden_lsn_message

- redo_*

In the diagram the I have advanced the redo on Replica C to 30. Each of the replicas have the same end of log, so all log hardening has taken place (no data loss would occur in this example.)

My first reaction to this is why is the redo target at 30 and not 100?

Here are the basics steps for how you can get to this situation. – (Pseudocode)

- Replica C was online and synchronized with the primary when the primary only had and end of log at 30.

- Replica B came online and started synchronization but redo has become blocked on a read query (May not be a blocking situation, anything that could cause redo to lag can occur at this point.)

- More activity occurs on the primary and new log records advance the end of log on the primary to 100.

- The log is scanned on primary and sent to the secondary(s) and hardened – All secondary(s) have the log through point 100.

- The progress messages continue to be exchanged on the timer interval.

The primary can tell that Replica C made it to a redo point of 30 and would normally tell Replica C to advance to 100. However, when Replica B came into the picture and got stuck at the 25 mark the primary understands there is an issue. In this case the primary may elect to set a redo target of 30, causing Replica C to pause redo/recovery until Replica B advances.

If you suspect a redo is not advancing as expected first compare the primary's end of log LSN to the secondary’s hardened LSN and redo/recovery LSN values. If the secondary’s hardened LSN is near the redo LSN but behind the primary this is a sign of a flow control on the primary or slow network between the primary and secondary. Go to the primary and query the wait status DMV:

select * from sys.dm_os_wait_stats where wait_type like '%FLOW%'

and look at the associated flow control and network performance counters.

If the secondary’s hardened LSN is significantly ahead of the redo activity make sure redo is not blocked and progressing, could simply be a blocking situation of redo. If redo is not blocked and the wait type is “REDO_THREAD_PENDING_WORK” this is a sign that the redo target may be depressed because of another replica. The wait type is accumulated when the redo worker is waiting to be signaled that there is work to be done.

When the primary progress message arrives and the redo target is advanced the worker is signaled out of this wait and the recovery work continues. Once the redo attains the target the worker goes back to waiting to be signaled. You can see that redo has achieved the target watching the wait type in sys.dm_exec_requests and the XEvent redo_caught_up event.

DIAGNOSTICS USE ONLY: (always use with caution and Microsoft guidance – not recommended for long term use) There is a trace flag (-T9559) that when enabled on the secondary ignores the redo target provided from the primary progress message and always set the redo target at the Max LSN value.

Bob Dorr - Principal SQL Server Escalation Engineer

Comments

- Anonymous

March 31, 2014

If the config contains only a primary db and a single AlwaysOn AG replica db (A and B in the diagram), is sole secondary redo target based on that secondary's transmitted-by-timer hadr_dump_primary_progress, or does it short-circuit in this minimal config to avoid the additional overhead?If T1448 affects AlwaysOn AG replicas, what is the relationship between the diagnostics-only T9559 and seemingly similar T1448?support.microsoft.com/.../2934934 - Anonymous

April 13, 2014

Great article - just a question regarding our environmentWe are running a two node Cluster with SQLServer 2012 SP1We receive REDO_THREAD_PENDING_WORK as the main wait-type in our Always-On configuration.We run however only 1 reading secondary so we conclude that we have another issue at our site.One interpretation was that – having the secondary in reading mode and therefore in Snapshot Isolation – the versioning in the secondary and the necessary update of the primary with the versioning info might be the reason for that? - Anonymous

March 20, 2015

Good afternoon,I have two replicas AlwaysOn one synchronous and asynchronous another. In the secondary synchronous mostly have the wait "REDO_THREAD_PENDING_WORK". Changes are taking more than 1.5 seconds to be visible in the secondary.How can I optimize this?As the trace flag T9559 what really makes?Thank you and excellent article.