Create and configure all the resources for Azure AI model inference

Important

Items marked (preview) in this article are currently in public preview. This preview is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

In this article, you learn how to create the resources required to use Azure AI model inference and consume flagship models from Azure AI model catalog.

Understand the resources

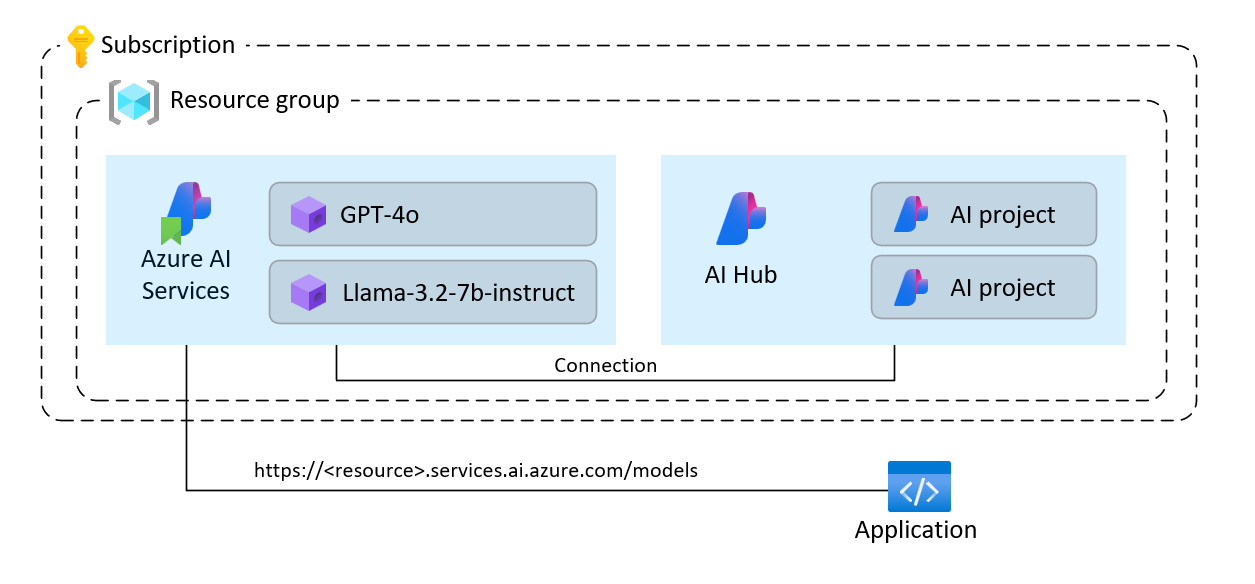

Azure AI model inference is a capability in Azure AI Services resources in Azure. You can create model deployments under the resource to consume their predictions. You can also connect the resource to Azure AI Hubs and Projects in Azure AI Foundry to create intelligent applications if needed. The following picture shows the high level architecture.

Azure AI Services resources don't require AI projects or AI hubs to operate, and you can create them on their own to consume flagship models from your applications. However, deploying an Azure AI project and hub makes additional capabilities available, such as the playground and agents.

The tutorial helps you create:

- An Azure AI Services resource.

- A model deployment for each of the models supported with pay-as-you-go.

- (Optionally) An Azure AI project and hub.

- (Optionally) A connection between the hub and the models in Azure AI Services.

Prerequisites

To complete this article, you need:

- An Azure subscription. If you're using GitHub Models, you can upgrade your experience and create an Azure subscription in the process. Read Upgrade from GitHub Models to Azure AI model inference if that's your case.

Important

Azure AI Foundry portal uses projects and hubs to create Azure AI Services accounts and configure Azure AI model inference. If you don't want to use hubs and projects, you can create the resources with the Azure CLI or Bicep, or create the Azure AI Services resource in the Azure portal.

Create the resources

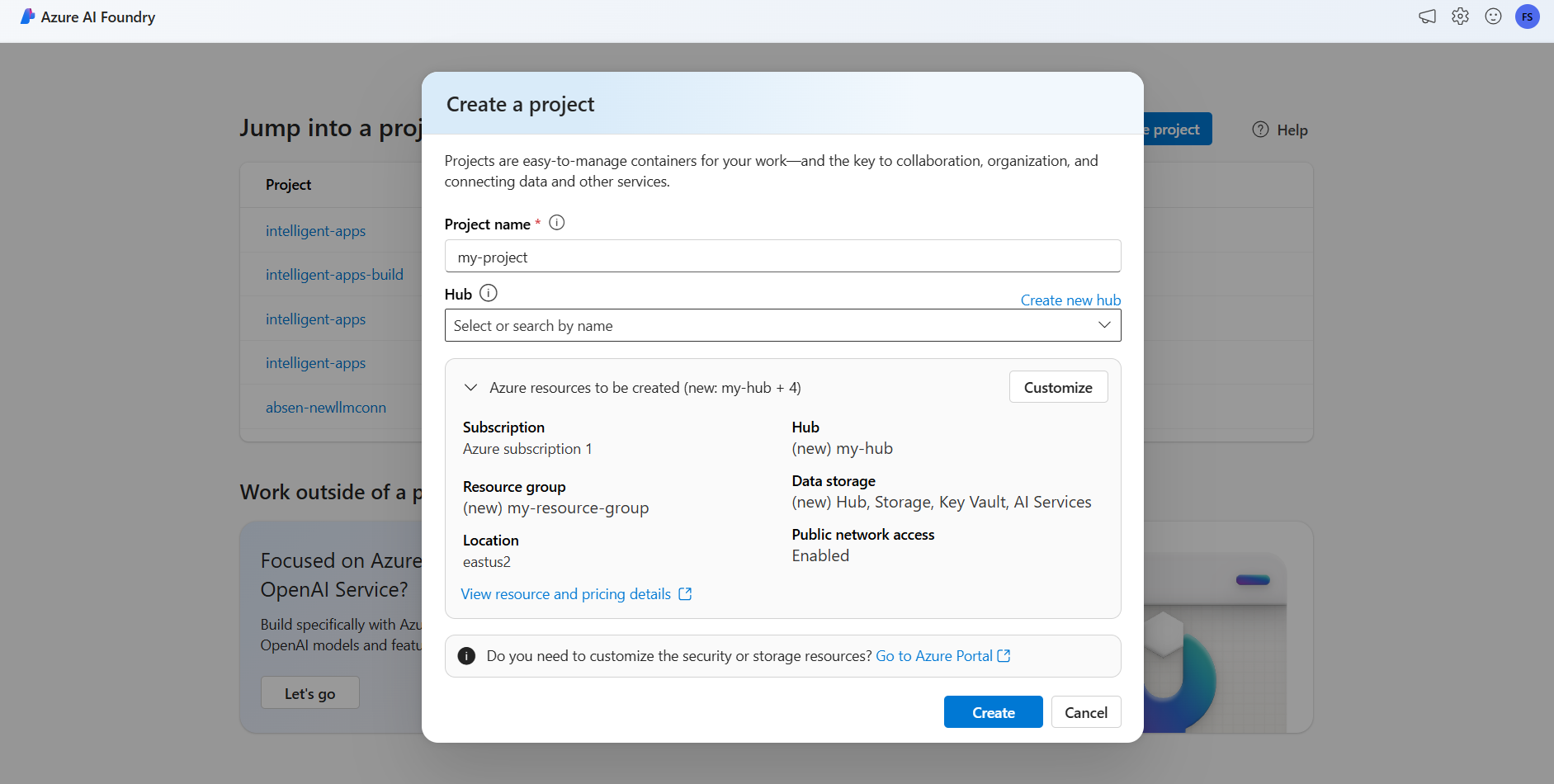

To create a project with an Azure AI Services account, follow these steps:

Go to Azure AI Foundry portal.

On the landing page, select Create project.

Give the project a name, for example "my-project".

This tutorial creates a brand-new project under a new AI hub, so select Create new hub.

Give the hub a name, for example "my-hub" and select Next.

The wizard updates with details about the resources that are going to be created. Select Azure resources to be created to see the details.

You can see that the following resources are created:

| Property | Description |
| --- | --- |
| Resource group | The main container for all the resources in Azure. It keeps resources that work together organized and provides a scope for the costs associated with the entire project. |
| Location | The region of the resources that you're creating. |
| Hub | The main container for AI projects in Azure AI Foundry. Hubs promote collaboration and allow you to store information for your projects. |
| AI Services | The resource enabling access to the flagship models in Azure AI model catalog. In this tutorial, a new account is created, but Azure AI Services resources can be shared across multiple hubs and projects. Hubs use a connection to the resource to have access to the model deployments available there. To learn how to create connections between projects and Azure AI Services to consume Azure AI model inference, read Connect your AI project. |

Select Create. The resource creation process starts.

Once completed, your project is ready to be configured.

Azure AI model inference is a preview feature that needs to be turned on in Azure AI Foundry. In the top navigation bar, near the right corner, select the Preview features icon. A contextual blade opens at the right of the screen.

Turn on the feature Deploy models to Azure AI model inference service.

Close the panel.

To use Azure AI model inference, you need to add model deployments to your Azure AI services account.

Next steps

You decide which models are available for inference in the inference endpoint and configure them. Once a model is configured, you can generate predictions from it by indicating its model name or deployment name in your requests. No further changes are required in your code to use it.

In this article, you'll learn how to add a new model to Azure AI model inference in Azure AI Foundry.

Prerequisites

To complete this article, you need:

- An Azure subscription. If you're using GitHub Models, you can upgrade your experience and create an Azure subscription in the process. Read Upgrade from GitHub Models to Azure AI model inference if that's your case.

- An Azure AI Services resource.

- The Azure CLI and the cognitiveservices extension for Azure AI Services:

      az extension add -n cognitiveservices

- The jq tool, which some of the commands in this tutorial use and which might not be installed on your system. For installation instructions, see Download jq.

- The following information:

  - Your Azure subscription ID.

  - Your Azure AI Services resource name.

  - The resource group where the Azure AI Services resource is deployed.
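Before running the commands in this article, it can help to confirm that the required tools are on your PATH. The following is a minimal sketch, not part of the original tutorial: `missing_tools` is a hypothetical helper name, and it relies only on the shell built-in `command -v`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each tool from the argument list that is not on PATH.
missing_tools() {
  local tool
  for tool in "$@"; do
    # `command -v` succeeds only when the shell can resolve the tool.
    if ! command -v "$tool" >/dev/null 2>&1; then
      printf '%s\n' "$tool"
    fi
  done
}

# Report which of the tools used in this article still need installing.
missing="$(missing_tools az jq)"
if [ -n "$missing" ]; then
  printf 'Missing tools:\n%s\n' "$missing" >&2
fi
```

The check only verifies that the executables can be found; it doesn't confirm that the cognitiveservices extension is installed.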

Add models

To add a model, you first need to identify the model that you want to deploy. You can query the available models as follows:

Log in to your Azure subscription:

    az login

If you have more than one subscription, select the subscription where your resource is located:

    az account set --subscription $subscriptionId

Set the following environment variables with the name of the Azure AI Services resource you plan to use and its resource group:

    accountName="<ai-services-resource-name>"
    resourceGroupName="<resource-group>"

If you haven't created an Azure AI Services account yet, you can create one as follows:

    az cognitiveservices account create -n $accountName -g $resourceGroupName --custom-domain $accountName

First, check which models are available to you and under which SKU. The following command lists all the available model definitions:

    az cognitiveservices account list-models \
        -n $accountName \
        -g $resourceGroupName \
    | jq '.[] | { name: .name, format: .format, version: .version, sku: .skus[0].name, capacity: .skus[0].capacity.default }'

The output looks as follows:

    {
      "name": "Phi-3.5-vision-instruct",
      "format": "Microsoft",
      "version": "2",
      "sku": "GlobalStandard",
      "capacity": 1
    }

Identify the model you want to deploy. You need the properties name, format, version, and sku. Capacity might also be needed depending on the type of deployment.

Tip

Not all models are available in all SKUs.
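Because not every model is offered under every SKU, you might want to list only the models available under a particular one. The sketch below is one possible alternative to the jq filter: it uses the Azure CLI's global `--query` (JMESPath) and `--output` arguments. It assumes each model definition exposes a `skus` array whose entries have a `name` field, as the jq filter above suggests. `list_models_for_sku` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the models in an Azure AI Services account that offer a given SKU.
# Arguments: <account-name> <resource-group> <sku-name>
list_models_for_sku() {
  local account="$1" group="$2" sku="$3"
  # JMESPath: keep models whose `skus` array has at least one entry named $sku.
  local query="[?skus[?name=='${sku}']].{name:name, format:format, version:version}"
  az cognitiveservices account list-models \
    -n "$account" \
    -g "$group" \
    --query "$query" \
    --output table
}

# Usage (requires `az login` first):
# list_models_for_sku "$accountName" "$resourceGroupName" GlobalStandard
```

Unlike the jq filter, which reports only the first SKU of each model, this query matches a model if any of its SKUs has the requested name.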

Add the model deployment to the resource. The following example adds Phi-3.5-vision-instruct:

    az cognitiveservices account deployment create \
        -n $accountName \
        -g $resourceGroupName \
        --deployment-name Phi-3.5-vision-instruct \
        --model-name Phi-3.5-vision-instruct \
        --model-version 2 \
        --model-format Microsoft \
        --sku-capacity 1 \
        --sku-name GlobalStandard

The model is ready to be consumed.

You can deploy the same model multiple times if needed as long as it's under a different deployment name. This capability might be useful in case you want to test different configurations for a given model, including content safety.
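To deploy the same model under several deployment names, for example to compare configurations side by side, the create command can be wrapped in a small function. This is a sketch under assumptions: `deploy_model` and `deploy_model_variants` are hypothetical helpers, and the deployment names `phi-vision-a` and `phi-vision-b` are purely illustrative. It uses only the flags shown in the Phi-3.5-vision-instruct example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create one deployment of Phi-3.5-vision-instruct under a given name.
# Arguments: <account-name> <resource-group> <deployment-name>
deploy_model() {
  local account="$1" group="$2" deployment="$3"
  az cognitiveservices account deployment create \
    -n "$account" \
    -g "$group" \
    --deployment-name "$deployment" \
    --model-name Phi-3.5-vision-instruct \
    --model-version 2 \
    --model-format Microsoft \
    --sku-capacity 1 \
    --sku-name GlobalStandard
}

# Hypothetical helper: deploy the same model once per deployment name,
# stopping at the first failure.
# Arguments: <account-name> <resource-group> <deployment-name>...
deploy_model_variants() {
  local account="$1" group="$2" name
  shift 2
  for name in "$@"; do
    deploy_model "$account" "$group" "$name" || return 1
  done
}

# Usage (requires `az login` first):
# deploy_model_variants "$accountName" "$resourceGroupName" phi-vision-a phi-vision-b
```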

Manage deployments

You can see all the deployments available using the CLI:

Run the following command to see all the active deployments:

    az cognitiveservices account deployment list -n $accountName -g $resourceGroupName

You can see the details of a given deployment:

    az cognitiveservices account deployment show \
        --deployment-name "Phi-3.5-vision-instruct" \
        -n $accountName \
        -g $resourceGroupName

You can delete a given deployment as follows:

    az cognitiveservices account deployment delete \
        --deployment-name "Phi-3.5-vision-instruct" \
        -n $accountName \
        -g $resourceGroupName
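When scripting these commands, it can be useful to check whether a deployment exists before creating or deleting it. The sketch below assumes that `az cognitiveservices account deployment show` exits with a nonzero status when the deployment isn't found, which is the usual Azure CLI behavior for missing resources. `deployment_exists` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit 0) if the named deployment exists, fail otherwise.
# Arguments: <account-name> <resource-group> <deployment-name>
deployment_exists() {
  local account="$1" group="$2" deployment="$3"
  # Output is discarded; only the exit status of `az` is used.
  az cognitiveservices account deployment show \
    --deployment-name "$deployment" \
    -n "$account" \
    -g "$group" >/dev/null 2>&1
}

# Usage (requires `az login` first):
# if deployment_exists "$accountName" "$resourceGroupName" "Phi-3.5-vision-instruct"; then
#   echo "Deployment already exists"
# fi
```

Because all output is discarded, a failure caused by an expired login or a wrong resource group looks the same as a missing deployment; treat a negative result as "not confirmed" rather than "absent".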

Use the model

Deployed models in Azure AI model inference can be consumed using the resource's Azure AI model inference endpoint. When constructing your request, set the model parameter to the name of the model deployment you created. You can programmatically get the URI of the inference endpoint using the following command:

Inference endpoint

    az cognitiveservices account show -n $accountName -g $resourceGroupName | jq '.properties.endpoints["Azure AI Model Inference API"]'

To make requests to the Azure AI model inference endpoint, append the route models, for example https://<resource>.services.ai.azure.com/models. You can see the API reference for the endpoint at Azure AI model inference API reference page.
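To illustrate how the route and the model parameter fit together, here is a minimal sketch that builds the URL and JSON body for a chat completions request. Several details are assumptions not stated in this article: the `/chat/completions` route under `/models`, the `api-version` value `2024-05-01-preview`, and the helper names `chat_url`, `json_escape`, and `chat_body`. Check the API reference for the values that apply to your resource.

```shell
#!/usr/bin/env bash
# Assumed API version; verify it against the API reference.
API_VERSION="2024-05-01-preview"

# Hypothetical helper: build the chat completions URL for a resource name.
chat_url() {
  local resource="$1"
  printf 'https://%s.services.ai.azure.com/models/chat/completions?api-version=%s' \
    "$resource" "$API_VERSION"
}

# Hypothetical helper: escape backslashes and double quotes for use inside a JSON string.
# Other control characters (such as newlines) are not handled in this sketch.
json_escape() {
  local s=$1
  s=${s//\\/\\\\}
  s=${s//\"/\\\"}
  printf '%s' "$s"
}

# Hypothetical helper: build a request body that targets a deployment by name.
# Arguments: <deployment-name> <user-prompt>
chat_body() {
  printf '{"model": "%s", "messages": [{"role": "user", "content": "%s"}]}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# Usage:
# chat_url my-resource
# chat_body Phi-3.5-vision-instruct "Say hello"
```

The `model` field carries the deployment name, so switching to another deployment changes only that value in the body.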

Inference keys

    az cognitiveservices account keys list -n $accountName -g $resourceGroupName
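The key can be read straight into a shell variable and passed to a request without echoing it to the terminal. The following is a sketch under assumptions: it presumes the key list output has a `key1` property, that the endpoint accepts the key in an `api-key` header, that the chat completions route and `api-version` shown are valid for your resource, and that the resource's custom subdomain equals the account name. `get_inference_key` and `send_chat` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first key of the Azure AI Services resource.
# Arguments: <account-name> <resource-group>
get_inference_key() {
  az cognitiveservices account keys list \
    -n "$1" \
    -g "$2" \
    --query key1 \
    --output tsv
}

# Hypothetical helper: POST a JSON body (read from stdin) to the chat completions route.
# Arguments: <resource-name> <api-key>
send_chat() {
  local resource="$1" key="$2"
  # Route and api-version are assumptions; verify them against the API reference.
  curl --silent --show-error --fail \
    --request POST \
    --header "Content-Type: application/json" \
    --header "api-key: ${key}" \
    --data @- \
    "https://${resource}.services.ai.azure.com/models/chat/completions?api-version=2024-05-01-preview"
}

# Usage (requires `az login` first):
# key="$(get_inference_key "$accountName" "$resourceGroupName")"
# printf '%s' '{"model": "Phi-3.5-vision-instruct", "messages": [{"role": "user", "content": "Say hello"}]}' \
#   | send_chat "$accountName" "$key"
```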

Prerequisites

To complete this article, you need:

- An Azure subscription. If you're using GitHub Models, you can upgrade your experience and create an Azure subscription in the process. Read Upgrade from GitHub Models to Azure AI model inference if that's your case.

- Install the Azure CLI.

- Identify the following information:

  - Your Azure subscription ID.

About this tutorial

The example in this article is based on code samples contained in the Azure-Samples/azureai-model-inference-bicep repository. To run the commands locally without having to copy or paste file content, clone the repository:

    git clone https://github.com/Azure-Samples/azureai-model-inference-bicep

The files for this example are in the infra folder:

    cd azureai-model-inference-bicep/infra

Create the resources

Follow these steps:

Use the template modules/ai-services-template.bicep to describe your Azure AI Services resource:

modules/ai-services-template.bicep

    @description('Location of the resource.')
    param location string = resourceGroup().location

    @description('Name of the Azure AI Services account.')
    param accountName string

    @description('The resource model definition representing SKU')
    param sku string = 'S0'

    @description('Whether or not to allow keys for this account.')
    param allowKeys bool = true

    @allowed([
      'Enabled'
      'Disabled'
    ])
    @description('Whether or not public endpoint access is allowed for this account.')
    param publicNetworkAccess string = 'Enabled'

    @allowed([
      'Allow'
      'Deny'
    ])
    @description('The default action for network ACLs.')
    param networkAclsDefaultAction string = 'Allow'

    resource account 'Microsoft.CognitiveServices/accounts@2023-05-01' = {
      name: accountName
      location: location
      identity: {
        type: 'SystemAssigned'
      }
      sku: {
        name: sku
      }
      kind: 'AIServices'
      properties: {
        customSubDomainName: accountName
        publicNetworkAccess: publicNetworkAccess
        networkAcls: {
          defaultAction: networkAclsDefaultAction
        }
        // Key-based (local) authentication is disabled when keys are not allowed.
        disableLocalAuth: !allowKeys
      }
    }

    output endpointUri string = 'https://${account.name}.services.ai.azure.com/models'
    output id string = account.id

Use the template modules/ai-services-deployment-template.bicep to describe model deployments:

modules/ai-services-deployment-template.bicep

    @description('Name of the Azure AI services account')
    param accountName string

    @description('Name of the model to deploy')
    param modelName string

    @description('Version of the model to deploy')
    param modelVersion string

    @allowed([
      'AI21 Labs'
      'Cohere'
      'Core42'
      'DeepSeek'
      'Meta'
      'Microsoft'
      'Mistral AI'
      'OpenAI'
    ])
    @description('Model provider')
    param modelPublisherFormat string

    @allowed([
      'GlobalStandard'
      'Standard'
      'GlobalProvisioned'
      'Provisioned'
    ])
    @description('Model deployment SKU name')
    param skuName string = 'GlobalStandard'

    @description('Content filter policy name')
    param contentFilterPolicyName string = 'Microsoft.DefaultV2'

    @description('Model deployment capacity')
    param capacity int = 1

    resource modelDeployment 'Microsoft.CognitiveServices/accounts/deployments@2024-04-01-preview' = {
      name: '${accountName}/${modelName}'
      sku: {
        name: skuName
        capacity: capacity
      }
      properties: {
        model: {
          format: modelPublisherFormat
          name: modelName
          version: modelVersion
        }
        raiPolicyName: contentFilterPolicyName == null ? 'Microsoft.Nill' : contentFilterPolicyName
      }
    }

For convenience, we define the models we want to have available in the service using a JSON file. The file infra/models.json contains a list of JSON objects with the keys name, version, provider, and sku, which define the models the deployment will provision. Since the models support pay-as-you-go, adding model deployments doesn't incur extra cost. Modify the file by removing or adding the model entries you want to have available. The following example shows only the first 7 lines of the JSON file:

models.json

    [
      {
        "name": "AI21-Jamba-1.5-Large",
        "version": "1",
        "provider": "AI21 Labs",
        "sku": "GlobalStandard"
      },

If you plan to use projects (recommended), you need the templates for creating a project, hub, and a connection to the Azure AI Services resource:

modules/project-hub-template.bicep

    param location string = resourceGroup().location

    @description('Name of the Azure AI hub')
    param hubName string = 'hub-dev'

    @description('Name of the Azure AI project')
    param projectName string = 'intelligent-apps'

    @description('Name of the storage account used for the workspace.')
    param storageAccountName string = replace(hubName, '-', '')
    param keyVaultName string = replace(hubName, 'hub', 'kv')
    param applicationInsightsName string = replace(hubName, 'hub', 'log')

    @description('The container registry resource id if you want to create a link to the workspace.')
    param containerRegistryName string = replace(hubName, '-', '')

    @description('The tags for the resources')
    param tagValues object = {
      owner: 'santiagxf'
      project: 'intelligent-apps'
      environment: 'dev'
    }

    var tenantId = subscription().tenantId
    var resourceGroupName = resourceGroup().name
    var storageAccountId = resourceId(resourceGroupName, 'Microsoft.Storage/storageAccounts', storageAccountName)
    var keyVaultId = resourceId(resourceGroupName, 'Microsoft.KeyVault/vaults', keyVaultName)
    var applicationInsightsId = resourceId(resourceGroupName, 'Microsoft.Insights/components', applicationInsightsName)
    var containerRegistryId = resourceId(
      resourceGroupName,
      'Microsoft.ContainerRegistry/registries',
      containerRegistryName
    )

    resource storageAccount 'Microsoft.Storage/storageAccounts@2019-04-01' = {
      name: storageAccountName
      location: location
      sku: {
        name: 'Standard_LRS'
      }
      kind: 'StorageV2'
      properties: {
        encryption: {
          services: {
            blob: {
              enabled: true
            }
            file: {
              enabled: true
            }
          }
          keySource: 'Microsoft.Storage'
        }
        supportsHttpsTrafficOnly: true
      }
      tags: tagValues
    }

    resource keyVault 'Microsoft.KeyVault/vaults@2019-09-01' = {
      name: keyVaultName
      location: location
      properties: {
        tenantId: tenantId
        sku: {
          name: 'standard'
          family: 'A'
        }
        enableRbacAuthorization: true
        accessPolicies: []
      }
      tags: tagValues
    }

    resource applicationInsights 'Microsoft.Insights/components@2018-05-01-preview' = {
      name: applicationInsightsName
      location: location
      kind: 'web'
      properties: {
        Application_Type: 'web'
      }
      tags: tagValues
    }

    resource containerRegistry 'Microsoft.ContainerRegistry/registries@2019-05-01' = {
      name: containerRegistryName
      location: location
      sku: {
        name: 'Standard'
      }
      properties: {
        adminUserEnabled: true
      }
      tags: tagValues
    }

    resource hub 'Microsoft.MachineLearningServices/workspaces@2024-07-01-preview' = {
      name: hubName
      kind: 'Hub'
      location: location
      identity: {
        type: 'systemAssigned'
      }
      sku: {
        tier: 'Standard'
        name: 'standard'
      }
      properties: {
        description: 'Azure AI hub'
        friendlyName: hubName
        storageAccount: storageAccountId
        keyVault: keyVaultId
        applicationInsights: applicationInsightsId
        containerRegistry: (empty(containerRegistryName) ? null : containerRegistryId)
        encryption: {
          status: 'Disabled'
          keyVaultProperties: {
            keyVaultArmId: keyVaultId
            keyIdentifier: ''
          }
        }
        hbiWorkspace: false
      }
      tags: tagValues
    }

    resource project 'Microsoft.MachineLearningServices/workspaces@2024-07-01-preview' = {
      name: projectName
      kind: 'Project'
      location: location
      identity: {
        type: 'systemAssigned'
      }
      sku: {
        tier: 'Standard'
        name: 'standard'
      }
      properties: {
        description: 'Azure AI project'
        friendlyName: projectName
        hbiWorkspace: false
        hubResourceId: hub.id
      }
      tags: tagValues
    }

modules/ai-services-connection-template.bicep

    @description('Name of the hub where the connection will be created')
    param hubName string

    @description('Name of the connection')
    param name string

    @description('Category of the connection')
    param category string = 'AIServices'

    @allowed(['AAD', 'ApiKey', 'ManagedIdentity', 'None'])
    param authType string = 'AAD'

    @description('The endpoint URI of the connected service')
    param endpointUri string

    @description('The resource ID of the connected service')
    param resourceId string = ''

    @secure()
    param key string = ''

    resource connection 'Microsoft.MachineLearningServices/workspaces/connections@2024-04-01-preview' = {
      name: '${hubName}/${name}'
      properties: {
        category: category
        target: endpointUri
        authType: authType
        isSharedToAll: true
        credentials: authType == 'ApiKey' ? {
          key: key
        } : null
        metadata: {
          ApiType: 'Azure'
          ResourceId: resourceId
        }
      }
    }

Define the main deployment:

deploy-with-project.bicep

    @description('Location to create the resources in')
    param location string = resourceGroup().location

    @description('Name of the resource group to create the resources in')
    param resourceGroupName string = resourceGroup().name

    @description('Name of the AI Services account to create')
    param accountName string = 'azurei-models-dev'

    @description('Name of the project hub to create')
    param hubName string = 'hub-azurei-dev'

    @description('Name of the project to create in the project hub')
    param projectName string = 'intelligent-apps'

    @description('Path to a JSON file with the list of models to deploy. Each model is a JSON object with the following properties: name, version, provider')
    var models = json(loadTextContent('models.json'))

    module aiServicesAccount 'modules/ai-services-template.bicep' = {
      name: 'aiServicesAccount'
      scope: resourceGroup(resourceGroupName)
      params: {
        accountName: accountName
        location: location
      }
    }

    module projectHub 'modules/project-hub-template.bicep' = {
      name: 'projectHub'
      scope: resourceGroup(resourceGroupName)
      params: {
        hubName: hubName
        projectName: projectName
      }
    }

    module aiServicesConnection 'modules/ai-services-connection-template.bicep' = {
      name: 'aiServicesConnection'
      scope: resourceGroup(resourceGroupName)
      params: {
        name: accountName
        authType: 'AAD'
        endpointUri: aiServicesAccount.outputs.endpointUri
        resourceId: aiServicesAccount.outputs.id
        hubName: hubName
      }
      dependsOn: [
        projectHub
      ]
    }

    @batchSize(1)
    module modelDeployments 'modules/ai-services-deployment-template.bicep' = [
      for (item, i) in models: {
        name: 'deployment-${item.name}'
        scope: resourceGroup(resourceGroupName)
        params: {
          accountName: accountName
          modelName: item.name
          modelVersion: item.version
          modelPublisherFormat: item.provider
          skuName: item.sku
        }
        dependsOn: [
          aiServicesAccount
        ]
      }
    ]

    output endpoint string = aiServicesAccount.outputs.endpointUri

Log in to Azure:

    az login

Ensure you are in the right subscription:

    az account set --subscription "<subscription-id>"

Run the deployment:

    RESOURCE_GROUP="<resource-group-name>"

    az deployment group create \
        --resource-group $RESOURCE_GROUP \
        --template-file deploy-with-project.bicep

If you want to deploy only the Azure AI Services resource and the model deployments, use the following deployment file:

deploy.bicep

    @description('Location to create the resources in')
    param location string = resourceGroup().location

    @description('Name of the resource group to create the resources in')
    param resourceGroupName string = resourceGroup().name

    @description('Name of the AI Services account to create')
    param accountName string = 'azurei-models-dev'

    @description('Path to a JSON file with the list of models to deploy. Each model is a JSON object with the following properties: name, version, provider')
    var models = json(loadTextContent('models.json'))

    module aiServicesAccount 'modules/ai-services-template.bicep' = {
      name: 'aiServicesAccount'
      scope: resourceGroup(resourceGroupName)
      params: {
        accountName: accountName
        location: location
      }
    }

    @batchSize(1)
    module modelDeployments 'modules/ai-services-deployment-template.bicep' = [
      for (item, i) in models: {
        name: 'deployment-${item.name}'
        scope: resourceGroup(resourceGroupName)
        params: {
          accountName: accountName
          modelName: item.name
          modelVersion: item.version
          modelPublisherFormat: item.provider
          skuName: item.sku
        }
        dependsOn: [
          aiServicesAccount
        ]
      }
    ]

    output endpoint string = aiServicesAccount.outputs.endpointUri

Run the deployment:

    RESOURCE_GROUP="<resource-group-name>"

    az deployment group create \
        --resource-group $RESOURCE_GROUP \
        --template-file deploy.bicep

The template outputs the Azure AI model inference endpoint that you can use to consume any of the model deployments you have created.
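Before creating resources, you may want to preview the changes, and afterwards read the endpoint back from the deployment outputs. The sketch below assumes the `az deployment group what-if` and `az deployment group show` commands and passes an explicit `--name` so that later commands can refer to the same deployment. The helper names and the deployment name `model-inference` are hypothetical and not part of the sample repository.

```shell
#!/usr/bin/env bash
# Hypothetical deployment name, passed explicitly so later commands can refer to it.
DEPLOYMENT_NAME="model-inference"

# Hypothetical helper: preview the changes a template would make, without applying them.
# Arguments: <resource-group> <template-file>
preview_deployment() {
  az deployment group what-if \
    --resource-group "$1" \
    --name "$DEPLOYMENT_NAME" \
    --template-file "$2"
}

# Hypothetical helper: apply the template under the explicit deployment name.
# Arguments: <resource-group> <template-file>
run_deployment() {
  az deployment group create \
    --resource-group "$1" \
    --name "$DEPLOYMENT_NAME" \
    --template-file "$2"
}

# Hypothetical helper: print the `endpoint` output of the finished deployment.
# Arguments: <resource-group>
deployment_endpoint() {
  az deployment group show \
    --resource-group "$1" \
    --name "$DEPLOYMENT_NAME" \
    --query properties.outputs.endpoint.value \
    --output tsv
}

# Usage (requires `az login` first):
# preview_deployment "$RESOURCE_GROUP" deploy.bicep
# run_deployment "$RESOURCE_GROUP" deploy.bicep
# deployment_endpoint "$RESOURCE_GROUP"
```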