How to get lineage from Airflow into Microsoft Purview (Preview)

Airflow is an open-source workflow automation and scheduling platform that can be used to author and manage data pipelines. Microsoft Purview supports collecting Airflow lineage by integrating with OpenLineage, an open framework for data lineage collection and analysis. To learn how Airflow works with OpenLineage, see the OpenLineage documentation.

Enabling OpenLineage in Airflow automatically tracks metadata and lineage about jobs and datasets as DAGs execute. The information is sent to an Azure Event Hubs instance that you configure. Microsoft Purview subscribes to the events, parses them, and ingests them into the data map.

Important

This feature is currently in preview. The Supplemental Terms of Use for Microsoft Azure Previews include additional legal terms that apply to Azure features that are in beta, in preview, or otherwise not yet released into general availability.

Supported capabilities

The supported Airflow versions are 1.10+ and 2.0-2.7.

Microsoft Purview supports metadata and lineage collection when the following types of data sources are used in Airflow:

- Amazon RDS for PostgreSQL

- Azure Database for PostgreSQL

- Google BigQuery

- PostgreSQL

- Snowflake

The following Airflow metadata is also captured:

- Airflow workspace

- Airflow DAG

- Airflow task

Lineage is collected into Microsoft Purview upon successful DAG runs in an event-based manner.

Known limitations

- Column-level lineage is currently not supported. The schema of the data assets is captured.

- If database views are referenced in the tasks, they're currently captured as table assets.

- All metadata is ingested into the Microsoft Purview root collection. Assets that already exist in the data map are preserved in their configured collection.

How to bring Airflow lineage into Microsoft Purview

As a prerequisite, you need a running Airflow instance.

To get lineage from Airflow into Microsoft Purview, you need to:

- Set up Azure Event Hubs

- Configure Event Hubs to publish messages to Microsoft Purview

- Configure your Airflow with OpenLineage

- Run Airflow jobs and view the assets/lineage

Set up Azure Event Hubs

Set up Azure Event Hubs as the receiver of the metadata and lineage tracked by OpenLineage in Airflow.

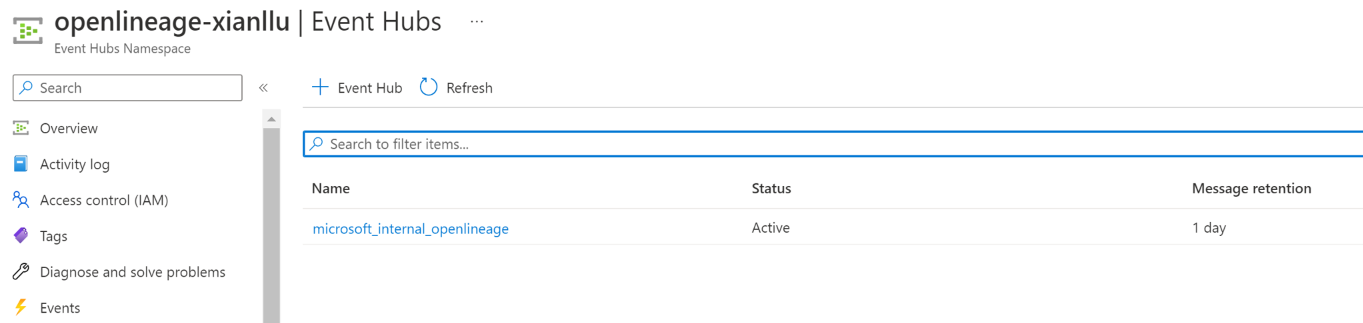

Create an event hub named “microsoft_internal_openlineage”.

Go to your “microsoft_internal_openlineage” event hub -> Access control (IAM) -> Add role assignment, and assign the “Azure Event Hubs Data Receiver” role to your Microsoft Purview account’s managed identity. For detailed steps, see Assign Azure roles using the Azure portal.

Configure Event Hubs to publish messages to Microsoft Purview

Microsoft Purview supports consuming events from, and pushing events to, your own Event Hubs. To configure Event Hubs for Microsoft Purview, see Configure Event Hubs with Microsoft Purview to send and receive Atlas Kafka topics messages.

In summary:

Go to your Microsoft Purview account -> Managed resources tab, and disable the managed Event Hubs namespace.

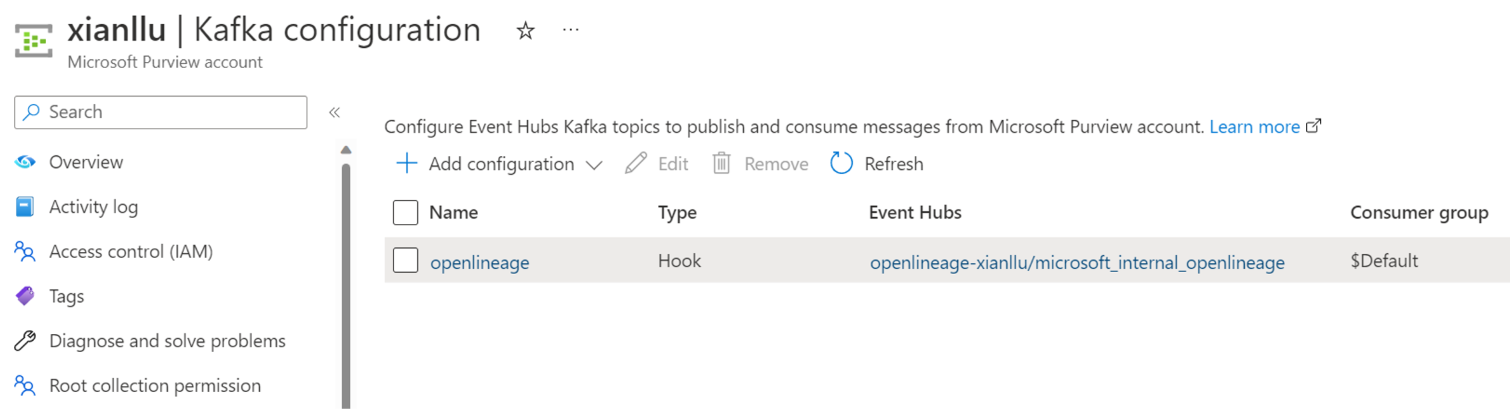

Go to the Kafka configuration tab -> + Add configuration -> Hook configuration, enter a name, and select the Event Hubs namespace and event hub you created in the previous step.

Configure your Airflow with OpenLineage

Installation:

To download and install the latest ‘openlineage-airflow’ library, update the ‘requirements.txt’ file of your running Airflow instance with:

openlineage-airflow

Note

The Airflow version and the openlineage-airflow version need to match. For example, when you use Airflow 2.7.1, you can use openlineage-airflow version 1.1.0 or 1.2.0. You can view matching versions on this website.
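Before pinning a version, it can help to confirm what is actually installed in the Airflow environment. The following is a minimal sketch that uses Python's standard `importlib.metadata` module. The helper name `installed_version` is hypothetical, and the sketch assumes the packages were installed under the PyPI distribution names `apache-airflow` and `openlineage-airflow`.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(distribution: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return version(distribution)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Distribution names are assumptions; adjust them if your environment differs.
    for name in ("apache-airflow", "openlineage-airflow"):
        print(f"{name}: {installed_version(name) or 'not installed'}")
```

Run the script with the same Python interpreter that runs Airflow, then compare the two reported versions against the compatibility information.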

Configuration:

Next, configure your Azure Event Hubs instance as the target to which OpenLineage sends the events.

Create an ‘openlineage.yml’ file under your Airflow root path. The content of the file is as below:

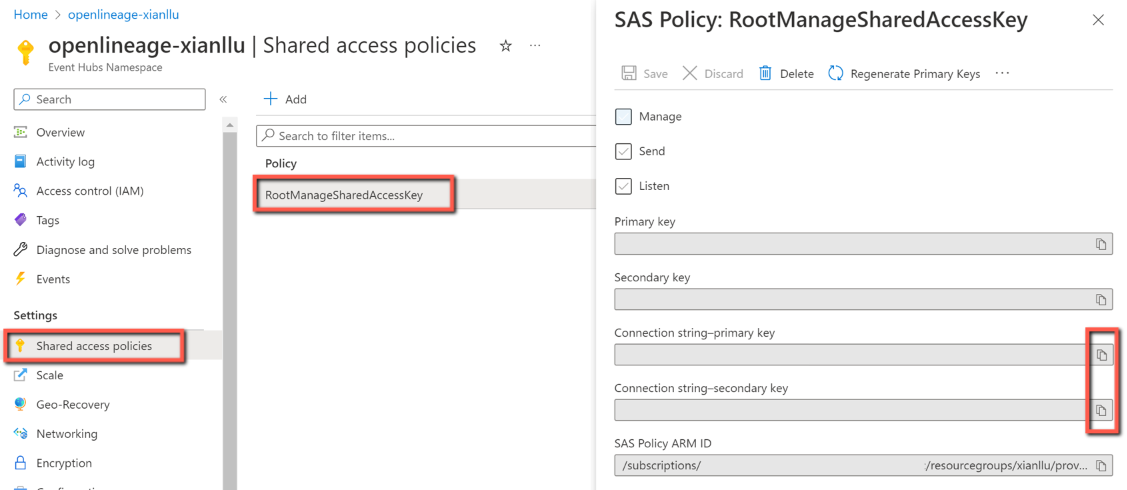

    transport:
      type: "kafka"
      config:
        bootstrap.servers: "{EVENTHUB_SERVER}:9093"
        security.protocol: "SASL_SSL"
        sasl.mechanism: "PLAIN"
        sasl.username: "$ConnectionString"
        sasl.password: "{PASSWORD}"
        client.id: "airflow-client"
      topic: "microsoft_internal_openlineage"
      flush: True

Replace the two placeholders, {EVENTHUB_SERVER} and {PASSWORD}, with your own values.
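If you prefer to generate the file rather than edit it by hand, the configuration above can be rendered with a small script. This is a sketch under stated assumptions, not an official tool: the function name `render_openlineage_yaml` is hypothetical; it assumes {EVENTHUB_SERVER} is the host name of your Event Hubs namespace and {PASSWORD} is an Event Hubs connection string (the usual pairing with the `$ConnectionString` user name); and it assumes `topic` and `flush` sit at the transport level next to `config`, as in the OpenLineage Kafka transport.

```python
import json


def render_openlineage_yaml(eventhub_server: str, connection_string: str) -> str:
    """Render the contents of openlineage.yml for the Kafka transport.

    eventhub_server: host name of the Event Hubs namespace (assumption).
    connection_string: Event Hubs connection string, used as the SASL
        password (assumption).
    """
    # json.dumps yields a double-quoted, escaped string, which is also a
    # valid YAML double-quoted scalar.
    quote = json.dumps
    lines = [
        "transport:",
        '  type: "kafka"',
        "  config:",
        f"    bootstrap.servers: {quote(eventhub_server + ':9093')}",
        '    security.protocol: "SASL_SSL"',
        '    sasl.mechanism: "PLAIN"',
        '    sasl.username: "$ConnectionString"',
        f"    sasl.password: {quote(connection_string)}",
        '    client.id: "airflow-client"',
        '  topic: "microsoft_internal_openlineage"',
        "  flush: True",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder arguments; substitute your own values before writing the file.
    print(render_openlineage_yaml("<namespace-host-name>", "<connection-string>"))
```

Because the output contains a secret, write it only to a location with restricted permissions and keep it out of source control.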

Restart your Airflow server.

Run Airflow jobs and view the assets/lineage

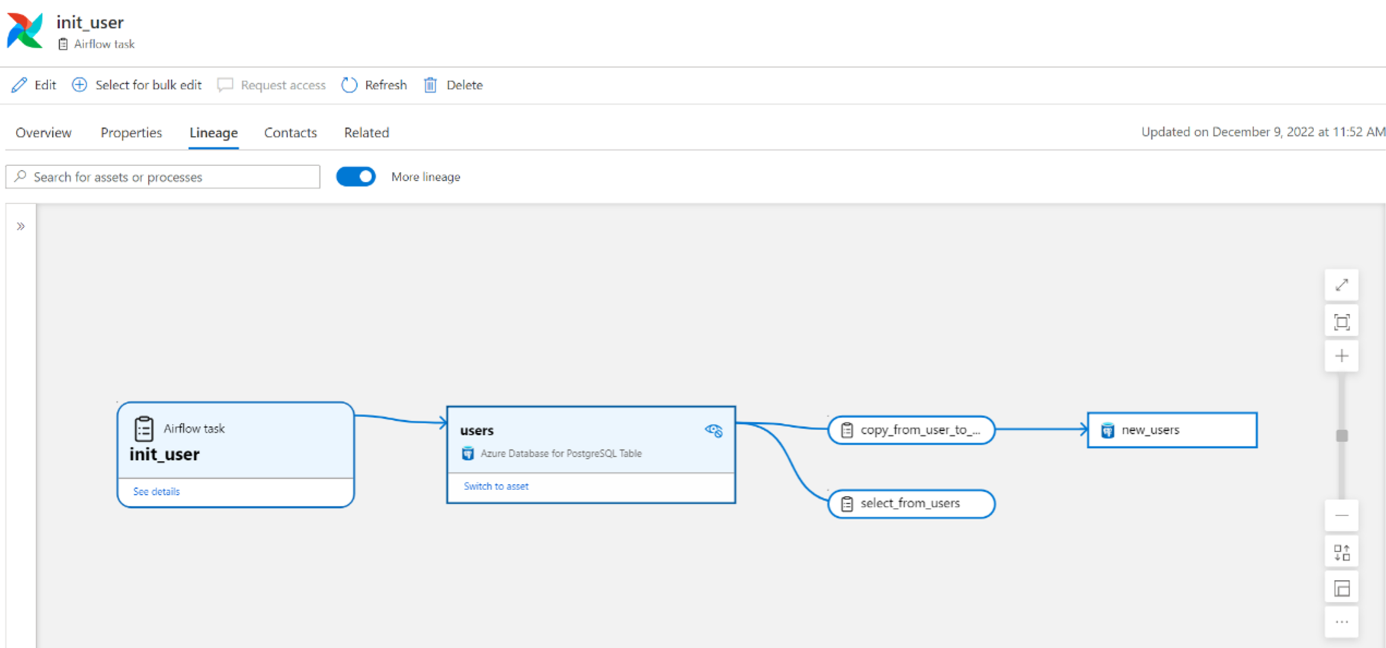

You can now run your Airflow jobs, then go to the Microsoft Purview governance portal to browse, search, and view assets. The assets should appear shortly after a successful DAG run.

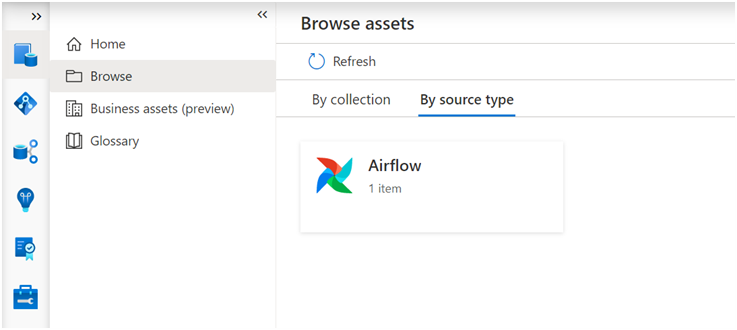

Browse Airflow assets:

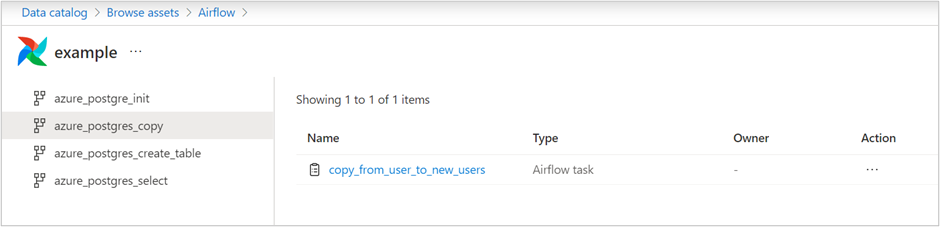

View Airflow task asset details with lineage:

Troubleshooting tips

If you run the Airflow job but don’t see the corresponding assets or lineage in Microsoft Purview:

- Check if your Airflow use case is supported by Microsoft Purview. Refer to the supported capabilities section.

- Go to your Event Hubs instance and check whether there are any incoming requests and messages. If there are none, double-check your OpenLineage configuration in Airflow.
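One configuration mistake that is easy to check for is a template placeholder left unreplaced in ‘openlineage.yml’. The sketch below scans the file text for tokens of the form `{UPPER_CASE}`. The helper name `unreplaced_placeholders` and the default file path are hypothetical, and the check covers only this one class of error.

```python
import re
import sys
from typing import List

# Matches template placeholders such as {EVENTHUB_SERVER} or {PASSWORD}.
PLACEHOLDER = re.compile(r"\{[A-Z][A-Z0-9_]*\}")


def unreplaced_placeholders(config_text: str) -> List[str]:
    """Return placeholders still present in the text, in order, without duplicates."""
    seen: List[str] = []
    for match in PLACEHOLDER.findall(config_text):
        if match not in seen:
            seen.append(match)
    return seen


if __name__ == "__main__":
    # Default path is an assumption; pass the real path as the first argument.
    path = sys.argv[1] if len(sys.argv) > 1 else "openlineage.yml"
    with open(path, encoding="utf-8") as handle:
        leftovers = unreplaced_placeholders(handle.read())
    print("Unreplaced placeholders:", leftovers or "none")
```

An empty result does not prove the configuration is correct; it only rules out forgotten placeholders.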