Easier Azure Data Lake Store management: alerts for folders and files.

Companies regularly rely on the massive scale and capabilities of Azure Data Lake Store for big data storage. As the number of files, file types, and folders grows, data becomes harder to manage and staying compliant becomes a greater challenge. Regulations such as GDPR (General Data Protection Regulation) have heightened requirements for control and supervision of files that contain sensitive data.

In this blog post, I'll show you how to set up alerts in your Azure Data Lake Store to make managing your data easier. We will create a Log Analytics query and an alert that monitors a specific path and file type and sends a notification whenever the path or file is created, accessed, modified, or deleted.

Step 1: Connect Azure Data Lake and Log Analytics

Data Lake accounts can be configured to generate diagnostics logs, some of which are automatically generated (e.g. regular Data Lake operations such as reporting current usage, or whenever a job completes). Others are generated based on requests (e.g. when a new file is created, opened, or when a job is submitted). Both Data Lake Analytics and Data Lake Store can be configured to send these diagnostics logs to a Log Analytics account where we can query the logs and create alerts based on the query results.

To send diagnostics logs to a Log Analytics account, follow the steps outlined in the blog post Struggling to get insights for your Azure Data Lake Store? Azure Log Analytics can help!

Step 2: Create a query that identifies when a path or file type is used

If you followed our previous blog on how to control Azure Data Lake costs using Log Analytics to create service alerts, you will remember that a Log Analytics query can be used to identify when certain operations take place in the Azure Data Lake Store account. For this specific case, a couple of different fields can be queried, such as Path_s. The example queries that follow show how:

Query File Paths, Names and Types

AzureDiagnostics

| where ( ResourceProvider == "MICROSOFT.DATALAKESTORE" )

| where ( Path_s contains "/webhdfs/v1/##YOUR PATH##")

This query will return every single action/diagnostic log that takes place in a specified path. It can be used to:

Query for a specific path

| where ( Path_s contains "/webhdfs/v1/MY/SAMPLE/PATH/")

Query for a file name and/or type

| where ( Path_s endswith "MYFILE.EXT")

Query for both file path and file name/type

| where ( Path_s contains "/webhdfs/v1/MY/SAMPLE/PATH/") and ( Path_s endswith "MYFILE.EXT")

or

| where ( Path_s contains "/webhdfs/v1/MY/SAMPLE/PATH/") | where ( Path_s endswith "MYFILE.EXT")
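The fragments above can be put together into one complete query. The following is a minimal sketch, not a definitive query: the 24-hour look-back window, the ".EXT" extension, and the choice of projected columns are assumptions made for illustration.

```kusto
AzureDiagnostics
| where ResourceProvider == "MICROSOFT.DATALAKESTORE"
// Assumed look-back window; adjust it to your own needs.
| where TimeGenerated > ago(24h)
// Match a path and a file extension in a single filter.
| where Path_s contains "/webhdfs/v1/MY/SAMPLE/PATH/" and Path_s endswith ".EXT"
// Keep only the columns needed to see what happened, where, and when.
| project TimeGenerated, Resource, OperationName, Path_s
| order by TimeGenerated desc
```

Limiting the time range first usually keeps the query fast, and projecting a few columns makes the results easier to scan.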

Query Folder/File Creation or Deletion

We can also be more specific on the type of operations, such as:

A new file gets created in a path:

AzureDiagnostics | where ( ResourceProvider == "MICROSOFT.DATALAKESTORE" ) | where ( OperationName == "create" ) | where ( Path_s contains "/webhdfs/v1/##YOUR PATH##")

A new folder is created in a path:

AzureDiagnostics | where ( ResourceProvider == "MICROSOFT.DATALAKESTORE" ) | where ( OperationName == "mkdirs" ) | where ( Path_s contains "/webhdfs/v1/##YOUR PATH##")

A folder/path is deleted in a path:

AzureDiagnostics | where ( ResourceProvider == "MICROSOFT.DATALAKESTORE" ) | where ( OperationName == "delete" ) | where ( Path_s contains "/webhdfs/v1/##YOUR PATH##")
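If you want to watch all three operations at once rather than run three separate queries, they can be combined. This is a sketch under the assumption that a per-operation count is what you are after; the column name EventCount is made up for this example.

```kusto
AzureDiagnostics
| where ResourceProvider == "MICROSOFT.DATALAKESTORE"
// "in" matches any of the listed operation names (case-sensitive).
| where OperationName in ("create", "mkdirs", "delete")
| where Path_s contains "/webhdfs/v1/##YOUR PATH##"
// EventCount is a hypothetical column name chosen for this sketch.
| summarize EventCount = count() by OperationName
```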

Query on specific account

If you have multiple Azure Data Lake Store accounts that write logs to a single Log Analytics account, you can also specify which account to search in the query:

| where ( Resource == "myadlsacct" )

Using Other Query Operations

Some other operations (compliant with WebHDFS) that may be useful for creating a query that targets specific actions are:

For ADLS-specific operations:

- setexpiry - when the file expiration is set.

- modifyaclentries - when the ACL for a file/folder is modified.
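As an illustration of the two ADLS-specific operations listed above, the following sketch returns expiry and ACL changes under a path. The selection of projected columns is an assumption; keep whichever fields are useful to you.

```kusto
AzureDiagnostics
| where ResourceProvider == "MICROSOFT.DATALAKESTORE"
// Expiry and ACL changes are often the events worth auditing for compliance.
| where OperationName == "setexpiry" or OperationName == "modifyaclentries"
| where Path_s contains "/webhdfs/v1/##YOUR PATH##"
| project TimeGenerated, Resource, OperationName, Path_s
```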

Here is an example of a query combining both account and operation:

AzureDiagnostics

| where ( ResourceProvider == "MICROSOFT.DATALAKESTORE" )

| where ( Resource == "myadlsacct" )

| where ( OperationName == "create" )

| where ( Path_s contains "/webhdfs/v1/##YOUR PATH##")
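When the same query is reused for several accounts or paths, it may be convenient to pull the values into let statements. This is only a sketch: the variable names adlsAccount and watchedPath are hypothetical, and the case-insensitive comparison is a precaution in case the account name is logged in a different case than you typed it.

```kusto
// Hypothetical variable names; replace the values with your own.
let adlsAccount = "myadlsacct";
let watchedPath = "/webhdfs/v1/##YOUR PATH##";
AzureDiagnostics
| where ResourceProvider == "MICROSOFT.DATALAKESTORE"
// =~ compares strings case-insensitively.
| where Resource =~ adlsAccount
| where OperationName == "create"
| where Path_s contains watchedPath
```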

Step 3: Create an alert when an event is detected or a threshold is reached.

Using a query such as those shown in the previous step, Log Analytics can be used to set an alert that will notify users via e-mail, text message, or webhook when the event is captured or metric threshold is reached. Check out this blog post for creating a new alert: Simple Trick to Stay on top of your Azure Data Lake: Create Alerts using Log Analytics.

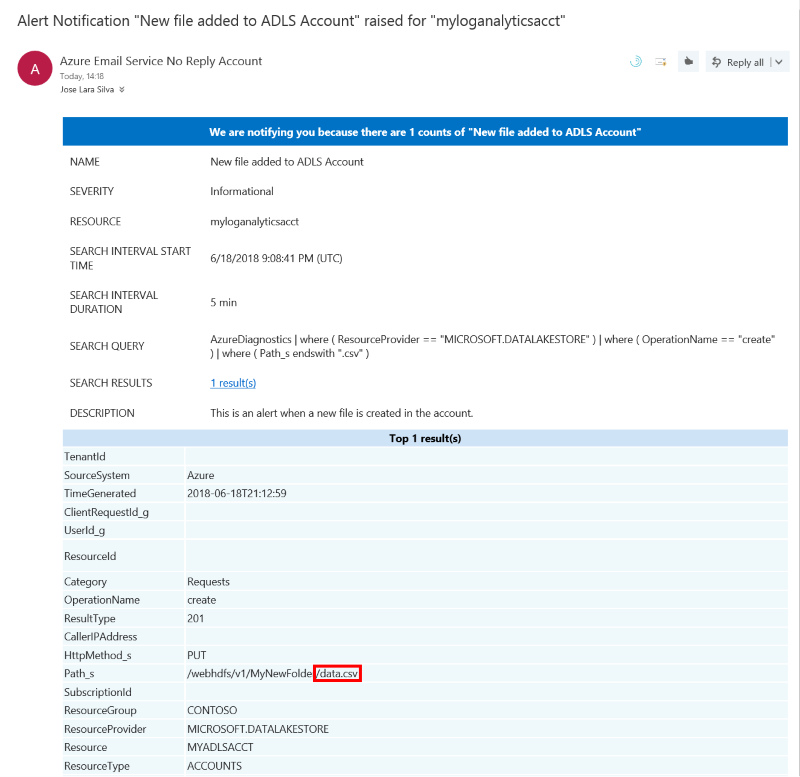

An email alert for a new CSV file in an Azure Data Lake Store account can look as follows:

Alert for a new file of type CSV created in an ADLS account

As with any other alert, the name and delivery method (email, SMS, or webhook) can be set to fit your needs.
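A query behind an alert for new CSV files could look like the following sketch. It assumes that the files of interest end in ".csv" and that the alert rule is configured to fire when the query returns more than zero results over the alert's time window; both are assumptions for illustration, not the exact setup used for the screenshot.

```kusto
AzureDiagnostics
| where ResourceProvider == "MICROSOFT.DATALAKESTORE"
| where OperationName == "create"
// endswith is case-insensitive, so ".CSV" files match as well.
| where Path_s endswith ".csv"
| project TimeGenerated, Resource, Path_s
```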

The bottom line for a best practice...

Setting up alerts for your Azure Data Lake Store to monitor when files or directories are created, accessed, modified, or deleted will help you manage your account and data efficiently. With the right alerts in place, you will know when certain files or folders are created in a specific path.

Go ahead and create alerts for your accounts and let us know how you're using them. Have any tips for other Data Lake users? Leave a comment below. Have more ideas for alerts? Reach out to us on https://aka.ms/adlfeedback.