Configure a connection to use Azure AI model inference in your AI project

Important

Items marked (preview) in this article are currently in public preview. This preview is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

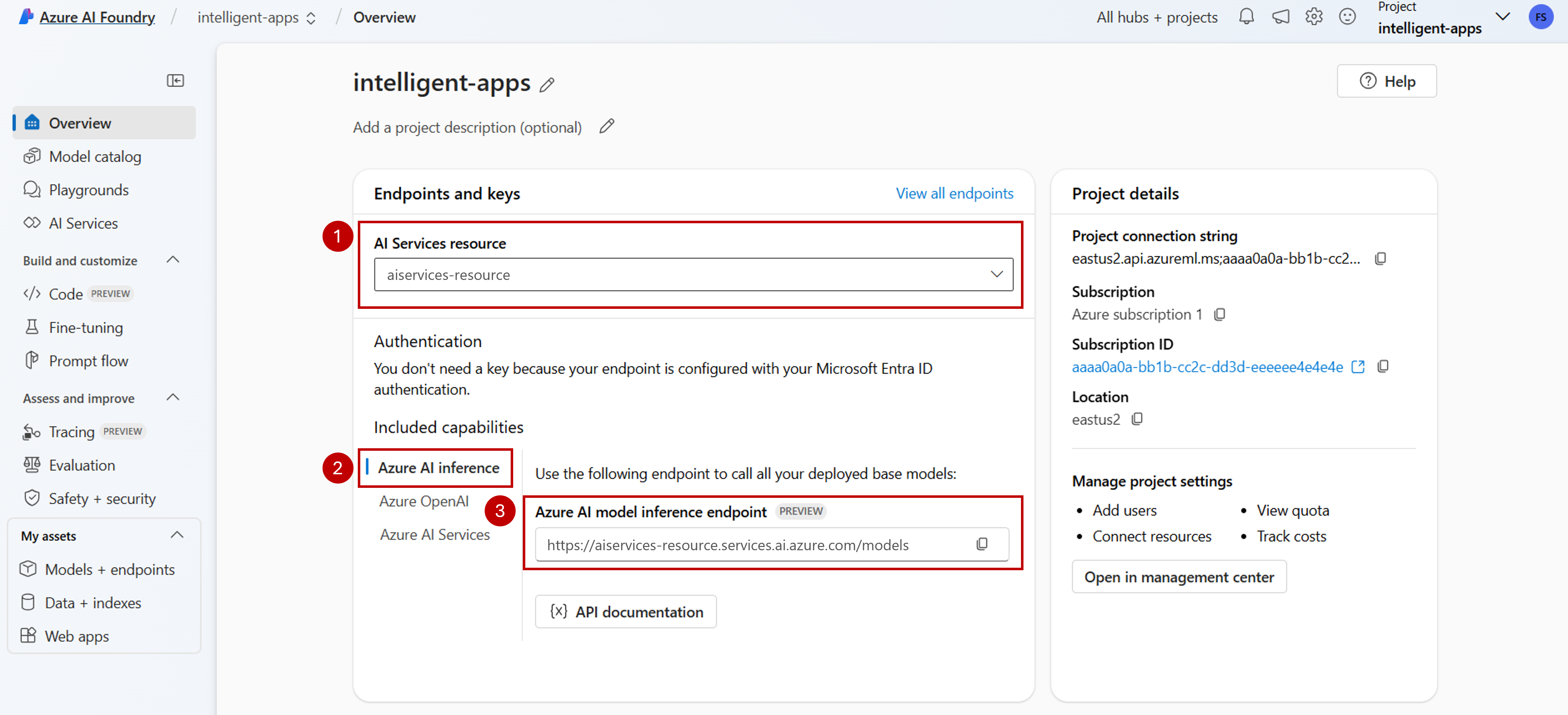

You can use Azure AI model inference in your projects in Azure AI Foundry to create rich applications and to interact with and manage the available models. To use the Azure AI model inference service in your project, you need to create a connection to the Azure AI Services resource.

This article explains how to create a connection to the Azure AI Services resource to use the inference endpoint.

Prerequisites

To complete this article, you need:

An Azure subscription. If you're using GitHub Models, you can upgrade your experience and create an Azure subscription in the process. Read Upgrade from GitHub Models to Azure AI model inference if that's your case.

An Azure AI services resource. See Create an Azure AI Services resource for more details.

Add a connection

You can create a connection to an Azure AI services resource using the following steps:

Go to Azure AI Foundry Portal.

In the lower left corner of the screen, select Management center.

In the Connections section, select New connection.

Select Azure AI services.

In the browser, look for an existing Azure AI Services resource in your subscription.

Select Add connection.

The new connection is added to your Hub.

Return to the project's landing page to continue, and select the newly created connection. Refresh the page if it doesn't show up immediately.

See model deployments in the connected resource

You can see the model deployments available in the connected resource by following these steps:

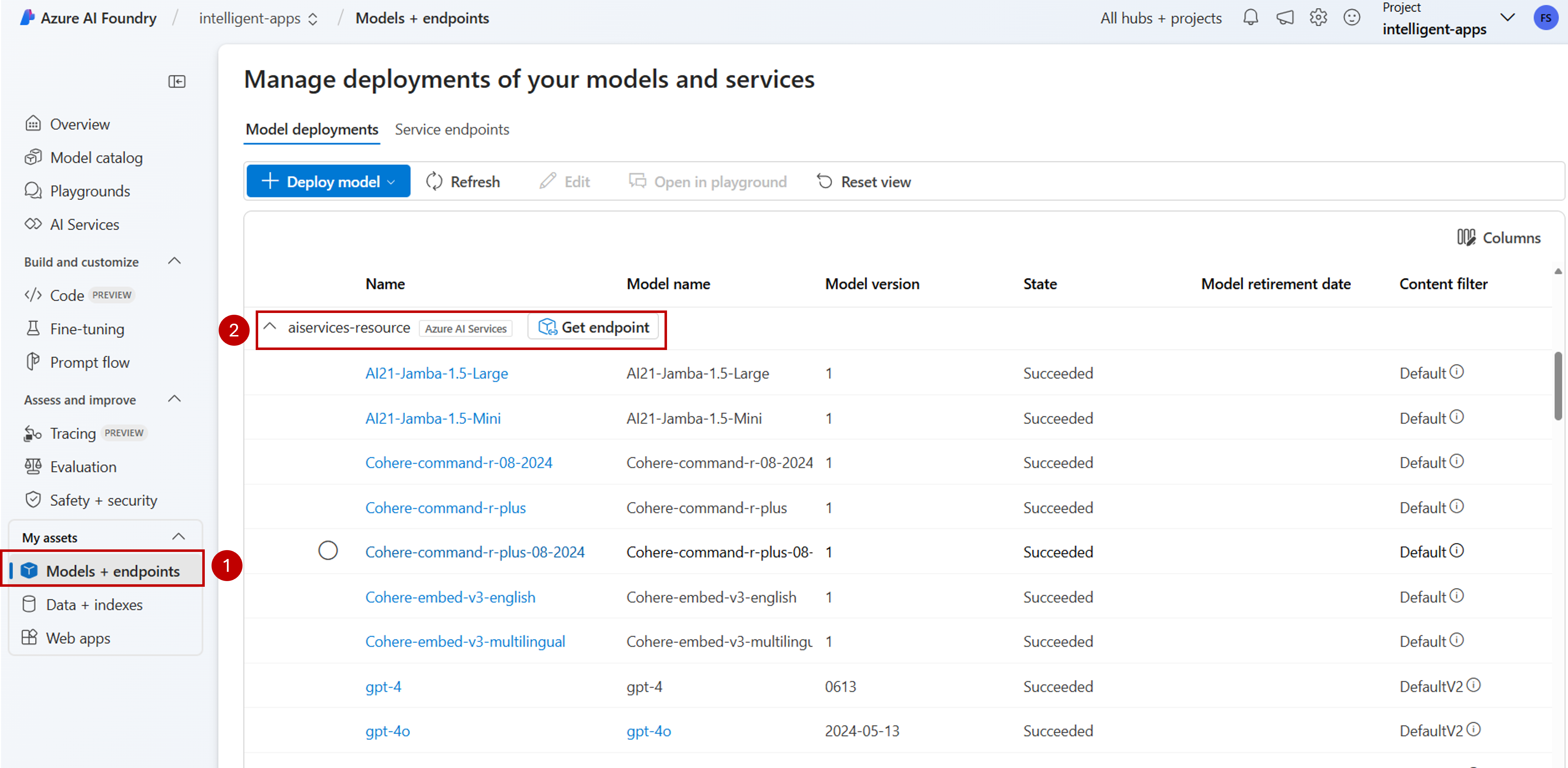

Go to Azure AI Foundry Portal.

On the left navigation bar, select Models + endpoints.

The page displays the model deployments available to you, grouped by connection name. Locate the connection you just created, which should be of type Azure AI Services.

Select any model deployment you want to inspect.

The details page shows information about the specific deployment. If you want to test the model, you can use the option Open in playground.

The Azure AI Foundry playground is displayed, where you can interact with the given model.

Prerequisites

To complete the Azure CLI steps in this article, you need:

An Azure subscription. If you're using GitHub Models, you can upgrade your experience and create an Azure subscription in the process. Read Upgrade from GitHub Models to Azure AI model inference if that's your case.

An Azure AI services resource. See Create an Azure AI Services resource for more details.

Install the Azure CLI and the ml extension for Azure AI Foundry:

    az extension add -n ml

Identify the following information:

Your Azure subscription ID.

Your Azure AI Services resource name.

The resource group where the Azure AI Services resource is deployed.

Add a connection

To add the connection with the Azure CLI, follow these steps:

Log in to your Azure subscription:

    az login

Configure the CLI to point to the project:

    az account set --subscription <subscription>
    az configure --defaults workspace=<project-name> group=<resource-group> location=<location>

Create a connection definition:

connection.yml

    name: <connection-name>
    type: aiservices
    endpoint: https://<ai-services-resourcename>.services.ai.azure.com
    api_key: <resource-api-key>

Create the connection:

    az ml connection create -f connection.yml

At this point, the connection is available for consumption.
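The connection definition used in the steps above can also be rendered from shell arguments, so the API key does not have to be typed into the file by hand. The following is a minimal sketch under stated assumptions: `write_connection_yaml` is a hypothetical helper (not an Azure CLI command), `AI_SERVICES_KEY` is a hypothetical environment variable, and the fields simply mirror the connection.yml shown in this article.

```shell
# Hypothetical helper: print a connection definition in the same shape
# as the connection.yml from this article.
write_connection_yaml() {
  # $1: connection name
  # $2: Azure AI Services resource name
  # $3: resource API key
  printf 'name: %s\n' "$1"
  printf 'type: aiservices\n'
  printf 'endpoint: https://%s.services.ai.azure.com\n' "$2"
  printf 'api_key: %s\n' "$3"
}

# Example usage (not executed here; AI_SERVICES_KEY is an assumed variable):
#   write_connection_yaml my-connection my-resource "$AI_SERVICES_KEY" > connection.yml
#   az ml connection create -f connection.yml
```

Because the generated file contains a secret, it is probably best kept out of source control and deleted once the connection exists.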

Prerequisites

To complete the Bicep steps in this article, you need:

An Azure subscription. If you're using GitHub Models, you can upgrade your experience and create an Azure subscription in the process. Read Upgrade from GitHub Models to Azure AI model inference if that's your case.

An Azure AI services resource. See Create an Azure AI Services resource for more details.

An Azure AI project with an AI Hub.

Install the Azure CLI.

Identify the following information:

Your Azure subscription ID.

Your Azure AI Services resource name.

Your Azure AI Services resource ID.

The name of the Azure AI Hub where the project is deployed.

The resource group where the Azure AI Services resource is deployed.
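Two of the values in the list above can be composed from the others. The sketch below assumes the Azure AI Services resource is of type `Microsoft.CognitiveServices/accounts` and uses the endpoint format shown in this article; both function names are hypothetical helpers. If in doubt, the resource's Properties page in the Azure portal, or `az cognitiveservices account show`, reports the actual resource ID.

```shell
# Hypothetical helper: compose the resource ID of an Azure AI Services resource.
# Assumes the resource type is Microsoft.CognitiveServices/accounts.
ai_services_resource_id() {
  # $1: subscription ID, $2: resource group, $3: resource name
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.CognitiveServices/accounts/%s\n' \
    "$1" "$2" "$3"
}

# Hypothetical helper: compose the inference endpoint URI in the format
# used by this article.
ai_services_endpoint() {
  # $1: resource name
  printf 'https://%s.services.ai.azure.com\n' "$1"
}

# Example usage:
#   RESOURCE_ID="$(ai_services_resource_id "<subscription-id>" "<resource-group-name>" "<resource-name>")"
#   ENDPOINT_URI="$(ai_services_endpoint "<resource-name>")"
```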

Add a connection

Use the template ai-services-connection-template.bicep to describe the connection:

ai-services-connection-template.bicep

    @description('Name of the hub where the connection will be created')
    param hubName string

    @description('Name of the connection')
    param name string

    @description('Category of the connection')
    param category string = 'AIServices'

    @allowed(['AAD', 'ApiKey', 'ManagedIdentity', 'None'])
    param authType string = 'AAD'

    @description('The endpoint URI of the connected service')
    param endpointUri string

    @description('The resource ID of the connected service')
    param resourceId string = ''

    @secure()
    param key string = ''

    resource connection 'Microsoft.MachineLearningServices/workspaces/connections@2024-04-01-preview' = {
      name: '${hubName}/${name}'
      properties: {
        category: category
        target: endpointUri
        authType: authType
        isSharedToAll: true
        credentials: authType == 'ApiKey' ? {
          key: key
        } : null
        metadata: {
          ApiType: 'Azure'
          ResourceId: resourceId
        }
      }
    }

Run the deployment:

    RESOURCE_GROUP="<resource-group-name>"
    ACCOUNT_NAME="<azure-ai-model-inference-name>"
    ENDPOINT_URI="https://<azure-ai-model-inference-name>.services.ai.azure.com"
    RESOURCE_ID="<resource-id>"
    HUB_NAME="<hub-name>"

    # The template declares the connection name as `name` (it has no `accountName`
    # parameter), so this example reuses the resource name as the connection name.
    az deployment group create \
        --resource-group "$RESOURCE_GROUP" \
        --template-file ai-services-connection-template.bicep \
        --parameters name="$ACCOUNT_NAME" hubName="$HUB_NAME" endpointUri="$ENDPOINT_URI" resourceId="$RESOURCE_ID"
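The template restricts `authType` to `AAD`, `ApiKey`, `ManagedIdentity`, or `None`, and a typo there only surfaces when the deployment runs. As a rough sketch, a small wrapper can check the value locally and print the parameter assignments the template declares. The function name `connection_parameters` is hypothetical; the parameter names come from the template above.

```shell
# Hypothetical helper: print one key=value assignment per line for the
# parameters declared in ai-services-connection-template.bicep, rejecting
# auth types the template's @allowed list would refuse.
connection_parameters() {
  # $1: connection name, $2: hub name, $3: endpoint URI,
  # $4: resource ID, $5: auth type (optional; AAD is the template default)
  auth_type="${5:-AAD}"
  case "$auth_type" in
    AAD|ApiKey|ManagedIdentity|None) ;;
    *) echo "unsupported authType: $auth_type" >&2; return 1 ;;
  esac
  printf 'name=%s\nhubName=%s\nendpointUri=%s\nresourceId=%s\nauthType=%s\n' \
    "$1" "$2" "$3" "$4" "$auth_type"
}

# Example usage (not executed here). The unquoted substitution relies on
# word splitting, so it only works when no value contains whitespace:
#   az deployment group create \
#       --resource-group "<resource-group-name>" \
#       --template-file ai-services-connection-template.bicep \
#       --parameters $(connection_parameters "<connection-name>" "<hub-name>" "<endpoint-uri>" "<resource-id>")
```

When `authType` is `ApiKey`, the template also reads the secure `key` parameter, which this sketch deliberately leaves out so the secret is not echoed; it would need to be supplied separately.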